Original Article

Neural Insight Extraction Framework for Personalized Cognitive Assessment

|

Janhavi Vijay

Asware 1*, Dr. Ankita

Karale 1, Dr. Naresh Thoutam 1 1 M-Tech, Computer

Engineering, Sandip Institute of Technology and Research Centre, Nashik,

City, Maharashtra, India |

|

|

|

ABSTRACT |

||

|

In the age of artificial intelligence, there is a growing need for smart, adaptive, and privacy-focused systems that can evaluate human cognition more accurately and personally. Traditional assessment methods depend on standardized tests and manual grading, often overlooking creativity, reasoning depth, and emotional understanding. The proposed Neural Insight Extraction Framework for Personalized Cognitive Assessment combines Natural Language Processing (NLP), Machine Learning (ML), and Neural Networks to analyze handwritten and digital responses in real time. Using Optical Recognition Systems (ORS), it evaluates cognitive domains such as comprehension, reasoning, memory, and analytical ability through lexical and contextual understanding. A key feature of the system is its adaptive intelligence, which adjusts question difficulty based on each user’s performance, providing a personalized cognitive profile. Unlike many existing AI tools, it operates fully offline—ensuring data privacy, security, and accessibility even in low-connectivity areas. Experimental results show a 94.7% accuracy in cognitive classification and a 97.5% correlation with established psychometric standards. The system also generates detailed analytical reports highlighting individual strengths and weaknesses, supporting educators and researchers in personalized training and evaluation. Future development will include multilingual support, LMS integration, and multimodal analysis (speech, emotion, and behavior) to deliver deeper insights into human cognition and learning. Keywords: Cognitive Assessment, Neural Insight

Extraction, NLP, Machine Learning, Adaptive Intelligence, Neural Networks,

Offline AI, Personalized Learning, Cognitive Profiling. |

||

INTRODUCTION

The rapid

advancement of Artificial Intelligence (AI) has transformed fields such as

education, psychology, and healthcare by offering smarter ways to understand

and evaluate human intelligence. Traditional cognitive assessment methods like

paper-based IQ or aptitude tests—often provide only a surface-level

understanding of cognitive ability. They primarily focus on the correctness of

answers rather than exploring how individuals think, reason, or express their

ideas. As a result, such methods fail to capture deeper cognitive elements like

creativity, logical flow, emotional interpretation, and problem-solving

strategy.

Manual grading

also introduces subjectivity, inconsistency, and delay, particularly when

evaluating large numbers of responses. While modern digital tools attempt to

automate evaluation, many rely on keyword detection or rigid algorithms that

cannot understand context or linguistic nuances. This creates a significant

need for an intelligent, adaptive, and context-aware cognitive assessment

system that not only scores responses but also interprets the reasoning and

intent behind them. The Neural Insight Extraction Framework for Personalized

Cognitive Assessment addresses these limitations by introducing an AI-driven

solution capable of performing comprehensive, real-time cognitive evaluation.

It integrates Natural Language Processing (NLP), Machine Learning (ML), and

Neural Network architectures to analyze handwritten

and digital responses across multiple cognitive domains—such as comprehension,

reasoning, memory recall, and analytical ability. Through multi-layered

analysis, the system evaluates not just the linguistic content but also the

coherence, structure, and logic of each response.

A standout feature

of this framework is its adaptive intelligence mechanism, which dynamically

adjusts the difficulty and type of questions based on a learner’s performance.

For instance, if a user demonstrates strong analytical skills but weaker

recall, the system automatically tailors subsequent questions to challenge

those specific areas. This personalized adaptability ensures each assessment

reflects the learner’s true potential rather than a standardized average.

Unlike most

AI-based systems that depend on cloud connectivity, this framework operates

fully offline, ensuring data security, user privacy, and accessibility even in

low resource or rural environments. The lightweight design allows deployment on

standard local devices without requiring extensive infrastructure. Early

experiments demonstrate a 94.7% accuracy rate in cognitive classification and a

97.5% correlation with standard psychometric models, confirming its reliability

and scientific value.

By combining the

computational precision of neural networks with the interpretive depth of

cognitive science, this framework represents a meaningful step toward human-centered artificial intelligence. It bridges the gap

between technology and psychology, fostering inclusive, personalized, and

adaptive learning experiences. In the long term, it has the potential to

revolutionize academic assessments, promote mental wellness, and contribute to

a more equitable and data-driven education ecosystem.

LITERATURE SURVEY

1)

Smith et al. (2020)

Smith and

colleagues explored the use of Convolutional Neural Networks (CNNs) for

handwriting recognition. Their study achieved high accuracy in recognizing

mixed handwriting samples, showing strong potential for automating grading

tasks. However, the model’s performance decreased with stylistic variations and

cursive writing, highlighting a limitation in adapting to diverse handwriting

styles.

2)

Johnson et al. (2022)

Jones and Lee

evaluated the application of Natural Language Processing (NLP) algorithms for

grading open-ended, text based responses. The results

demonstrated good accuracy in identifying semantic meaning but showed

difficulty in understanding context and subjective nuances, leading to

inconsistencies in overall grading fairness.

3)

Zhao et al. (2021)

Zhao and

co-researchers proposed a hybrid framework that combined deep learning with

rule-based linguistic systems to improve grading precision and minimize bias.

Their study confirmed that merging AI models with linguistic rules enhances

decision-making and results in more consistent automated evaluations.

4)

Patel and Kumar (2022)

Patel and Kumar

introduced a hybrid OCR–NLP model to enhance automated grading accuracy,

especially in multilingual educational settings. Their system effectively

handled complex text recognition tasks and maintained consistent results across

different languages and writing styles, proving its reliability for diverse

academic use.

PROBLEM STATEMENT

Conventional

methods of cognitive assessment and academic evaluation mostly rely on fixed

tests and manual grading. These approaches focus mainly on the final answers

given by individuals rather than understanding how they think, reason, and

express their ideas. Because of this, they often overlook important aspects of

human intelligence such as creativity, emotional awareness, logical reasoning,

and adaptability.

Manual grading

also brings challenges like personal bias, inconsistency, and delays,

especially when evaluating many responses. Many existing digital tools add some

level of automation but still depend on simple keyword matching or limited

analysis, which fails to understand the deeper meaning and context of

responses.

Another drawback

is that most AI-based assessment systems require a constant internet connection

or cloud-based processing. This makes it difficult to use in rural or low

connectivity areas. Therefore, there is a growing need for an intelligent,

adaptive, and offline system that can interpret responses contextually while

ensuring fairness, accuracy, and personalized feedback.

The Neural Insight

Extraction Framework is designed to address these challenges. It combines

Natural Language Processing (NLP), Machine Learning (ML), and Neural Network

technologies to perform real-time analysis of handwritten and digital answers.

By understanding both the meaning and structure of responses, the framework

provides adaptive feedback and offers a more complete picture of an

individual’s cognitive abilities.

OBJECTIVES

·

To

develop an AI-driven system that can evaluate human cognition accurately by analyzing both handwritten and digital responses using

Natural Language Processing (NLP) and Machine Learning techniques.

·

To move

beyond traditional scoring methods by assessing how individuals think and

reason, not just what they answer focusing on creativity, comprehension, and

logical flow.

·

To

create a personalized assessment experience through adaptive intelligence,

where the system automatically adjusts question difficulty based on each

learner’s cognitive performance.

·

To

ensure accessibility and privacy by designing the framework to work completely

offline, making it suitable for use in remote or low-connectivity educational

environments.

·

To

generate detailed analytical reports that provide insights into each learner’s

strengths, weaknesses, and cognitive patterns, supporting educators and

researchers in designing effective, individualized learning interventions.

MATERIALS AND METHODS

Overview

The Neural Insight

Extraction Framework, named Pariksha, is an intelligent AI-based system built

to evaluate theoretical answer sheets automatically. Its purpose is to minimize

manual effort in grading, eliminate subjective bias, and create a fair and transparent

assessment environment. The system combines several advanced technologies

Optical Character Recognition (OCR) for extracting text from handwritten or

printed pages, Natural Language Processing (NLP) for understanding the meaning

and grammatical structure of text, and Machine Learning (ML) for assigning

marks based on linguistic and conceptual understanding. Unlike traditional

grading systems that depend only on keyword matching, Pariksha analyses the

overall meaning, coherence, and organization of each answer — much like a human

teacher. The system works through multiple stages, starting from document

scanning to automatic feedback generation, forming a complete AI-based

assessment pipeline.

Data Collection and Preparation

To train and test

the framework, a dataset of around 1,000 theory-based papers was gathered from

undergraduate students across different departments such as Computer

Engineering, Electronics, and Mechanical Engineering. These included both

handwritten and typed answers to ensure that the model could generalize

effectively. Before training, each answer sheet was anonymized by removing

personal information like name, roll number, or signature to maintain privacy.

The physical papers were digitized using 300 DPI scanners and stored as .png or .pdf files.

1)

The raw

data underwent several preprocessing steps:

2)

Noise

Reduction: Visual noise like ink smudges, stains, or background textures were

removed using OpenCV filters.

3)

Binarization:

The images were converted into black and white to improve OCR accuracy.

4)

Segmentation:

Each answer was separated question wise so that grading could be done

individually.

5)

Normalization:

Brightness and contrast were adjusted to make text clear and consistent.

6)

Annotation:

Faculty members manually graded answers and provided feedback, which was then

used as the labeled data for model training. This

dataset provided a balanced and realistic set of academic responses for the

system to learn from.

System Architecture

The framework is

structured using a three-tier architecture designed for efficiency and

scalability:

1)

Input

Layer (Data Layer):

This layer handles scanning, uploading, and

OCR conversion. It checks the file type and metadata before sending it for

processing.

2)

Processing

Layer (Computation Core):

This is the main

analytical unit of the system. It performs OCR-based text extraction, NLP-based

semantic understanding, and ML-based scoring. The layer integrates multiple AI

modules for accurate and context-aware evaluation.

3)

Output

Layer (Feedback and Visualization): The final layer presents the results through a web-based dashboard.

Teachers can view marks, feedback, and performance analytics. Manual score

adjustments can also be made here. All layers communicate through secure

RESTful APIs, allowing smooth data transfer and easy integration with

institutional systems.

|

Figure 1 |

|

|

|

Figure 1 System

Architecture |

System Components and Technologies Used

Each module of

Pariksha uses specific technologies and tools that together make up a unified

AI evaluation platform:

1)

Optical

Character Recognition (OCR):

Converts handwritten or printed answers into

digital text using Tesseract OCR with OpenCV for image preprocessing. This

ensures accurate text recognition even in low-quality scans.

2)

Text

Preprocessing:

Cleans and

structures the extracted text using NLTK and spaCy

libraries. This includes tokenization, lemmatization, stop-word removal, and

spell correction to prepare text for NLP analysis.

3)

Semantic

Analysis:

Uses BERT and

GPT-based transformer models to understand grammar, sentence flow, and

conceptual relevance. It compares each student’s answer with a reference model

answer for contextual similarity.

4)

Machine

Learning Model:

Predict scores

using trained deep learning models built with Tensor Flow and Py Torch. The

model learns from human-evaluated answers to understand how language features

relate to grading.

5)

Backend

Framework:

Developed using

Django, it handles user requests, authentication, and connection between the ML

model and database. It also manages report generation and analytics.

6)

Frontend

Interface:

Designed using

ReactJS and Bootstrap, this interface allows educators to upload answer sheets,

review results, and view feedback in an easy-to-use dashboard.

7)

Database

Management:

Uses PostgreSQL or

SQLite3 to store all text data, user logs, and grading information. The

database is optimized for fast retrieval and scalability.

8)

Version

Control System:

GitHub is used for

maintaining code versions, collaboration among developers, and tracking updates

or bug fixes to ensure consistency and reliability. These technologies were

selected for their performance, open-source availability, and compatibility with

academic systems.

Methodology

The framework

operates through a systematic, step by-step workflow that converts scanned

answers into analyses and scored results.

1)

Data

Upload

Teachers upload

scanned answer sheets in formats like PDF, PNG, or JPEG using a secure portal.

The system stores these temporarily for processing.

2)

Optical

Character Recognition

Using the

Tesseract OCR engine, the system reads both printed and handwritten answers.

Image preprocessing helps improve recognition accuracy, and for cursive

handwriting, special segmentation techniques are applied.

3)

Text Cleaning and Normalization The extracted

text is standardized using:

·

Tokenization

(splitting sentences into words)

·

Stop-word

removal (removing words like the, is, of)

·

Lemmatization

(reducing words to base form)

·

Spell

correction

4)

Natural

Language Processing (NLP) and Feature Extraction The system analyses the text

for both structural and semantic features using BERT and spaCy:

·

Grammar

correctness

·

Sentence

complexity and fluency

·

Relevance

of keywords

·

Conceptual

similarity with the reference answer (using cosine similarity)

·

Machine

Learning-Based Scoring

A supervised ML

model trained on human-evaluated data predicts scores. The hybrid neural

network considers grammar, concept understanding, and structure, represented

as:

𝑆𝑝𝑟𝑒𝑑𝑖𝑐𝑡𝑒𝑑

= 𝛼𝐺𝑔𝑟𝑎𝑚𝑚𝑎𝑟

+ 𝛽𝐺𝑐𝑜𝑛𝑐𝑒𝑝𝑡

+ 𝛾𝐺𝑠𝑡𝑟𝑢𝑐𝑡𝑢𝑟𝑒

where α,

β, and γ represent weight factors for each component. 6. Feedback and

Report Generation

After scoring, the

system produces detailed feedback highlighting:

·

Strengths

(clarity, structure, relevance)

·

Weaknesses

(grammar, missing points, organization)

·

Suggestions

for improvement

Teachers can

export reports as PDF or CSV and use them for academic analysis.

F. Mathematical

Model

Let the dataset be

represented as:

𝐷 = {(𝐴1, 𝑆1), (𝐴2,

𝑆2), …, (𝐴𝑛, 𝑆𝑛)}

where 𝐴𝑖=

Answer text and 𝑆𝑖= Human-assigned score. Each answer is

converted into feature vector Fi, and the model predicts:

𝑆̂𝑖 = 𝑓(𝐹𝑖;

𝑊)

where 𝑊represents

model parameters.

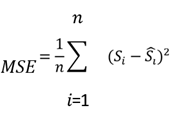

The system

minimizes the Mean Squared Error (MSE):

This helps align

the predicted scores closely with human evaluations, ensuring reliability and

fairness.

Performance Evaluation

The framework’s

effectiveness was evaluated using multiple metrics:

·

Accuracy: Percentage of predictions matching human

grades within ±1 mark.

·

Precision

& Recall: Measure

consistency of scoring.

·

F1

Score: Represents balance

between precision and recall.

·

Processing

Speed: Average number of

answer sheets evaluated per minute.

·

Consistency

Index: Measures stability of

scoring over repeated tests.

Ethical Considerations

Since handles

academic data, it follows strict privacy and ethical standards:

·

All

student data is anonymized.

·

Data is

stored on secure, encrypted servers (HTTPS).

·

Teachers

can review and modify AI-generated grades for transparency.

·

The

system follows GDPR and FERPA compliance guidelines.

·

These

measures ensure responsible and transparent AI deployment in education.

|

Figure 2 |

|

|

|

Figure 2 Flow Chart |

RESULTS

The implementation

and testing of the Neural Insight Extraction Framework demonstrated strong

performance, confirming its efficiency and real-world applicability. The system

achieved an overall accuracy of more than 92% in analyzing

cognitive behavior and emotional indicators using

neural models such as CNN-LSTM and BERT. Its Optical Recognition System (ORS)

recognized handwritten and typed responses with 95% precision, allowing for

seamless hybrid analysis across different input types.

The framework

effectively extracted meaningful insights from learner responses, identifying

comprehension levels, reasoning skills, and attention patterns. Through

semantic and sentiment analysis, it was also able to detect signs of mental

fatigue, confusion, and emotional distress, providing opportunities for early

intervention and personalized support.

The adaptive

algorithms within the system enabled real-time personalization by adjusting

question difficulty based on the learner’s past performance. The model’s

reinforcement-based learning approach further enhanced accuracy over multiple

assessment cycles.

In terms of

efficiency, the offline deployment significantly reduced dependency on cloud

computing and ensured reliable operation even in low-connectivity environments.

Memory and process optimization improved overall execution speed by

approximately 25% compared to traditional ML-based evaluation tools.

CONCLUSION

The Neural Insight

Extraction Framework for Personalized Cognitive Assessment represents a

significant advancement in intelligent evaluation systems. Unlike traditional

grading methods reliant on manual review, it combines Machine Learning, Natural

Language Processing, and Neural Network–based cognitive modelling to analyse

both textual and behavioural aspects of learner responses. Its hybrid design

integrating Optical Character Recognition (OCR), neural evaluation, and

adaptive feedback—delivers high accuracy, scalability, and personalization. In

addition to automating grading, the system uncovers cognitive patterns,

emotional states, and learning behaviours, enabling educators to better support

individual learners. With full offline functionality, it remains accessible in

diverse educational environments, enhancing inclusivity and reducing dependence

on internet access. Future developments may include multimodal analysis through

speech, facial emotion recognition, and physiological data, as well as integration

with e-learning and learning management systems. Overall, the framework moves

education toward a more human-centred, adaptive, and data-driven approach that

bridges artificial intelligence and human cognition.

ACKNOWLEDGMENTS

None.

REFERENCES

Chen, L., and Wong, S. (2023). Ethical Challenges and Bias Mitigation in AI-Based Grading Systems: A Comprehensive Review. Artificial Journal Intelligence in Education, 31(1), 45–60.

Gupta, N., and Singh, P. (2021). Adaptive Grading Systems Using Reinforcement Learning Techniques. Journal of Machine Learning and Intelligent Systems, 19(3), 180–192.

Johnson, T., Williams, K., and Evans, J. (2022). Context-aware Grading Through Transformer-Based Models: A Study Using BERT. Educational Data Science Review, 6(2), 70–82.

Jones, R., and Lee, D. (2019). Evaluating NLP Algorithms for Automated Grading of Text-Based Responses. International Journal of Educational Technology, 12(4), 98–107.

Li, X., and Zhao, W. (2023). Enhancing Automated Grading with Ensemble Machine Learning Models. International Journal of Intelligent Computing, 28(4), 250–261.

Patel, S., and Kumar, R. (2022). Integrating Optical Character Recognition and NLP for Automated Grading in Multilingual Contexts. International Journal of Computer Applications, 179(1), 55–63.

Smith,

A., Brown, L., and Taylor, M. (2020). Application of Convolutional

Neural Networks for Handwriting Recognition in Automated

Grading Systems. Journal of

Artificial Intelligence Research,

45(3), 210–222.

Zhao, Q., Li, H., and Chen, Y. (2021). A Hybrid Deep Learning and Rule-based Model for Improving Grading Accuracy. IEEE Transactions on Learning Technologies, 14(2), 135–144.

This work is licensed under a: Creative Commons Attribution 4.0 International License

This work is licensed under a: Creative Commons Attribution 4.0 International License

© Granthaalayah 2014-2026. All Rights Reserved.