ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


Decoding Viewer Emotions in Visual Media: Neuromarketing Perspectives on Digital Art and Online Visual Advertising

Dr. Archana Borde 1, Dr. Neelam Raut 2, Dr. Girdhar Gopal 3, Dr. Prashant Kalshetti 4, Dr. Gaganpreet Kaur Ahluwalia 5, Dr. Nilesh Anute 6, Dr. Shailesh Tripathi 7

1 Associate Professor, Symbiosis Skills and Professional University, Pune, India

2 Associate Professor, Prin. L. N. Welingkar Institute of Management Development and Research (PGDM), Mumbai, India

3 Associate Professor, SHEAT College of Engineering, Babatpur, Varanasi, Uttar Pradesh, India

4 Professor and Head, Department of BBA, Dr. D Y Patil Vidyapeeth, Global Business School and Research Centre, Pune, India

5 Associate Professor and Area Chair (MBA Program), Marketing Management, School of Business, Indira University, Pune, India

6 Associate Professor, Balaji Institute of Management and Human Resource Development, Sri Balaji University, Pune, India

7 Professor, Balaji Institute of Management and Human Resource Development, Sri Balaji University, Pune, India

ABSTRACT

The emotional reaction of viewers to visual media is a central concern in digital art and online visual advertising, where aesthetic impressions directly shape attention, memory, and choice. Current evaluation methods rely on self-reports and surface-level engagement metrics, which reveal little about the underlying affective reactions that drive consumer behavior. This paper addresses that gap by examining viewer emotion decoding from a neuromarketing perspective that combines computational emotion analysis with visual media research. The main goal is to explore how visual characteristics, narrative, and style in digital art and web-based advertisements elicit measurable emotional responses, and to connect those responses to engagement and persuasion outcomes. The paper synthesizes results on emotion recognition models, biometric measures, and behavioral analytics into a hybrid analytical framework for affect-based visual comparison. The central results show that emotion-sensitive visual design is considerably more effective at retaining attention and at strengthening emotional appeal and brand memorability, with positive affect and moderate arousal emerging as the strongest predictors of viewer involvement across platforms. Moreover, emotion-adaptive imagery proved more effective than non-adaptive imagery at conveying both artistic meaning and commercial messages. The scope of the paper extends beyond commercial advertising to digital art exhibitions, immersive media, and culturally contextual visual storytelling, with an emphasis on ethical aspects and interpretability. By connecting neuromarketing with AI-based visual analytics and emotion recognition, this work provides a systematic basis for research on emotionally intelligent visual media and for further study of visual communication and neural markers.


Received 11 May 2025; Accepted 14 September 2025; Published 25 December 2025

Corresponding Author: Dr. Archana Borde, archanaajitborde@gmail.com

DOI: 10.29121/shodhkosh.v6.i4s.2025.6970

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, reprint, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Neuromarketing, Emotion Recognition, Visual Media Analytics, Digital Art, Online Visual Advertising, Viewer Engagement

1. INTRODUCTION

Emotional persuasion plays a decisive role in how viewers engage with, interpret, and react to visual media in modern digital contexts. Online art communities, social networks, and digital advertising systems are increasingly saturated with images competing for scarce viewer attention, which makes emotional connection an essential factor for success. Affective responses are shaped by visual stimuli such as color, composition, movement, symbolism, and narrative pattern, and these responses in turn influence cognition, memory formation, and behavioral intention. Past studies in visual communication and media psychology have consistently shown that feelings mediate the relationship between visual perception and decision-making, directly influencing engagement, preference, and persuasion Antonov et al. (2024), Šola et al. (2024). In digitally mediated situations, where interactions are brief and highly volatile, the ability of visual content to arouse immediate emotional reactions has become even more important for both artistic expressiveness and marketing efficacy Qureshi et al. (2020).

Despite this significance, conventional methods of assessing viewer engagement rely largely on self-report tools such as surveys, interviews, and focus groups. Although these approaches provide subjective insight, they suffer from recall bias and social desirability effects, and they cannot access the deeply hidden emotional processes that often underlie authentic responses Li et al. (2022). Behavioral measures such as click-through rates, view counts, and dwell time likewise fail to reveal the complexity of the affective states behind visual impact Xia et al. (2020). These constraints create a critical methodological gap, especially in digital art and online advertising, where emotional intensity and aesthetic experience play the most important role in meaning-making and audience connection.

Neuromarketing and emotion-based visual analytics open new possibilities for overcoming these obstacles. Neuromarketing combines neuroscience, psychology, and marketing to examine how the human brain and physiology react to stimuli, in order to measure implicit emotional responses that self-reported information cannot capture Šola et al. (2022). Advances in artificial intelligence, affective computing, and computer vision now make it possible to decode viewer emotion at large scale from facial expressions, eye-movement patterns, and physiological and behavioral signals Sánchez-Fernández et al. (2021). Applied to visual media, these techniques support emotion-based study of how digital artworks and advertisements are perceived, interpreted, and remembered. Against this background, the research problem underpinning this study is the absence of unified frameworks that systematically relate emotional reactions to visual media attributes and to quantifiable engagement outcomes of artistic and advertising interest. In the literature, digital art and commercial advertising are usually treated as distinct areas, even though both rest on visual persuasion and emotional appeal Joseph and Maheswari (2025). In addition, ethical transparency and interpretability have not been fully investigated in emotion decoding systems, especially in multicultural visual settings.

The aim of this research is to analyze viewer emotion decoding through a neuromarketing approach that integrates emotion recognition methods with visual media analysis. The article contributes a hierarchical conceptual approach linking visual characteristics, emotional reactions, and engagement measures, which is useful to digital artists, advertisers, and researchers. By bridging neuromarketing theory, AI-based emotion analytics, and the study of visual culture, this research contributes to knowledge on the creation and assessment of emotionally intelligent visual media and outlines prospects for responsible and explainable emotion-responsive systems Talala et al. (2024).

2. Theoretical Foundations

The aesthetic experience of visual media rests on established theories of emotion, affect, and visual perception that explain how sensory input is transformed into subjective experience. Classical psychological theories, including the dimensional theory of emotion, describe affective states along continuous scales of valence and arousal and provide an organized foundation for explaining emotional responses to visual stimuli Mancini et al. (2023). Cognitive-appraisal theory further proposes that emotions arise from evaluative processes in which viewers judge visual information against their personal goals, cultural background, and previous experience Antonov et al. (2024). From a perceptual perspective, theories of visual perception emphasize the role of low-level features such as color contrast, symmetry, motion, and spatial organization in guiding attention and influencing affective reactions. Together, these frameworks explain why visual media can trigger quick, even subconscious emotional responses that precede conscious thought, which makes them especially relevant in fast-paced digital contexts.

Neuromarketing builds on these theoretical foundations by applying neuroscientific and psychophysiological methods to advertising and visual communication. A fundamental premise of neuromarketing is that consumer behavior is better explained by emotional involvement than by rational deliberation, since most decisions are influenced by implicit affective judgments Panić et al. (2023). Techniques such as eye-tracking, electroencephalography (EEG), facial expression analysis, and biometric monitoring have been used to reveal how viewers attend to, emotionally process, and retain visual advertisements. Neuromarketing research has also shown that emotionally congruent imagery improves message understanding, brand association, and retention, especially in visually dense online settings Nilashi et al. (2020). Emotional aesthetics also occupies a prominent place in digital art practice, where artists increasingly engage audiences through immersive, interactive, and data-driven visual experiences. In contrast to traditional static artworks, digital art can respond dynamically to a viewer's presence, actions, or environmental data, creating emotionally participatory experiences Schröter et al. (2021). The theory of emotional aesthetics in digital art focuses on how affective intensity, ambiguity, and sensory immersion contribute to the construction of meaning. Research in this field highlights the role of color theory, generative patterns, motion, and sound integration in emotional interpretation, establishing digital art as a promising subject for research on emotion-based visual perception beyond commercial goals Alsharif et al. (2022).

In parallel, AI-based emotion recognition and affective computing have developed rapidly and now allow human feelings to be analyzed from visual, auditory, and behavioral signals. Machine learning and deep learning models can now infer emotional states at scale from facial expressions, gaze patterns, posture, and interaction patterns Adedoyin and Soykan (2023).

Table 1 Summary Comparison of Emotion-Driven Visual Media Approaches

| Approach / Perspective | Emotional Basis | Data Source | Analysis Method | Strengths | Limitations | Typical Application |
|---|---|---|---|---|---|---|
| Classical Emotion Theories | Valence–Arousal models | Psychological experiments | Conceptual and statistical analysis | Strong theoretical grounding | Limited real-time insight | Media psychology studies |
| Cognitive–Appraisal Theory | Meaning interpretation | Self-reports, perception tasks | Qualitative and cognitive modeling | Explains subjective variation | High subjectivity | Visual perception research |
| Traditional Advertising Analytics | Behavioral response | Clicks, views, surveys | Descriptive statistics | Easy to implement | Misses subconscious emotions | Online advertising evaluation |
| Neuromarketing Methods | Implicit affective response | EEG, eye-tracking, biometrics | Signal processing and analytics | Captures subconscious emotion | Costly, small samples | Brand and ad testing |
| Digital Art Emotional Aesthetics | Experiential affect | Interactive visual stimuli | Interpretive analysis | Rich emotional depth | Hard to quantify | Digital art exhibitions |
| AI-Based Emotion Recognition | Observable expressions | Facial, gaze, behavior data | ML / Deep learning | Scalable, objective | Bias, interpretability issues | Large-scale media analysis |
| Affective Computing Systems | Multimodal emotion | Visual + physiological data | Multimodal fusion models | Higher accuracy | Data complexity | Emotion-aware interfaces |
| Static Visual Design Evaluation | Visual appeal | Design attributes | Heuristic analysis | Simple comparison | Ignores viewer emotion | Graphic design review |
| Adaptive Emotion-Aware Media | Real-time affect | Live user interaction | Dynamic AI models | Personalized engagement | Ethical concerns | Interactive advertising |

Table 1 gives a brief comparative summary of the theoretical, analytical, and applied methods used in emotion-driven visual media research.

3. Conceptual Framework for Emotion Decoding in Visual Media

3.1. Integrated neuromarketing–AI analytical framework

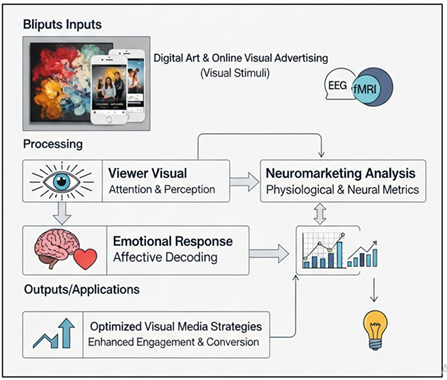

The neuromarketing–AI pipeline is an analytical framework that captures and interprets viewer emotions and links them to visual media outcomes. In the input layer, visual stimuli such as digital artworks or online advertisements are presented in controlled or real-life digital environments. Viewer interaction data are then collected through complementary channels: behavioral indicators (dwell time, scrolling, clicks), visual cues (facial expressions, gaze patterns), and contextual metadata (device type, platform, viewing time). The neuromarketing layer focuses on implicit affective reactions, converting these signals into physiological and perceptual indicators of attention and emotion. The AI layer, in turn, uses machine learning and deep learning models to extract salient features, perform emotion classification or regression, and predict temporal emotional dynamics.
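As an illustration of how the three input channels described above might be grouped before they reach the neuromarketing and AI layers, the sketch below defines one record per viewer exposure. All class and field names (`ViewerSession`, `dwell_time_s`, `viewing_hour`, and so on) are hypothetical; the framework does not prescribe a data schema.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class BehavioralSignals:
    dwell_time_s: float   # seconds the stimulus stayed in view
    scroll_events: int    # scroll events recorded during exposure
    clicks: int           # clicks on the stimulus


@dataclass
class VisualSignals:
    # Per-frame facial expression intensity on an assumed 0..1 scale.
    expression_intensity: List[float] = field(default_factory=list)
    # Gaze fixations as (x, y) screen coordinates.
    gaze_points: List[Tuple[float, float]] = field(default_factory=list)


@dataclass
class ContextMetadata:
    device: str           # e.g. "mobile" or "desktop"
    platform: str         # e.g. "social_feed"
    viewing_hour: int     # local hour of day, 0..23


@dataclass
class ViewerSession:
    """One viewer's exposure to one stimulus, grouped by input channel (hypothetical schema)."""
    stimulus_id: str
    behavioral: BehavioralSignals
    visual: VisualSignals
    context: ContextMetadata

    def has_all_channels(self) -> bool:
        """True when every channel carries at least some signal."""
        return (
            self.behavioral.dwell_time_s > 0
            and len(self.visual.expression_intensity) > 0
            and bool(self.context.platform)
        )


session = ViewerSession(
    stimulus_id="ad_001",
    behavioral=BehavioralSignals(dwell_time_s=12.5, scroll_events=3, clicks=1),
    visual=VisualSignals(expression_intensity=[0.2, 0.4, 0.5]),
    context=ContextMetadata(device="mobile", platform="social_feed", viewing_hour=21),
)
print(session.has_all_channels())  # True
```

Keeping the channels in separate sub-records mirrors the layered design: each later stage can consume only the channel it needs.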

Figure 1 Conceptual Workflow of Neuromarketing-Based Emotion Decoding in Visual Media

Figure 1 represents a hierarchical pipeline in which digital art and advertising stimuli evoke viewer perception and emotional reactions, which are measured using neuromarketing criteria. These insights are converted into optimized visual media guidelines that support better engagement, informed design choices, and improved visual communication outcomes grounded in emotional considerations.

3.2. Visual stimuli attributes: color, form, motion, and narrative

Visual stimulus attributes are the major drivers of emotional response in digital media. Color influences mood and arousal through hue, saturation, and contrast, and often evokes culturally conditioned affective responses. Form and composition govern perceptual organization, balance, and harmony, creating a sense of comfort, tension, or intrigue. Motion introduces change over time, which increases attention and emotional intensity, especially in animated or interactive media. Narrative structure provides semantic unity and lets viewers connect emotionally through storytelling, symbolism, and implied meaning. These features act synergistically, producing layered emotional experiences rather than isolated responses.
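To show how the color attributes mentioned above can be quantified, the sketch below summarizes an image's mean saturation, mean brightness, and RMS luminance contrast using Python's standard `colorsys` module. The function name and the choice of these three statistics are assumptions for illustration; the paper does not specify its color features. Hue is left out because averaging a circular quantity needs extra care.

```python
import colorsys
import math
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit RGB


def color_attributes(pixels: List[Pixel]) -> Dict[str, float]:
    """Summarize saturation, brightness, and contrast of an image given as a flat pixel list."""
    if not pixels:
        raise ValueError("image has no pixels")
    sats, vals, lumas = [], [], []
    for r, g, b in pixels:
        rf, gf, bf = r / 255.0, g / 255.0, b / 255.0
        _, s, v = colorsys.rgb_to_hsv(rf, gf, bf)
        sats.append(s)
        vals.append(v)
        # Rec. 709 weights applied directly to gamma-encoded values (an approximation).
        lumas.append(0.2126 * rf + 0.7152 * gf + 0.0722 * bf)
    n = len(pixels)
    mean_luma = sum(lumas) / n
    # RMS contrast: standard deviation of luminance across pixels.
    rms_contrast = math.sqrt(sum((y - mean_luma) ** 2 for y in lumas) / n)
    return {
        "mean_saturation": sum(sats) / n,
        "mean_brightness": sum(vals) / n,
        "rms_contrast": rms_contrast,
    }


grey = [(128, 128, 128)] * 4
checker = [(255, 0, 0), (0, 0, 0), (255, 0, 0), (0, 0, 0)]
print(color_attributes(grey)["mean_saturation"])     # 0.0
print(color_attributes(checker)["mean_saturation"])  # 0.5
```

A flat grey patch has zero saturation and zero contrast, whereas the red and black pattern scores higher on both, which is the kind of difference the section associates with stronger arousal.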

3.3. Emotional dimensions: valence, arousal, and dominance

The emotional dimensions of valence, arousal, and dominance provide a continuous and interpretable measure of viewer affect. Valence represents the positivity or negativity of an emotional response, arousal represents its intensity or level of activation, and dominance represents the viewer's sense of being in control or being controlled. This dimensional model supports more nuanced analysis than discrete emotion labels, allowing subtle emotional changes to be measured across different visual and audience contexts.
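A minimal sketch of the dimensional model as a data type, assuming each axis is scaled to [0, 1] as in the values reported in Table 2. The class name, the 0.5 midpoint used for coarse quadrant labels, and the use of Euclidean distance to quantify a shift in affect are illustrative assumptions rather than part of the paper's method.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VAD:
    """A point in valence-arousal-dominance space, each axis scaled to [0, 1]."""
    valence: float
    arousal: float
    dominance: float

    def __post_init__(self) -> None:
        for name in ("valence", "arousal", "dominance"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1], got {value}")

    def quadrant(self, midpoint: float = 0.5) -> str:
        """Coarse valence-arousal label; the 0.5 midpoint is an assumption."""
        v = "positive" if self.valence >= midpoint else "negative"
        a = "high-arousal" if self.arousal >= midpoint else "low-arousal"
        return f"{v}/{a}"

    def distance(self, other: "VAD") -> float:
        """Euclidean distance between two affective states."""
        return math.dist(
            (self.valence, self.arousal, self.dominance),
            (other.valence, other.arousal, other.dominance),
        )


# Averages for two media types, taken from Table 2.
static_art = VAD(0.62, 0.48, 0.55)
adaptive = VAD(0.79, 0.71, 0.67)
print(static_art.quadrant())                    # positive/low-arousal
print(adaptive.quadrant())                      # positive/high-arousal
print(round(static_art.distance(adaptive), 3))  # 0.31
```

The continuous distance captures a shift that a discrete label would hide: both states are "positive", yet they differ noticeably in arousal.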

4. Methodological Approach

4.1. Data sources: digital artworks and online visual advertisements

This study uses a curated subset of a publicly available multimodal affective dataset of online visual advertisements, which includes professionally designed static and animated advertisements together with records of viewer interaction. It consists of high-resolution visual material, synchronized viewer-facing webcam recordings, and interaction logs obtained during controlled online viewing. Each advertisement is annotated with continuous valence–arousal ratings, and the engagement indicators include viewing time and recall ratings. The dataset is considered valid and broadly applicable to affective computing research because of its standardized experimental protocol, variety of visual styles, and balanced distribution of emotions. It supports reproducible experimentation and approximates real-world digital advertising conditions, making it an appropriate platform for studying emotion decoding in visual media without obtrusive physiological sensors.

4.2. Emotion analysis techniques: facial, behavioral, and contextual cues

Emotion analysis follows a structured, multi-stage pipeline.

Step 1: Data acquisition. Viewer-facing video streams and interaction logs are aligned with the timestamps of the visual stimulus.

Step 2: Facial cue processing. Face detection and alignment are performed, followed by extraction of facial action units and expression intensities.

Step 3: Behavioral cue analysis. Interaction patterns such as dwell time, mouse-movement entropy, scrolling velocity, and click latency are calculated.

Step 4: Contextual cue integration. Metadata such as content type, platform context, and exposure duration are encoded.

Step 5: Temporal aggregation. Short-term affect estimates are averaged and smoothed to indicate persistent affective states.

Step 6: Multimodal fusion. Facial, behavioral, and contextual features are combined into a single emotion representation.
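Steps 3 and 5 can be sketched with the standard library alone. The event-log layout (timestamps in seconds, scroll offsets in pixels, mouse positions as (x, y) pairs), the eight direction bins used for movement entropy, and the trailing moving average used for temporal aggregation are assumptions chosen for illustration; the paper does not specify how these quantities are computed.

```python
import math
from collections import Counter
from typing import List, Optional, Sequence, Tuple


def click_latency(stimulus_onset: float, click_times: Sequence[float]) -> Optional[float]:
    """Seconds from stimulus onset to the first click at or after onset (None if no click)."""
    after = [t for t in click_times if t >= stimulus_onset]
    return min(after) - stimulus_onset if after else None


def scroll_velocity(scrolls: Sequence[Tuple[float, float]]) -> float:
    """Mean absolute scroll speed (pixels/second) from (timestamp, scroll_offset) samples."""
    if len(scrolls) < 2:
        return 0.0
    duration = scrolls[-1][0] - scrolls[0][0]
    distance = sum(abs(b[1] - a[1]) for a, b in zip(scrolls, scrolls[1:]))
    return distance / duration if duration > 0 else 0.0


def movement_entropy(points: Sequence[Tuple[float, float]], bins: int = 8) -> float:
    """Shannon entropy (bits) of mouse-movement directions grouped into `bins` sectors."""
    directions = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if (x0, y0) == (x1, y1):
            continue  # ignore zero-length moves
        angle = math.atan2(y1 - y0, x1 - x0) % (2 * math.pi)
        directions.append(int(angle / (2 * math.pi) * bins) % bins)
    if not directions:
        return 0.0
    n = len(directions)
    return -sum((c / n) * math.log2(c / n) for c in Counter(directions).values())


def moving_average(series: Sequence[float], window: int = 5) -> List[float]:
    """Trailing moving average that smooths frame-level affect into a persistent trend."""
    out, running = [], 0.0
    for i, x in enumerate(series):
        running += x
        if i >= window:
            running -= series[i - window]
        out.append(running / min(i + 1, window))
    return out


print(click_latency(10.0, [9.5, 12.0, 15.0]))                    # 2.0
print(scroll_velocity([(0.0, 0.0), (1.0, 100.0), (2.0, 50.0)]))  # 75.0
print(moving_average([1, 2, 3, 4], window=2))                    # [1.0, 1.5, 2.5, 3.5]
```

Low movement entropy indicates a steady, directed pointer path, while high entropy indicates scattered exploration; either pattern can then be fused with facial cues in Step 6.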

4.3. Feature extraction and emotion classification models

Feature extraction converts the multimodal visual, behavioral, and contextual inputs into a structured representation used for emotion classification. Deep CNN embeddings capture expression dynamics in preprocessed facial frames, while behavioral statistics and contextual encodings capture engagement patterns. Together, these combined features support robust, transferable, and semantically rich emotion modeling.

Feature extraction process

Step 1: Preprocess facial frames (normalization, alignment).

Step 2: Extract deep facial embeddings using a pretrained CNN backbone.

Step 3: Compute statistical behavioral descriptors (mean dwell time, variance, entropy).

Step 4: Encode contextual features using one-hot or embedding representations.

Step 5: Normalize and concatenate all features for classification.
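Steps 3 to 5 can be sketched as below, assuming the facial embedding from Step 2 has already been produced by some pretrained backbone and is passed in as a plain list of floats. The platform vocabulary, the use of mean and population variance as descriptors (the entropy descriptor is omitted for brevity), and z-score standardization are illustrative assumptions; the paper does not specify its exact descriptor set or normalization scheme.

```python
import statistics
from typing import List, Sequence


def behavioral_descriptors(dwell_times: Sequence[float]) -> List[float]:
    """Step 3: mean and population variance of a viewer's dwell times (seconds)."""
    return [statistics.fmean(dwell_times), statistics.pvariance(dwell_times)]


def one_hot(value: str, vocabulary: Sequence[str]) -> List[float]:
    """Step 4: one-hot encode a categorical context field such as the platform."""
    if value not in vocabulary:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if value == v else 0.0 for v in vocabulary]


def build_feature_vector(face_embedding: Sequence[float], dwell_times: Sequence[float],
                         platform: str, platforms: Sequence[str]) -> List[float]:
    """Step 5 (part 1): concatenate facial, behavioral, and contextual parts."""
    return list(face_embedding) + behavioral_descriptors(dwell_times) + one_hot(platform, platforms)


def zscore_columns(rows: List[List[float]]) -> List[List[float]]:
    """Step 5 (part 2): standardize each feature column across samples."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) or 1.0 for c in cols]  # constant columns: avoid division by zero
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


PLATFORMS = ["social_feed", "news_site", "gallery_app"]  # hypothetical vocabulary
raw = [
    build_feature_vector([0.1, 0.3], [4.0, 6.0], "social_feed", PLATFORMS),
    build_feature_vector([0.5, 0.2], [10.0, 12.0], "gallery_app", PLATFORMS),
]
print(raw[0])  # [0.1, 0.3, 5.0, 1.0, 1.0, 0.0, 0.0]
X = zscore_columns(raw)
```

Standardizing after concatenation keeps features with large units, such as dwell time in seconds, from dominating the small-valued embedding dimensions.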

4.4. Emotion classification models

1) Convolutional Neural Network (CNN)

A Convolutional Neural Network (CNN) is used as the baseline emotion classification model because of its strong ability to learn spatial patterns in visual data. In emotion recognition, CNNs automatically extract hierarchical features from facial images, such as local textures around the eyes, mouth movements, and muscle activations that are closely related to emotional expressions. Shallow layers learn low- and intermediate-level features, while deeper layers learn abstract affective representations. CNNs are particularly suited to frame-level analysis, in which each image is classified independently into emotion categories or dimensions. They are computationally efficient and accurate, which makes them useful for large-scale visual media analysis, but they are limited in capturing temporal emotional processes across sequential frames.
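To make the idea of learned local patterns concrete, the sketch below implements a single convolutional filter, a ReLU, and global max pooling in plain Python. The toy image and the hand-set horizontal-edge kernel are hypothetical: in a trained CNN the kernel weights are learned from data, and many such filters are stacked in layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """2-D cross-correlation (what CNN layers compute), no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Keep positive filter responses, zero out the rest."""
    return [[max(0.0, x) for x in row] for row in feature_map]


def global_max_pool(feature_map: Matrix) -> float:
    """Strongest response of the filter anywhere in the image."""
    return max(max(row) for row in feature_map)


# Toy 4x4 "face patch": dark upper half, bright lower half.
image = [[0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1], [1, 1, 1, 1]]
# Hand-set kernel that responds to a dark-above, bright-below horizontal edge.
kernel = [[-1, -1], [1, 1]]
fm = conv2d_valid(image, kernel)
print(fm)                         # [[0, 0, 0], [2, 2, 2], [0, 0, 0]]
print(global_max_pool(relu(fm)))  # 2
```

The filter fires only on the row where the edge occurs; pooling then reports that the pattern is present without recording where, which is why a single CNN pass suits frame-level classification.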

2) Temporal Emotion Modelling: CNN-LSTM Hybrid

The CNN-LSTM hybrid model extends static emotion recognition by taking temporal context into account. In this architecture, CNN layers first extract spatial facial features from each video frame, and these features are then passed to a Long Short-Term Memory (LSTM) network. The LSTM models temporal dependencies and emotion transitions over time, allowing the model to identify changing expressions, micro-expressions, and persistent affective states. This approach is especially useful for analyzing viewer responses during ongoing exposure to visual media, since feelings are dynamic and change over time. By modeling both spatial and temporal aspects, CNN-LSTM hybrids predict emotions more successfully and represent real-world emotional dynamics more faithfully.
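The recurrence that lets the LSTM carry information across frames can be shown with a single-unit cell in plain Python, using the standard input, forget, cell, and output gates. The scalar input (one CNN-derived feature per frame, such as a hypothetical smile-intensity score) and the hand-set weights are assumptions for illustration; real models learn vector-valued weights over many CNN features per frame.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


@dataclass
class ScalarLSTM:
    """A one-unit LSTM; each gate has an input weight, a recurrent weight, and a bias."""
    w: Tuple[float, float, float, float]  # input weights for (input, forget, cell, output)
    u: Tuple[float, float, float, float]  # recurrent weights
    b: Tuple[float, float, float, float]  # biases

    def run(self, xs: Sequence[float]) -> List[float]:
        h, c = 0.0, 0.0
        hidden_states = []
        for x in xs:
            pre = [wk * x + uk * h + bk for wk, uk, bk in zip(self.w, self.u, self.b)]
            i_gate = sigmoid(pre[0])   # how much new information to write
            f_gate = sigmoid(pre[1])   # how much of the old cell state to keep
            g = math.tanh(pre[2])      # candidate cell content
            o_gate = sigmoid(pre[3])   # how much of the cell state to expose
            c = f_gate * c + i_gate * g  # cell state carries memory across frames
            h = o_gate * math.tanh(c)    # hidden state summarizes the sequence so far
            hidden_states.append(h)
        return hidden_states


# Forget-gate bias of 1.0 is a common initialization choice.
lstm = ScalarLSTM(w=(1.0, 1.0, 1.0, 1.0), u=(0.5, 0.5, 0.5, 0.5), b=(0.0, 1.0, 0.0, 0.0))
states = lstm.run([0.1, 0.2, 0.8, 0.9])  # hypothetical per-frame feature rising over four frames
print(len(states))  # 4
```

Each hidden state depends on all earlier frames through the cell state, which is what allows the hybrid model to distinguish a brief reaction from a sustained one.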

3) Multimodal Attention-Based Neural Network

The Multimodal Attention-Based Neural Network combines facial, behavioral, and contextual features within a single learning architecture. Attention mechanisms dynamically weight the different modalities and features according to their relevance for predicting emotion at a given time. This enables the model to rely on expressive facial cues, engagement behaviors, or contextual signals when each is most informative. The adaptive weighting improves interpretability and robustness, which is particularly valuable when data are noisy or incomplete. Because they capture cross-modal interactions, multimodal attention models are well suited to complex digital environments and provide a flexible, human-interpretable way of decoding viewer emotion in visual media.
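A minimal sketch of the modality weighting described above, using scaled dot-product scores followed by a softmax. The fixed query vector and the example feature values are hypothetical; in a trained network the query or scoring function is learned and can change from one time step to the next, and the paper does not state which attention variant it uses.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def modality_attention(
    features: Dict[str, Sequence[float]], query: Sequence[float]
) -> Tuple[List[float], Dict[str, float]]:
    """Fuse modality vectors with scaled dot-product attention weights."""
    d = len(query)
    names = list(features)
    for name in names:
        if len(features[name]) != d:
            raise ValueError(f"{name} vector must have dimension {d}")
    scores = [sum(q * x for q, x in zip(query, features[n])) / math.sqrt(d) for n in names]
    weights = softmax(scores)
    fused = [sum(w * features[n][k] for w, n in zip(weights, names)) for k in range(d)]
    return fused, dict(zip(names, weights))


features = {
    "face": [0.9, 0.1],      # hypothetical encoded facial cues
    "behavior": [0.2, 0.2],  # hypothetical encoded engagement cues
    "context": [0.0, 0.5],   # hypothetical encoded context
}
fused, weights = modality_attention(features, query=[1.0, 0.0])
print(max(weights, key=weights.get))  # face
```

The returned weights are what gives attention models their interpretability: they show which modality drove a given prediction.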

Input: Visual_Features, Behavioral_Features, Contextual_Features

Output: Emotion_Output (class probabilities over valence, arousal, and dominance categories)

Begin

Normalize all feature sets

F_face ← CNN_Encoder(Visual_Features)

F_behav ← Dense_Encoder(Behavioral_Features)

F_context ← Dense_Encoder(Contextual_Features)

F_combined ← Concatenate(F_face, F_behav, F_context)

If Model = CNN then

Emotion_Output ← Softmax(Dense(F_combined))

Else if Model = CNN_LSTM then

Temporal_Features ← LSTM(F_combined)

Emotion_Output ← Softmax(Dense(Temporal_Features))

Else if Model = Attention_Model then

Weighted_Features ← Attention(F_combined)

Emotion_Output ← Softmax(Dense(Weighted_Features))

End if

Return Emotion_Output

End
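All three branches of the algorithm end in the same step, `Emotion_Output ← Softmax(Dense(·))`. The sketch below renders that shared head as runnable Python and adds an argmax to pick a label. The class labels, weights, and input features are hypothetical stand-ins for values a trained model would supply.

```python
import math
from typing import List, Sequence, Tuple


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Fully connected layer: one output (logit) per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def emotion_head(features: Sequence[float], weights: Sequence[Sequence[float]],
                 bias: Sequence[float], labels: Sequence[str]) -> Tuple[str, List[float]]:
    """Softmax(Dense(features)), then pick the most probable label."""
    probs = softmax(dense(features, weights, bias))
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs


LABELS = ["negative valence", "neutral", "positive valence"]  # hypothetical classes
W = [[1.0, -1.0, 0.0], [0.0, 0.0, 0.0], [-1.0, 1.0, 0.5]]     # hypothetical trained weights
b = [0.0, 0.0, 0.0]
label, probs = emotion_head([0.1, 0.8, 0.3], W, b, LABELS)
print(label)  # positive valence
```

In the CNN branch the input to this head is `F_combined` directly; in the other two branches it is the LSTM output or the attention-weighted features.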

5. Results and Discussion

5.1. Emotional response patterns across visual media types

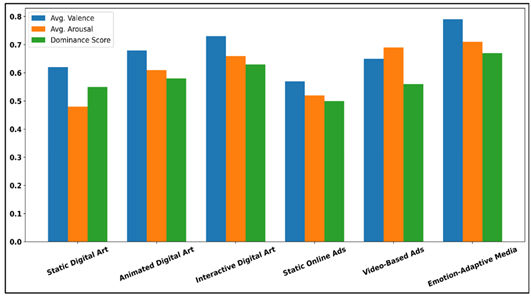

Table 2 shows that viewers' emotional reactions differ markedly across visual media types. Static digital art and static online advertisements elicit moderate valence and arousal, indicating less intense but stable emotional engagement. By contrast, animated and video-based media clearly raise arousal and peak emotional intensity, confirming the role of motion in drawing attention and stimulating affective arousal. Interactive digital art yields higher dominance and emotional stability than the other non-adaptive formats, suggesting that user agency increases emotional comfort and sustained involvement.

Table 2 Emotional Response Patterns Across Visual Media Types

| Visual Media Type | Avg. Valence | Avg. Arousal | Dominance Score | Emotional Stability (%) | Peak Emotion Intensity (%) | Viewer Comfort Index (%) |
|---|---|---|---|---|---|---|
| Static Digital Art | 0.62 | 0.48 | 0.55 | 71.4 | 64.2 | 78.6 |
| Animated Digital Art | 0.68 | 0.61 | 0.58 | 69.1 | 72.5 | 74.3 |
| Interactive Digital Art | 0.73 | 0.66 | 0.63 | 76.8 | 79.4 | 82.1 |
| Static Online Ads | 0.57 | 0.52 | 0.50 | 65.7 | 66.8 | 70.4 |
| Video-Based Ads | 0.65 | 0.69 | 0.56 | 68.9 | 81.6 | 73.2 |
| Emotion-Adaptive Media | 0.79 | 0.71 | 0.67 | 82.3 | 88.9 | 85.7 |

Figure 2 Comparative Analysis of Emotional Dimensions Across Visual Media Types

In Figure 2, valence, arousal, and dominance generally increase as media move from static formats toward emotion-adaptive formats. Interactive and adaptive formats are more emotionally engaging, indicating that dynamism and personalization both increase affective intensity and strengthen the viewer's perceived control in visual media experiences. Emotion-adaptive media outperform all other media types on valence, arousal, dominance, and comfort, as reactions to them are richer, more immersive, and more personal. These results suggest that emotional richness grows as visual media become more interactive and adaptive. The findings support the hypothesis that emotionally sensitive systems can better match visual scenes to viewers' affective responses and can therefore better serve experiential digital art and high-end visual communication systems.
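The claim that emotion-adaptive media lead on every dimension can be checked directly against Table 2. The sketch below transcribes the table's values and reports, for each column, the leading media type and its margin over the runner-up; the dictionary layout and function names are illustrative choices.

```python
from typing import Dict, List

# Values transcribed from Table 2.
COLUMNS = ["valence", "arousal", "dominance", "stability", "peak_intensity", "comfort"]
TABLE2: Dict[str, List[float]] = {
    "Static Digital Art":      [0.62, 0.48, 0.55, 71.4, 64.2, 78.6],
    "Animated Digital Art":    [0.68, 0.61, 0.58, 69.1, 72.5, 74.3],
    "Interactive Digital Art": [0.73, 0.66, 0.63, 76.8, 79.4, 82.1],
    "Static Online Ads":       [0.57, 0.52, 0.50, 65.7, 66.8, 70.4],
    "Video-Based Ads":         [0.65, 0.69, 0.56, 68.9, 81.6, 73.2],
    "Emotion-Adaptive Media":  [0.79, 0.71, 0.67, 82.3, 88.9, 85.7],
}


def leader_per_column(table: Dict[str, List[float]], columns: List[str]) -> Dict[str, str]:
    """Media type with the highest value in each column."""
    return {
        col: max(table, key=lambda name: table[name][idx])
        for idx, col in enumerate(columns)
    }


def runner_up_margin(table: Dict[str, List[float]], columns: List[str]) -> Dict[str, float]:
    """Gap between the best and second-best value in each column."""
    margins = {}
    for idx, col in enumerate(columns):
        ranked = sorted((row[idx] for row in table.values()), reverse=True)
        margins[col] = round(ranked[0] - ranked[1], 2)
    return margins


leaders = leader_per_column(TABLE2, COLUMNS)
print(set(leaders.values()))                         # {'Emotion-Adaptive Media'}
print(runner_up_margin(TABLE2, COLUMNS)["arousal"])  # 0.02
```

On these values emotion-adaptive media lead every column, but the arousal margin over video-based ads is only 0.02, so the advantage on that dimension is small.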

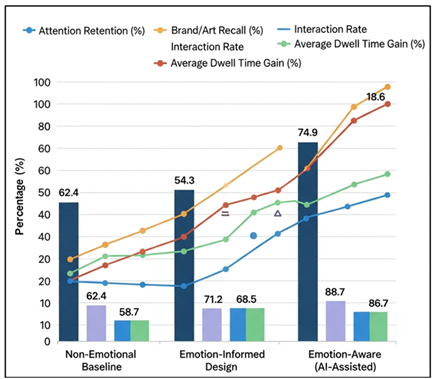

5.2. Impact of emotion-aware visuals on attention, recall, and engagement

Table 3 shows substantial performance benefits from emotion-aware visual strategies. The non-emotional baseline shows low attention retention and recall, reflecting the limitations of traditional visual design techniques. Designs informed by general affective principles show clear gains on all metrics, indicating that even partial consideration of emotion improves viewer response. The largest improvements appear with AI-assisted emotion-aware visuals, where attention retention, recall, and interaction rate rise sharply and average dwell time increases by 32.8%.

Table 3 Impact of Emotion-Aware Visuals on Viewer Performance

| Visual Strategy | Attention Retention (%) | Brand/Art Recall (%) | Interaction Rate (%) | Average Dwell Time Gain (%) |
|---|---|---|---|---|
| Non-Emotional Baseline | 62.4 | 58.7 | 54.3 | 0 |
| Emotion-Informed Design | 74.9 | 71.2 | 68.5 | 18.6 |
| Emotion-Aware (AI-Assisted) | 86.7 | 83.5 | 79.4 | 32.8 |

These findings indicate that decoding and responding to viewer emotions in real time enhances cognitive processing and memory encoding. Emotion-aware images appear to hold attention longer and to promote more interaction, which matters for both digital art experiences and online advertising. Overall, the table supports the practical value of integrating emotion analytics into visual media design to deliver quantifiable engagement outcomes. Figure 3 shows a consistent progression in attention retention, recall, interaction rate, and dwell time from non-emotional to AI-assisted emotion-aware strategies, indicating that emotion-informed visual design substantially improves cognitive engagement and sustained viewer engagement in digital art and advertising.
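Because Table 3 reports levels rather than gains (except for dwell time), it helps to separate absolute gains in percentage points from relative gains over the baseline. The sketch below computes both from the table's values; the function and variable names are illustrative.

```python
from typing import Dict, List, Tuple

BASELINE = "Non-Emotional Baseline"
METRICS = ["attention_retention", "recall", "interaction_rate"]
# Values transcribed from Table 3 (dwell-time gain omitted, since it is already a gain).
TABLE3: Dict[str, List[float]] = {
    "Non-Emotional Baseline":      [62.4, 58.7, 54.3],
    "Emotion-Informed Design":     [74.9, 71.2, 68.5],
    "Emotion-Aware (AI-Assisted)": [86.7, 83.5, 79.4],
}


def gains_vs_baseline(
    table: Dict[str, List[float]], baseline: str, metrics: List[str]
) -> Dict[str, Dict[str, Tuple[float, float]]]:
    """(percentage-point gain, relative gain in %) of each strategy over the baseline row."""
    base = table[baseline]
    result = {}
    for strategy, values in table.items():
        if strategy == baseline:
            continue
        result[strategy] = {
            m: (round(v - b, 1), round(100 * (v - b) / b, 1))
            for m, v, b in zip(metrics, values, base)
        }
    return result


g = gains_vs_baseline(TABLE3, BASELINE, METRICS)
print(g["Emotion-Aware (AI-Assisted)"]["attention_retention"])  # (24.3, 38.9)
```

For AI-assisted visuals, attention retention rises by 24.3 percentage points, which corresponds to a relative gain of about 38.9% over the baseline; reporting both forms avoids ambiguity about what an "improvement" refers to.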

Figure 3 Integrated Performance Comparison of Visual Strategies on Engagement and Recall Metrics

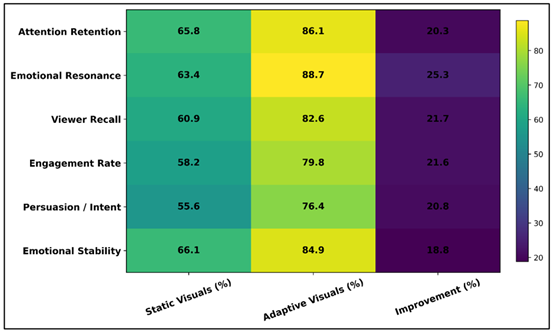

5.3. Comparative analysis of adaptive versus static visual designs

Table 4 directly compares static and adaptive visual designs, showing the benefits of emotional adaptivity. Static imagery scores lower on all metrics of attention, emotional resonance, recall, and persuasion because it cannot respond to the viewer's changing state. Adaptive visuals, in turn, achieve gains of roughly 19 to 25 percentage points across all performance scales, with particularly large improvements in emotional resonance and viewer recall.

Table 4 Performance Comparison of Adaptive Vs. Static Visual Designs

| Performance Metric | Static Visuals (%) | Adaptive Visuals (%) | Improvement (percentage points) |
|---|---|---|---|
| Attention Retention | 65.8 | 86.1 | 20.3 |
| Emotional Resonance | 63.4 | 88.7 | 25.3 |
| Viewer Recall | 60.9 | 82.6 | 21.7 |
| Engagement Rate | 58.2 | 79.8 | 21.6 |
| Persuasion / Intent | 55.6 | 76.4 | 20.8 |
| Emotional Stability | 66.1 | 84.9 | 18.8 |

This implies that personalization and real-time emotional congruence substantially improve both affective and behavioral responses. The rise in emotional stability also indicates that adaptive systems maintain viewer comfort while sustaining engagement. These findings have significant implications for digital art and advertising, since they show that adaptive visuals not only capture attention but also create substantial emotional resonance. Overall, the findings point toward a shift from traditional content delivery systems to intelligent, emotion-sensitive visual systems.
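The Improvement column of Table 4 equals the adaptive score minus the static score, so it is measured in percentage points rather than as a relative percentage. The sketch below, using the table's values, confirms that reading row by row and computes the mean gain; the names are illustrative.

```python
from typing import Dict, Tuple

# Values transcribed from Table 4: metric -> (static %, adaptive %, reported improvement).
TABLE4: Dict[str, Tuple[float, float, float]] = {
    "Attention Retention": (65.8, 86.1, 20.3),
    "Emotional Resonance": (63.4, 88.7, 25.3),
    "Viewer Recall":       (60.9, 82.6, 21.7),
    "Engagement Rate":     (58.2, 79.8, 21.6),
    "Persuasion / Intent": (55.6, 76.4, 20.8),
    "Emotional Stability": (66.1, 84.9, 18.8),
}


def check_improvements(table: Dict[str, Tuple[float, float, float]],
                       tol: float = 0.05) -> Dict[str, Tuple[float, float]]:
    """Return rows whose reported improvement differs from adaptive minus static."""
    mismatches = {}
    for metric, (static, adaptive, reported) in table.items():
        diff = round(adaptive - static, 1)
        if abs(diff - reported) > tol:
            mismatches[metric] = (diff, reported)
    return mismatches


def mean_improvement(table: Dict[str, Tuple[float, float, float]]) -> float:
    """Average percentage-point gain of adaptive over static visuals."""
    gains = [adaptive - static for static, adaptive, _ in table.values()]
    return sum(gains) / len(gains)


print(check_improvements(TABLE4))          # {}
print(round(mean_improvement(TABLE4), 2))  # 21.42
```

On these values every reported improvement matches adaptive minus static, and the mean gain across the six metrics is about 21.4 percentage points.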

Figure 4 Heatmap Comparison of Performance Gains Between Static and Adaptive Visual Designs

The heatmap in Figure 4 shows the performance advantage of adaptive visuals over static designs on every metric assessed. The greater color intensity for adaptive visuals reflects higher attention, emotional resonance, recall, and engagement, while the improvement values show consistent gains, especially for emotional resonance and viewer recall.

6. Conclusion

The paper investigated visual media perception of viewer emotions as a neuromarketing perspective, incorporating theoretical background, emotion analytics with AI, and empirical appraisals in digital art, and online visual advertising. The results always support the argument that emotional reaction- as expressed in terms of dimension of valence, arousal and dominance- centralizes the focus on attention, recall, engagement and persuasiveness. Comparative findings have shown that interactive and emotion-adjustive visual designs perform much better than the system of the still design where they have a greater emotional appeal, stronger viewer control, and enhanced emotional stability. These results confirm the utility of emotion sensitive models in matching visual cues with unconscious reactions of viewers and as a result promote aesthetics and effectiveness of communication. The methodological paradigm, integrating facial and behavioral, as well as contextual stimuli with complex classification frameworks, was found to be able to capture finer affective dynamics that otherwise cannot be detected with self-report and surface engagement measures. Empirical findings revealed significant changes in the percentage of attention retention, recollection, and interactions rate with better levels of emotion-conscious strategies, which proved their applicability to the real world in terms of digital media. Notably, the comparative analysis of adaptive and static visuals identifies personalization and emotional responsiveness as important factors of long term engagement and persuasion. In a more general sense, the implications are not limited to advertising performance but to the art of digital art curation where the interpretation of emotion can inform the design of an exhibition, the pace of the storyline and the interactive experiences that engage the audience more. 
At the same time, the study highlights the importance of ethical disclosure, cultural competence, and interpretability when applying emotion analytics. Overall, this work provides an interdisciplinary framework for emotionally intelligent visual media research and contributes to the future development of responsible neuromarketing, adaptive design, and AI-driven visual communication systems.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Adedoyin, O. B., and Soykan, E. (2023). COVID-19 Pandemic and Online Learning: The Challenges and Opportunities. Interactive Learning Environments, 31(2), 863–875. https://doi.org/10.1080/10494820.2020.1813180

Alsharif, A. H., Salleh, N. Z. M., Al-Zahrani, S. A., and Khraiwish, A. (2022). Consumer Behaviour to be Considered in Advertising: A Systematic Analysis and Future Agenda. Behavioral Sciences, 12(12), 472. https://doi.org/10.3390/bs12120472

Antonov, A., Kumar, S. S., Wei, J., Headley, W., Wood, O., and Montana, G. (2024). Decoding Viewer Emotions in Video Ads. Scientific Reports, 14(1), 26382. https://doi.org/10.1038/s41598-024-76968-9

Joseph, C., and Maheswari, P. U. (2025). Facial Emotion Based Smartphone Addiction Detection and Prevention Using Deep Learning and Video-Based Learning. Scientific Reports, 15(1), 18025. https://doi.org/10.1038/s41598-025-99681-7

Li, H., Chen, Q., Zhong, Z., Gong, R., and Han, G. (2022). E-Word of Mouth Sentiment Analysis for User Behavior Studies. Information Processing and Management, 59(1), 102784. https://doi.org/10.1016/j.ipm.2021.102784

Mancini, M., Cherubino, P., Martinez, A., Vozzi, A., Menicocci, S., Ferrara, S., Giorgi, A., Aricò, P., Trettel, A., and Babiloni, F. (2023). What is Behind In-Stream Advertising on YouTube? A Remote Neuromarketing Study Employing Eye-Tracking and Facial Coding Techniques. Brain Sciences, 13(10), 1481. https://doi.org/10.3390/brainsci13101481

Nilashi, M., Samad, S., Ahmadi, N., Ahani, A., Abumalloh, R. A., Asadi, R., Abdullah, R., Ibrahim, O., and Yadegaridekkordi, E. (2020). Neuromarketing: A Review of Research and Implications for Marketing. Journal of Soft Computing and Decision Support Systems, 7(1), 23–31.

Panić, D., Mitrović, M., and Ćirović, N. (2023). Early Maladaptive Schemas and the Accuracy of Facial Emotion Recognition: A Preliminary Investigation. Psychological Reports, 126(4), 1585–1604. https://doi.org/10.1177/00332941221075248

Qureshi, M. I., Khan, N., Qayyum, S., Malik, S., Hishan, S. S., and Ramayah, T. (2020). Classifications of Sustainable Manufacturing Practices in ASEAN Region: A Systematic Review and Bibliometric Analysis of the Past Decade of Research. Sustainability, 12(21), 8950. https://doi.org/10.3390/su12218950

Sánchez-Fernández, J., Casado-Aranda, L.-A., and Bastidas-Manzano, A.-B. (2021). Consumer Neuroscience Techniques in Advertising Research: A Bibliometric Citation Analysis. Sustainability, 13(3), 1589. https://doi.org/10.3390/su13031589

Schröter, I., Grillo, N. R., Limpak, M. K., Mestiri, B., Osthold, B., Sebti, F., and Mergenthaler, M. (2021). Webcam Eye Tracking for Monitoring Visual Attention in Hypothetical Online Shopping Tasks. Applied Sciences, 11(19), 9281. https://doi.org/10.3390/app11199281

Šola, H. M., Mikac, M., and Rončević, I. (2022). Tracking Unconscious Response to Visual Stimuli to Better Understand a Pattern of Human Behavior on a Facebook Page. Journal of Innovation and Knowledge, 7(2), 100166. https://doi.org/10.1016/j.jik.2022.100166

Šola, H. M., Qureshi, F. H., and Khawaja, S. (2024). Exploring the Untapped Potential of Neuromarketing in Online Learning: Implications and Challenges for the Higher Education Sector in Europe. Behavioral Sciences, 14(2), 80. https://doi.org/10.3390/bs14020080

Talala, S., Shvimmer, S., Simhon, R., Gilead, M., and Yitzhaky, Y. (2024). Emotion Classification Based on Pulsatile Images Extracted from Short Facial Videos via Deep Learning. Sensors, 24(8), 2620. https://doi.org/10.3390/s24082620

Xia, H., Yang, Y., Pan, X., Zhang, Z., and An, W. (2020). Sentiment Analysis for Online Reviews Using Conditional Random Fields and Support Vector Machines. Electronic Commerce Research, 20, 343–360. https://doi.org/10.1007/s10660-019-09354-7

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.