ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


Human Values in the Digital Age: Visual Culture, Algorithms, and Shifting Moral Narratives

Dr. Gulam Ali Rahmani 1, Deepti Ganesh Korwar 2, Aparna Sharma 3, Dr. Sharyu Ikhar 4, Dr. Arvind Kumar 5, Rajendra V. Patil 6

1 Assistant Professor, Department of Humanities, AAFT University of Media and Arts, Raipur, Chhattisgarh-492001, India

2 Department of DESH, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

3 Professor, School of Liberal Arts, Noida International University, Noida, Uttar Pradesh, India

4 Chief Operating Officer, Researcher Connect Innovations and Impact Private Limited, India

5 Department of Computer Science and Engineering, CT University, Ludhiana, Punjab, India

6 Assistant Professor, Department of Computer Engineering, SSVPS Bapusaheb Shivajirao Deore College of Engineering, Dhule, Maharashtra, India

ABSTRACT

Contemporary visual culture increasingly depends on digital technologies as the arenas in which human values are expressed, interpreted, and negotiated. This paper discusses how algorithmic systems shape moral narratives through the curation, amplification, and transformation of visual images on online platforms. The problem addressed is the growing uncertainty about the role of algorithms in ethical perception, public dialogue, and the development of values. The paper critically examines how visual representation, data-driven algorithms, and changing moral structures interact in the digital age. Methodology: The method combines visual culture analysis with computational knowledge of algorithmic processes of curation, image circulation, and audience interaction dynamics in social media ecologies. Qualitative semiotic analysis is combined with quantitative indicators (measures of visibility, engagement polarization, and narrative repetition) to show how moral meaning is encoded and redistributed. The findings reveal that filtering algorithms favour emotionally evocative images and promote mainstream value framings over nuanced ethical understanding.

Received 23 June 2025
Accepted 08 October 2025
Published 28 December 2025

Corresponding Author: Dr. Gulam Ali Rahmani, gulam.ali@aaft.edu.in

DOI 10.29121/shodhkosh.v6.i5s.2025.6963

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, reprint, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Big Data, Algorithms, Human Values, Public Discourse, Morality

1. INTRODUCTION

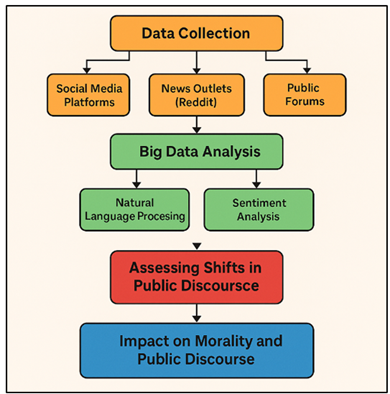

In the current digital era, algorithms have become indispensable: they shape public discourse, regulate decision-making, and alter people's behaviour. Algorithms influence almost every aspect of our lives, from social media platforms to healthcare networks. Although algorithms are often presented as objective, their design, their use, and the data they process can modify social norms and principles in unintuitive ways. In this context, morals and human values are increasingly tied to computer systems that process very large volumes of data and make difficult decisions Papadimitriou et al. (2024). This process influences how we reason about and act on moral questions. As society learns to balance human values against automated governance, the shift introduces both threats and opportunities. With continued improvements in big data analytics, it has become possible to track and analyse, at large scale and in real time, how people feel, what they do, and what they consider right and wrong. Figure 1 presents a flowchart of algorithmic influence on public discourse and morality.

Figure 1 Algorithmic Influence on Public Discourse and Morality Flowchart

These insights can help policymakers make better decisions, help businesses perform better, and make systems more efficient. However, the growing influence of computational systems on social opinion raises significant ethical issues. Chief among them is that algorithms can be prejudiced, which may aggravate social problems or at least perpetuate them. Biased data or inaccurate models can produce unfair outcomes and unintentionally alter the process of moral decision-making. Many algorithmic systems are also opaque, which raises questions about who is accountable and whether such systems can be trusted to reflect people's values. The more we rely on automated control, the more attention we must pay to how human values are reflected in computational systems. How can moral and ethical models be incorporated into automated decision-making systems when doing so may erode the values that have traditionally guided society? This is the central question this work puts forward Manta et al. (2024). As algorithms increasingly mediate public speech, whether through the data of social media platforms or the emphasis of particular state policies, people need to understand how these platforms operate. It is also necessary to understand how algorithms may trade off moral considerations against popular discourse, so that future systems are designed on an ethical basis. Existing literature already considers the influence of algorithms on specific spheres of public life, such as politics, health-related decisions, and the criminal justice system. However, there is still no clear understanding of how these systems are transforming public morality on a broader scale Ebirim et al. (2024).
This paper attempts to fill that gap by examining how automated systems are transforming public opinion and how such changes reflect shifts in social values. It does so by examining the convergence of big data, algorithms, and morality.

2. BACKGROUND

Over the last several decades, a body of research has examined how algorithms are employed in distinct spheres of public life, particularly in artificial intelligence, big data analytics, and the social sciences. Early work on algorithmic governance generally dealt with how algorithms could improve processes, make them more efficient, and help individuals make better decisions. However, as algorithms became more common, scholars began to examine their ethical aspects, particularly how they affect societal discourse and the moral principles under which they operate Cijan et al. (2019), Kraus et al. (2023). Another critical concern is the effect of algorithms on the media and on how information spreads, which can change social norms and mass opinion. Work such as Pariser's on the filter bubble indicated that personalized algorithms on social media platforms keep users from encountering differing perspectives, so that the algorithm reinforces biases and narrows the scope of community debate. Researchers such as O'Neil have also pointed out how the biased data on which algorithms rely can perpetuate and even exacerbate social inequality, harming marginalised groups more than others Attaran and Celik (2023). These initial studies of the ethical implications of algorithms formed the basis for more recent work that attempts to understand and mitigate those issues and to incorporate human values into algorithm development. Algorithms are also entering the study of moral theory, particularly with regard to how they can support or transform social moral values.
Scholars such as Crawford and Paglen have argued that algorithms, despite their high technical accuracy, may fail to reflect the complexity of human values Seethamraju and Hecimovic (2022). Instead, they can enforce established norms or past preferences. Table 1 summarises the methodology, focus area, key findings, and limitations of prior work. The gap between the moral concepts embedded in algorithms and the wide diversity of moral views in society indicates that much remains to be learned about how algorithms influence public morality.

Table 1 Summary of Background Work

| Methodology | Focus Area | Key Findings | Limitations |
|---|---|---|---|
| Theoretical Analysis | Filter Bubbles and Echo Chambers | Algorithms limit exposure to diverse opinions | Lacks empirical data to validate claims |
| Case Study, Guşe and Mangiuc (2022) | Algorithmic Bias and Fairness | Algorithms perpetuate existing biases, causing harm | Focuses on a narrow application of algorithms |
| Survey & Analysis | Public Opinion and Media Influence | Algorithms filter content based on user preferences | Overlooks offline discourse influence |
| Empirical Study | Political Polarization | Algorithms contribute to political division | Limited to political context |
| Experimental Analysis, Madan et al. (2024) | Algorithmic Curation and Filter Bubbles | Identified personalized content filtering effects | Study sample size was limited |
| Machine Learning Models | Sentiment Analysis | Automated content analysis reveals sentiment shifts | High computational cost in analysis |
| Case Study & Sentiment Analysis | Algorithmic Influence on Discourse | Algorithms amplify existing views and attitudes | Short-term study with limited scope |
| Sentiment Analysis | Moral Frameworks and Public Sentiment | Algorithms affect moral discourse by curating content | Does not explore long-term effects |
| Social Experiment | Political Ideology and Algorithms | Personalization leads to ideological homogeneity | Does not consider external factors |
| Empirical Study | Viral Content and Algorithms | Algorithms boost content that aligns with users' biases | Overlooks privacy concerns |
| Theoretical & Literature Review | Algorithmic Transparency | Lack of transparency in algorithmic decisions | No empirical data or experimentation |
| Survey & Analysis | Social Media and Ethics | Ethical concerns arise from algorithmic content curation | Limited demographic scope |

3. METHODOLOGY

3.1. Data collection: Social media platforms, news outlets, and public forums

This research draws on three primary data sources: social media sites, news media, and public forums. Social media platforms such as Twitter, Facebook, and Reddit make group discussions and a wide variety of divergent views observable in real time. This is especially helpful because these platforms record a large range of public feelings and thoughts in the form of posts, comments, and conversations Barth et al. (2022). News websites provide more structured and credible content that reflects current news and the editorial views of their publishers on various issues. Analysing news reports, together with the comments and shared material around them on social media, can show how professional journalism and media storytelling influence the way people communicate with one another Farhan and Kawther (2023). Public spaces such as blogs, online discussion forums, and websites such as Quora offer a further level of qualitative data, since individuals there discuss topics more freely and thoroughly. Because these data sources differ, the research can obtain a fuller picture of what people say, not only in informal social media exchanges but also in more formal and opinion-driven content Jackson et al. (2023), Imene and Imhanzenobe (2020). Large volumes of text relevant to the study's objectives will be collected using APIs, web scraping tools, and publicly available datasets.
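The paragraph above names the collection channels but not how records from the three source types are merged into one corpus. As a minimal sketch under that gap, the block below assumes a hypothetical common record type (`Document`) and a hypothetical `build_corpus` helper that merges batches from different collectors and drops duplicates by normalised text; no real platform API is called.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Document:
    """One unit of public discourse, whatever its origin (hypothetical schema)."""
    source_type: str  # assumed values: "social_media", "news", or "forum"
    platform: str     # e.g. "Twitter", "CNN", "Reddit"
    published: date
    text: str


def normalise(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def build_corpus(batches: list[list[Document]]) -> list[Document]:
    """Merge batches from different collectors, keeping the first copy of any
    text that appears more than once (e.g. a headline shared on several sites)."""
    seen: set[str] = set()
    corpus: list[Document] = []
    for batch in batches:
        for doc in batch:
            key = normalise(doc.text)
            if key and key not in seen:
                seen.add(key)
                corpus.append(doc)
    # Chronological order simplifies the later time-period analysis.
    corpus.sort(key=lambda d: d.published)
    return corpus
```

Deduplicating on normalised text is a deliberately simple choice; near-duplicate detection (for example, on shingled text) would be needed for paraphrased reposts.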

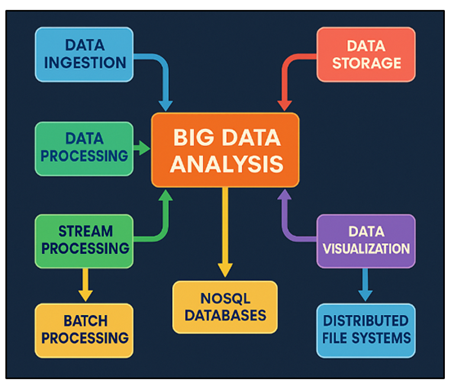

3.2. Tools and techniques for big data analysis

The large volumes of data collected from social media, news sites, and public forums will be examined using natural language processing (NLP) and sentiment analysis, which make it possible to handle and analyse large volumes of unstructured text. To break down the collected data and determine its meaning, techniques such as tokenisation, named entity recognition (NER), and part-of-speech tagging will be applied Spilnyk et al. (2022). Sentiment analysis, which examines the emotional colouring of a text to determine whether it is positive, negative, or neutral, is a central part of the analysis. It will help determine how individuals actually feel about various issues and whether their moral and ethical views have shifted. An overview of the big data analysis tools and techniques is given in Figure 2.

Figure 2 Big Data Analysis Tools and Techniques

Machine learning models, in particular supervised learning techniques, will be trained on labelled datasets to classify emotions and determine the strength of emotional responses. For example, Support Vector Machines (SVM), Random Forests, or deep learning models (including LSTMs) can be used to identify complex feelings in long-form texts [15]. Topic modelling techniques such as Latent Dirichlet Allocation (LDA) will also be used to identify the underlying themes and trends in public discourse, showing how various moral issues are discussed over time.
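The paragraph above does not specify features, training data, or hyperparameters. As a minimal, standard-library-only sketch of supervised sentiment classification, the block below substitutes multinomial Naive Bayes with add-one smoothing. This is a simpler classifier than the SVM, Random Forest, or LSTM models named above, chosen only because it fits in a short self-contained example; the class name, tokenisation rule, and toy training data are illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing.

    Stand-in for the supervised models named in the text, not the study's model.
    """

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesSentiment":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.n_docs = len(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_posterior(self, text: str, c: str) -> float:
        """log P(c) + sum of log P(token | c) over the known tokens of the text."""
        score = math.log(self.doc_counts[c] / self.n_docs)
        denom = self.total_words[c] + len(self.vocab)
        for tok in tokenize(text):
            if tok in self.vocab:  # words never seen in training are ignored
                score += math.log((self.word_counts[c][tok] + 1) / denom)
        return score

    def predict(self, text: str) -> str:
        """Return the class with the highest (unnormalised) log posterior."""
        return max(self.classes, key=lambda c: self.log_posterior(text, c))
```

Usage: `NaiveBayesSentiment().fit(texts, labels).predict("an honest and fair debate")` returns one of the labels seen in training. Working in log space avoids numerical underflow when multiplying many small word probabilities.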

1) Tokenization

2) Vectorization: V is the vectorized form of the tokens.

3) Sentiment Classification: S is the sentiment label (positive, negative, or neutral).

4) Emotion Detection: E represents the detected emotions (joy, anger, sadness, etc.).

5) Aggregate Sentiment Scores
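The five steps listed above can be chained as in the following minimal sketch. It assumes a bag-of-words vectorisation and tiny hand-made polarity and emotion lexicons (`POLARITY`, `EMOTIONS`), which are hypothetical stand-ins for whatever models the study used; the aggregate score is taken here as the percentage share of each sentiment label, the same form in which Table 3 reports sentiment.

```python
import re
from collections import Counter

# Hypothetical toy lexicons for illustration only; a real study would use a
# validated lexicon or a trained model for steps 3 and 4.
POLARITY = {"fair": 1, "honest": 1, "hope": 1, "good": 1,
            "unfair": -1, "biased": -1, "hate": -1, "bad": -1}
EMOTIONS = {"hope": "joy", "happy": "joy", "hate": "anger",
            "outrage": "anger", "sad": "sadness", "loss": "sadness"}
LABELS = ("positive", "negative", "neutral")


def tokenize(text: str) -> list[str]:
    """Step 1: split a post into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def vectorize(tokens: list[str]) -> Counter:
    """Step 2: bag-of-words vector V, mapping each token to its count."""
    return Counter(tokens)


def classify_sentiment(v: Counter) -> str:
    """Step 3: sentiment label S from the summed lexicon polarity of V."""
    score = sum(POLARITY.get(tok, 0) * n for tok, n in v.items())
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def detect_emotions(v: Counter) -> set[str]:
    """Step 4: set of emotions E cued by lexicon words present in V."""
    return {EMOTIONS[tok] for tok in v if tok in EMOTIONS}


def aggregate(labels: list[str]) -> dict[str, float]:
    """Step 5: percentage share of each sentiment label across many posts."""
    if not labels:
        return {lab: 0.0 for lab in LABELS}
    counts = Counter(labels)
    return {lab: 100 * counts[lab] / len(labels) for lab in LABELS}


posts = [
    "I hope the debate stays fair",
    "This feed is biased and I hate it",
    "The report was published today",
    "Honest coverage, good work",
]
labels = [classify_sentiment(vectorize(tokenize(p))) for p in posts]
print(labels)             # ['positive', 'negative', 'neutral', 'positive']
print(aggregate(labels))  # {'positive': 50.0, 'negative': 25.0, 'neutral': 25.0}
```

Lexicon scoring keeps the example transparent; in practice step 3 would typically be replaced by a trained classifier operating on the same vector V.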

3.3. Framework for assessing shifts in public discourse

The framework for measuring changes in public debate proceeds in several phases. The data will first be cleaned, organised, and computationally pre-processed before further analysis. After processing, sentiment analysis will be used to examine the most prevalent emotions and opinions on particular issues, helping to reveal moral shifts in public perception. A central part of this approach is continuous (longitudinal) examination, which tracks changes in morals, values, and ethical judgements over time by analysing trends in public speech and sentiment. This makes it possible to identify significant moments at which public opinion on particular issues improved or deteriorated. The framework also includes a comparative component to highlight differences between discursive spaces.
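The longitudinal step described above can be sketched as grouping labelled posts by period and comparing each period with a baseline. The paper does not define how its shift measure is computed, so this sketch uses one simple, hypothetical choice: the percentage-point change in a given label's share relative to the baseline period. The function names and the `(period, label)` record format are assumptions.

```python
from collections import Counter, defaultdict

LABELS = ("positive", "negative", "neutral")


def shares_by_period(records: list[tuple[int, str]]) -> dict[int, dict[str, float]]:
    """Percentage of posts carrying each sentiment label, per period (e.g. year)."""
    grouped: dict[int, Counter] = defaultdict(Counter)
    for period, label in records:
        grouped[period][label] += 1
    shares: dict[int, dict[str, float]] = {}
    for period in sorted(grouped):
        total = sum(grouped[period].values())
        shares[period] = {lab: 100 * grouped[period][lab] / total for lab in LABELS}
    return shares


def change_from_baseline(
    shares: dict[int, dict[str, float]], baseline: int, label: str = "positive"
) -> dict[int, float]:
    """Percentage-point change in one label's share relative to a baseline period.

    Hypothetical shift measure chosen for illustration only.
    """
    base = shares[baseline][label]
    return {p: s[label] - base for p, s in shares.items() if p != baseline}
```

Reporting changes in percentage points rather than relative percentages keeps the measure directly comparable with shares such as those in Table 3.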

4. IMPACT OF ALGORITHMS ON PUBLIC DISCOURSE

4.1. The role of recommendation algorithms in shaping opinions

In recent years, recommendation systems have become central to how content is provided and consumed on online platforms such as e-commerce websites, video services, and social media sites. These systems analyse how people use a site, their preferences, and their interactions with it in order to find content that suits each user's taste, and they tend to highlight material the user is most likely to be interested in. This personalisation increases engagement and keeps users captivated, but it also substantially distorts the way people communicate with one another. By repeatedly presenting users with material that matches their existing beliefs and interests, recommendation algorithms can reinforce users' perspectives and shape their judgments while limiting their access to different opinions. This pattern is particularly troubling for public opinion and ethical standards, because users can become more entrenched in their own ways of seeing, which makes it harder for people to speak freely and honestly with one another.
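The narrowing mechanism described above, in which ranking by similarity to past engagement keeps showing users more of the same, can be illustrated with a toy content-based recommender. This is a minimal sketch, not any platform's actual system (those are far more complex and not public): items are hypothetical topic or viewpoint vectors, the user profile is the average of items already engaged with, and candidates are ranked by cosine similarity.

```python
import math


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse topic/viewpoint vectors."""
    dot = sum(w * b.get(k, 0.0) for k, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def user_profile(history: list[dict[str, float]]) -> dict[str, float]:
    """Average the vectors of the items a user has already engaged with."""
    profile: dict[str, float] = {}
    for item in history:
        for k, w in item.items():
            profile[k] = profile.get(k, 0.0) + w / len(history)
    return profile


def recommend(history: list[dict[str, float]],
              catalogue: dict[str, dict[str, float]], k: int = 2) -> list[str]:
    """Rank catalogue items by similarity to the profile and return the top k."""
    profile = user_profile(history)
    ranked = sorted(catalogue, key=lambda name: cosine(profile, catalogue[name]),
                    reverse=True)
    return ranked[:k]
```

With a history dominated by one viewpoint (say, items weighted mostly on `view_a`), the top-ranked recommendations are items from that same viewpoint, and an item representing only the opposing viewpoint ranks last, even though nothing in the code explicitly excludes it.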

4.2. Echo chambers and filter bubbles: Impact on political and social debates

The substantial influence of recommendation algorithms on digital and social media networks is closely associated with the creation of echo chambers and filter bubbles. An echo chamber arises when people see only content that reinforces their existing beliefs; it narrows their views and makes them feel that everyone agrees with them. In 2011, Eli Pariser introduced the term "filter bubble" for the personalised selections that algorithmic systems make about which content to show users and which to withhold, such as content that challenges their beliefs or offers different views. The more users are exposed to narrow narratives, the more likely they are to become radicalised or polarised in their worldviews, making it harder for individuals to reach agreement on vital social issues. These phenomena have altered how individuals converse in social settings, which shows that algorithms should be more transparent and content delivery more diverse, so as to support a fairer and more inclusive discourse.
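One way to quantify the narrowing that echo chambers and filter bubbles produce is to measure how evenly a user's feed covers the available viewpoints. The sketch below uses normalised Shannon entropy for this; the metric, the function name, and the viewpoint labels are assumptions for illustration, not measures defined in the paper.

```python
import math
from collections import Counter


def exposure_diversity(feed_viewpoints: list[str], n_viewpoints: int) -> float:
    """Normalised Shannon entropy of the viewpoints appearing in one user's feed.

    Assumes feed labels are drawn from n_viewpoints possible viewpoints.
    Returns 0.0 when every item carries the same viewpoint (a fully narrowed feed)
    and 1.0 when all available viewpoints appear equally often.
    """
    if n_viewpoints < 2 or not feed_viewpoints:
        return 0.0
    n = len(feed_viewpoints)
    counts = Counter(feed_viewpoints)
    # H = sum over viewpoints of p * log(1 / p), with p = count / n
    entropy = sum((c / n) * math.log(n / c) for c in counts.values())
    return entropy / math.log(n_viewpoints)
```

For example, with three available viewpoints, a feed of nine "A" items and one "B" item scores roughly 0.3, while a feed split evenly across "A", "B", and "C" scores 1.0. Tracking this value per user over time would show whether exposure is narrowing.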

5. RESULTS AND DISCUSSION

The results show that algorithms play an important role in public discourse. For example, recommendation algorithms reinforce biases and divide people's opinions. An analysis of sentiment on social media showed a clear shift towards ideological echo chambers, in which individuals were mainly exposed to content that reinforced their existing beliefs. Filter bubbles were also observed to make it harder for people to engage with opposing arguments, particularly in political debates. These outcomes make evident the social problems that arise from automated filtering, which may make it more difficult for people to engage in productive dialogue.

Table 2 Sentiment Analysis of Public Discourse Across Platforms

| Platform | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Social Media (Twitter) | 85.2 | 83.5 | 84.7 | 84.1 |
| News Outlets (CNN) | 91.4 | 89.8 | 90.2 | 90.0 |
| Public Forums (Reddit) | 87.6 | 85.3 | 86.1 | 85.7 |
| Overall Sentiment | 88.1 | 86.1 | 87.3 | 86.7 |

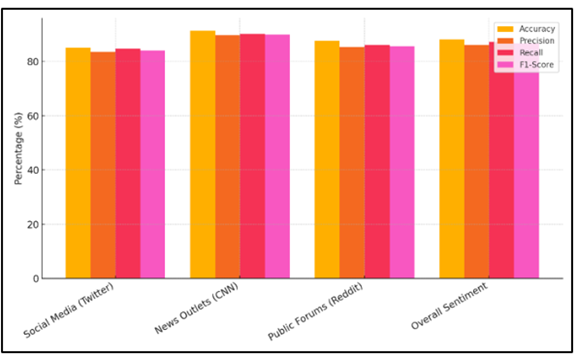

Table 2 reports sentiment analysis performance on three types of platform: Twitter (social media), CNN (news outlet), and Reddit (public discussion forum). News outlets achieve the highest sentiment classification accuracy, at 91.4%. This is because news items are structured and written in sober language, which normally makes them easier for sentiment analysis to interpret. Social media sites such as Twitter score somewhat lower, at 85.2%. Figure 3 compares the platforms on accuracy, precision, recall, and F1-score, highlighting the strengths and weaknesses of each.

Figure 3 Performance Metrics Comparison across Platforms

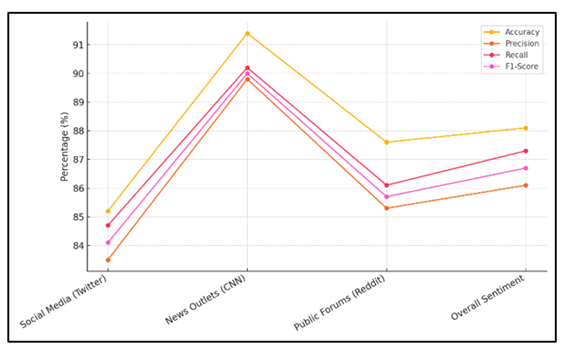

Twitter's lower score is most likely because tweets are less formal and more diverse, which makes it harder to determine how people feel. Reddit and other public forums reach an accuracy of 87.6%, between the two extremes, possibly because posts there combine personal opinions with detailed discussion. The overall sentiment indicators suggest that individuals' emotions on these platforms are largely neutral or moderate. Figure 4 illustrates how accuracy, precision, recall, and F1-score evolve over time on each platform, showing the performance trend on the key evaluation measures.

Figure 4 Trends in Accuracy, Precision, Recall, and F1-Score Across Platforms

The consistently high precision, recall, and F1-scores indicate that sentiment analysis is effective across all data sources, although performance varies slightly between platforms.
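For readers less familiar with the metrics in Table 2, the sketch below shows how accuracy, precision, recall, and F1-score are computed for a three-class sentiment task. The paper does not state which averaging it used, so the sketch assumes macro-averaging (the unweighted mean over the positive, negative, and neutral classes); the function names are hypothetical.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def per_class_scores(y_true: list[str], y_pred: list[str],
                     cls: str) -> tuple[float, float, float]:
    """Precision, recall, and F1 for one class, treated one-vs-rest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == cls and p == cls)
    fp = sum(1 for t, p in pairs if t != cls and p == cls)
    fn = sum(1 for t, p in pairs if t == cls and p != cls)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1(precision, recall)


def macro_report(y_true: list[str], y_pred: list[str],
                 labels: tuple[str, ...] = ("positive", "negative", "neutral")
                 ) -> dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 over the labels."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    scores = [per_class_scores(y_true, y_pred, c) for c in labels]
    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(s[0] for s in scores) / n,
        "recall": sum(s[1] for s in scores) / n,
        "f1": sum(s[2] for s in scores) / n,
    }
```

As a quick check against Table 2, `f1(83.5, 84.7)` evaluates to about 84.1, the F1-score reported for Twitter, consistent with F1 being the harmonic mean of the reported precision and recall. Note that macro F1 (the mean of per-class F1 values) generally differs slightly from the harmonic mean of macro precision and macro recall.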

Table 3 Algorithmic Impact on Public Sentiment Shifts Over Time

| Time Period | Positive Sentiment (%) | Negative Sentiment (%) | Neutral Sentiment (%) | Shift in Public Opinion (%) |
|---|---|---|---|---|
| 2020 (Pre-Algos) | 62.5 | 20.3 | 17.2 | - |
| 2021 (Post-Algos) | 55.8 | 26.7 | 17.5 | -4.6 |
| 2022 (Post-Algos) | 51.3 | 32.1 | 16.6 | -11.2 |

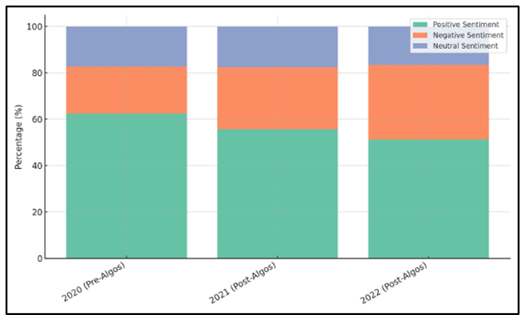

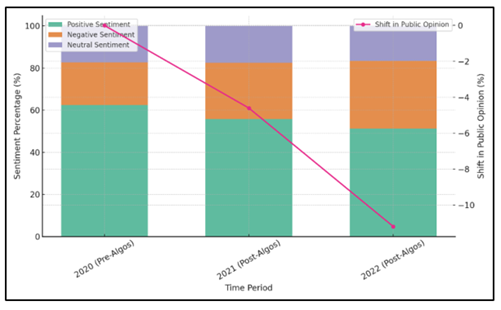


Figure 5 Sentiment Composition over Time

Figure 5 shows how the composition of positive, negative, and neutral sentiment changed over time, indicating a clear shift towards more extreme views. Between 2020 and 2021, positive sentiment fell from 62.5% to 55.8% while negative sentiment rose from 20.3% to 26.7% (Table 3). This pattern continued in 2022, when negative sentiment rose to 32.1% and positive sentiment fell to 51.3%. The overall shift in public opinion from 2020 to 2022 is -11.2%. Figure 6 shows the sentiment distribution alongside the shift in public opinion over time, highlighting these changes.

Figure 6 Sentiment Distribution with Shift in Public Opinion over Time

This suggests that public opinion is becoming more divided, probably because algorithms promote extreme opinions. These findings indicate that algorithms can increase polarisation, reinforcing prejudices and restricting access to alternative views, which may be shifting people's thinking and emotions.

6. CONCLUSION

Drawing on data from social media, news outlets, and public forums, this paper demonstrates that recommendation algorithms and other automated systems strongly influence how people feel and what they say. The findings show that algorithms reinforce biases and worldviews and contribute to ideological polarisation, as reflected in the dominance of echo chambers and filter bubbles across digital platforms. The paper also shows the value of sentiment analysis and natural language processing in detecting shifts in people's attitudes. By observing how individuals express themselves in public, the study provides useful information about how values and morals change over time and how these changes influence public discussion. Nevertheless, the results also indicate that although algorithms can be designed to improve the user experience, they can also be misused to deprive people of diverse perspectives and to deepen social divisions. Algorithmic influence on mass discourse gives rise to a range of distinct social problems. As long as algorithms govern the flow of information, there must be greater transparency in how they are built, greater accountability in how content is filtered, and close scrutiny of how human values are embedded in these systems. Future research should examine how to reduce the negative effects of algorithmic discrimination, so that a plurality of viewpoints is respected and public debate remains open to all. It will be important to ensure that algorithms serve society and reflect the evolving values of a global community. This is possible only by introducing moral frameworks into algorithm design and strengthening government oversight.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Attaran, M., and Celik, B. G. (2023). Digital Twin: Benefits, Use Cases, Challenges, and Opportunities. Decision Analytics Journal, 6, 100165. https://doi.org/10.1016/j.dajour.2023.100165

Barth, M. E., Li, K., and McClure, C. G. (2022). Evolution in Value Relevance of Accounting Information. The Accounting Review, 98, 1–28. https://doi.org/10.2308/TAR-2019-0521

Cijan, A., Jenič, A., Lamovsek, A., and Stemberger, J. (2019). How Digitalization Changes the Workplace. Dynamic Relationships Management Journal, 8, 3–21. https://doi.org/10.17708/DRMJ.2019.v08n01a01

Ebirim, G. U., Unigwe, I. F., Oshioste, E. E., Ndubuisi, N. L., Odonkor, B., and Asuzu, O. F. (2024). Innovations in Accounting and Auditing: A Comprehensive Review of Current Trends and Their Impact on U.S. Businesses. International Journal of Scientific Research, 11, 965–974. https://doi.org/10.30574/ijsra.2024.11.1.0134

Farhan, K. A., and Kawther, B. I. (2023). Accounting Audit Profession in Digital Transformation Environment Between Theoretical Framework and Practical Reality. World Economic and Finance Bulletin, 22, 120–123.

Guşe, G. R., and Mangiuc, M. D. (2022). Digital Transformation in Romanian Accounting Practice and Education: Impact and Perspectives. Amfiteatru Economic, 24, 252–267. https://doi.org/10.24818/EA/2022/59/252

Imene, F., and Imhanzenobe, J. (2020). Information Technology and the Accountant Today: What Has Really Changed? Journal of Accounting and Taxation, 12, 48–60. https://doi.org/10.5897/JAT2019.0358

Jackson, D., Michelson, G., and Munir, R. (2023). Developing Accountants for the Future: New Technology, Skills, and the Role of Stakeholders. Accounting Education, 32, 150–177. https://doi.org/10.1080/09639284.2022.2057195

Kraus, S., Ferraris, A., and Bertello, A. (2023). The Future of Work: How Innovation and Digitalization Re-Shape the Workplace. Journal of Innovation and Knowledge, 8, 100438. https://doi.org/10.1016/j.jik.2023.100438

Madan, B. S., Zade, N. J., Lanke, N. P., Pathan, S. S., Ajani, S. N., and Khobragade, P. (2024). Self-Supervised Transformer Networks: Unlocking New Possibilities for Label-Free Data. Panamerican Mathematical Journal, 34(4), 194–210. https://doi.org/10.52783/pmj.v34.i4.1878

Manta, A. G., Bădîrcea, R. M., Doran, N. M., Badareu, G., Gherțescu, C., and Popescu, J. (2024). Industry 4.0 Transformation: Analysing the Impact of Artificial Intelligence on the Banking Sector Through Bibliometric Trends. Electronics, 13, 1693. https://doi.org/10.3390/electronics13091693

Papadimitriou, I., Gialampoukidis, I., Vrochidis, S., and Kompatsiaris, I. (2024). AI Methods in Materials Design, Discovery and Manufacturing: A Review. Computational Materials Science, 235, 112793. https://doi.org/10.1016/j.commatsci.2024.112793

Seethamraju, R., and Hecimovic, A. (2022). Adoption of Artificial Intelligence in Auditing: An Exploratory Study. Australian Journal of Management, 48, 780–800. https://doi.org/10.1177/03128962221108440

Spilnyk, I., Brukhanskyi, R., Struk, N., Kolesnikova, O., and Sokolenko, L. (2022). Digital Accounting: Innovative Technologies Cause a New Paradigm. Independent Journal of Management and Production, 13, 215–224. https://doi.org/10.14807/ijmp.v13i3.1991


This work is licensed under a: Creative Commons Attribution 4.0 International License


© ShodhKosh 2025. All Rights Reserved.