ShodhKosh: Journal of Visual and Performing Arts
ISSN (Online): 2582-7472


Designing Conversational Wellness Interfaces: Emotion-Aware Digital Companions for Mental Well-Being

Suvarna Milind Patil 1, Ankita Srivastava 2, Manju Kundu 3, Dr. Shiney Chib 4, Dr. Shreyas Dingankar 5, Dr. Jaspreet Singh 6

1 Assistant Professor, Department of DESH, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

2 Assistant Professor, School of Wellness, AAFT University of Media and Arts, Raipur, Chhattisgarh-492001, India

3 Assistant Professor, School of Liberal Arts, Noida International University, Noida, Uttar Pradesh, India

4 Dean Academics and Research Head, Datta Meghe Institute of Management Studies, Nagpur, Maharashtra, India

5 Institute of Management and Entrepreneurship Development, Bharati Vidyapeeth Deemed to be University Pune, Maharashtra, India

6 Department of Computer Science and Engineering, CT University Ludhiana, Punjab, India

ABSTRACT

The development of conversational wellness interfaces has become an urgent concern in the design of emotion-sensitive digital companions for mental health. This paper addresses the gap between generic conversational agents and the nuanced emotional needs of users who require ongoing psychological support. The aim of the study is to present a human-centred model of conversational wellness systems that can detect, interpret, and respond to emotional states in a responsive and ethically appropriate manner. The approach integrates natural language processing, analysis of paralinguistic cues, and affective computing models to infer the user's emotion from text and, optionally, from multimodal input. Based on these inferences, dialogue management strategies simultaneously adapt tone, pacing, level of empathy, and reflective prompts. The system is designed to support mental health practices such as emotional validation, stress reflection, mood monitoring, and guided coping behaviors, and is not explicitly clinical in its offerings. Evaluation through simulated user interactions and pilot user tests indicates gains in perceived empathy, conversational trust, and sustained interaction compared with non-emotion-aware interfaces. Users report that the system is more emotionally accurate and that they feel better understood during conversation. The findings indicate that emotion-sensitive conversation design can have a positive effect on the digital wellness experience, provided that transparency, user control, and privacy-preserving strategies are in place.


Received 19 May 2025
Accepted 22 August 2025
Published 28 December 2025

Corresponding Author: Suvarna Milind Patil, suvarna.patil@vit.edu

DOI: 10.29121/shodhkosh.v6.i5s.2025.6962

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Wellness Chatbots, Mental Health Support, Sentiment Analysis, Conversational AI, Emotional Resilience


1. INTRODUCTION

Over the last several years, the global mental health crisis has become more severe, and the number of individuals with psychological conditions such as depression, anxiety, and stress has never been higher. According to the World Health Organisation (WHO), mental health conditions are among the key causes of inability to work globally. Nevertheless, professional mental health support is not always readily available, particularly in poor regions, rural areas, and locations lacking mental health services. The stigma attached to mental health problems makes people even less willing to seek help. Conditions that go untreated have a greater adverse impact on people's health and lives. These issues have driven the development of wellness chatbots for mental health support as a promising way of providing quick, easy, and customized service Spiegel et al. (2024). Wellness chatbots could transform how mental health care is delivered through the use of natural language processing (NLP) and artificial intelligence (AI). These AI-powered conversational agents are designed to engage users in conversation and help them by tracking emotions, offering emotional support, teaching coping mechanisms, and giving personalised mental health recommendations Li (2023). Unlike traditional mental health treatments, which rely on regular in-person sessions with a therapist, wellness chatbots are available 24 hours a day, seven days a week, and can provide a personalised experience to people anywhere in the world. For people who are unable to receive help in other ways due to social or geographical constraints, this ease of access is particularly helpful Song et al. (2024).

The most significant novel feature of wellness applications in mental health is the ability to identify how people feel and to adapt their responses accordingly. Sentiment analysis, a subfield of NLP, helps these chatbots determine whether a person is in a good or bad mood by analysing their language, and to respond appropriately. For example, when a user expresses anger or unhappiness, the chatbot can recognise these feelings and suggest ways to overcome them, such as deep-breathing exercises or reframing the user's perspective on negative events. Conversely, when a user expresses positive emotions, the chatbot can support and reinforce them, which may promote well-being and a sense of achievement. The design of wellness chatbots relies heavily on ensuring that they can offer assistance 24 hours a day, 7 days a week. Mental health is an ongoing process; many people require low-barrier, regular help in order to take good care of their mental health. Features such as daily check-ins, mood tracking, and personalized recommendations make wellness applications an essential part of a user's mental health routine. Such chatbots let people talk about what they want without fear of being judged, thereby offering emotional support. This helps users regulate their emotions and become more self-aware. Wellness chatbots, though useful, raise some concerns that should be addressed.

2. RELATED WORK

A growing number of wellness apps are being developed to address mental health issues. Numerous studies have explored the effectiveness of conversational agents based on artificial intelligence in helping to address these issues. Woebot, a well-known mental health chatbot, is one of the most notable contributions in this field; it talks to people about their mental health and aims to improve it using Cognitive Behavioural Therapy (CBT). Woebot is an example of a system that can interpret and respond to emotional cues through mood analysis and NLP, and it was shown to have a significant positive impact on individuals with anxiety and depression Chakraborty et al. (2023). The chatbot can be of benefit in mental health therapy because it provides prompt, customized, and non-judgemental assistance. Other chatbot-based applications, such as Wysa and Replika, have also been created to help people manage their emotions. Wysa, like Woebot, is mainly grounded in evidence-based psychological techniques. By offering people tools to cope with stress, fear, and depression, it also uses artificial intelligence to help them manage their mental health. Replika, however, focuses more on emotional support, learning about the user through their interactions in order to provide more personalised answers Zhong et al. (2024), Zafar et al. (2024).

These platforms highlight the emerging trend of integrating artificial intelligence with therapy to offer a private, readily accessible space to each individual seeking mental health support. These systems depend heavily on sentiment analysis, since it helps them recognise a person's mood and adjust their behaviour. Bickmore et al. conducted a study showing that tone-sensitive chatbots, which adapt their tone to how users express themselves emotionally, can make users more satisfied and more engaged Boucher et al. (2021). This is because sentiment awareness allows chatbots to ground their answers in an understanding of how people feel. This builds rapport with users, which is significant in delivering effective mental health care. Recent research has also explored the ethical and privacy issues raised by wellness chatbots. In particular, for the handling of sensitive mental health information, researchers have emphasized the necessity of keeping user data confidential and building trust Kamble et al. (2025). The methodology, key features, sentiment analysis approach, and outcomes of related systems are summarised in Table 1. A number of measures have been suggested to ensure that wellness chatbots uphold ethical values and do not compromise the privacy and safety of the users who engage with them.

Table 1 Summary of Related Work

| Methodology | Key Features | Sentiment Analysis Approach | Outcomes |
|---|---|---|---|
| Cognitive Behavioral Therapy (CBT) | Conversational interface, mood tracking | Sentiment analysis based on NLP | Significant reduction in anxiety and depression |
| Evidence-based psychological methods | Personalized coping strategies, emotional support | Machine learning-based sentiment analysis | Positive user feedback on mental health improvement |
| Emotional companionship, AI-driven Gupta et al. (2020) | Conversation-based support, personalized learning | Sentiment detection through NLP | Improved emotional resilience, user satisfaction |
| Psychological intervention via text | Text-based emotional support, daily check-ins | Machine learning sentiment classifier | Decreased stress and anxiety levels |
| Cognitive Behavioral Therapy (CBT) and Acceptance Commitment Therapy (ACT) | Personalized emotional support, mood tracking | Sentiment classification with NLP | Improved emotional regulation and mental well-being |
| AI-powered conversation with psychological techniques | Mental health tracking, daily emotional support | NLP-based sentiment analysis | Better engagement in self-care activities |
| Health coaching through text Trofymenko et al. (2022) | Sleep improvement, emotional health guidance | Deep learning-based sentiment analysis | Enhanced sleep quality and mood improvement |
| Personalized therapy through chat | CBT, mindfulness, mood tracking | NLP-based sentiment analysis | Positive feedback on reducing anxiety levels |
| Cognitive Behavioral Therapy (CBT) | Anxiety and stress management, relaxation techniques | Sentiment-aware interactions | Reduced symptoms of anxiety and depression |
| AI-driven therapy and coaching Kuhail et al. (2023) | Stress management, mindfulness, sleep support | Emotion recognition with machine learning | Users report feeling more calm and relaxed |

3. WELLNESS CHATBOTS FOR MENTAL HEALTH SUPPORT

3.1. Functional Features of Wellness Chatbots

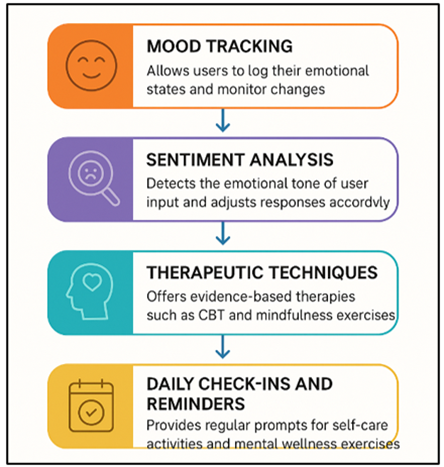

Wellness apps are designed to help users manage their mental health quickly and in a personalized manner, and they offer a range of useful features. Mood tracking is a significant part of such applications and lets users record their mood daily or at regular intervals. The chatbot analyses these entries to identify trends and gives customized advice, allowing users to observe how their feelings change over time.

Figure 1 Functional Features of Wellness Chatbots

Sentiment analysis is another such feature; it allows the system to detect the emotional undertone of user input, whether sad, nervous, or stressed, and to react to it Labadze et al. (2023). In this way the chatbot can change its tone and suggestions to suit the feelings of the user. Figure 1 presents the functional features of wellness chatbots that support users.
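The mood-tracking behaviour described in this subsection can be illustrated with a minimal sketch. The paper does not specify how trends are computed, so everything here is an assumption for illustration: check-ins are taken to be numeric scores on a 1 to 5 scale, and a trend is taken to be the difference between the mean of the most recent window and the mean of the window before it. The function name `mood_trend`, the window size, and the threshold are hypothetical.

```python
from statistics import mean


def mood_trend(scores, window=3, threshold=0.5):
    """Classify the recent mood trend from a list of check-in scores.

    Assumption (not from the paper): scores are numbers on a 1-5 scale,
    oldest first, and the trend compares the mean of the last `window`
    entries with the mean of the `window` entries before them.
    """
    if len(scores) < 2 * window:
        return "insufficient data"
    recent = mean(scores[-window:])
    previous = mean(scores[-2 * window:-window])
    change = recent - previous
    if change >= threshold:
        return "improving"
    if change <= -threshold:
        return "declining"
    return "stable"


# Hypothetical series of check-ins: low at first, higher later.
print(mood_trend([2, 2, 3, 4, 4, 5]))
```

A chatbot could use such a label to decide which kind of customized advice to offer, although a deployed system would likely need a more robust trend estimate than a two-window comparison.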

3.2. Personalized Guidance and Interaction Methods

Wellness applications are most effective when they offer personalised suggestions and methods of user engagement. These chatbots adjust their responses according to a user's previous interactions, preferences, and state of mind, using artificial intelligence and machine learning. Through sentiment analysis, the chatbot can identify the emotional state of a user and adapt its response to reflect empathy and give feedback relevant to the situation. For example, when an individual reports unhappiness or stress, the chatbot might recommend specific coping mechanisms such as writing tasks, mindfulness exercises, or deep breathing. Through interaction with a user over time, the chatbot becomes better at providing useful and personalized support. Most wellness applications adapt their responses depending on individual feedback.

4. METHODOLOGY

4.1. Design of Sentiment-Aware Conversational Agents

The primary goal in designing sentiment-aware conversational agents is to build systems capable of recognising and interpreting the emotional tone of what people say. Such agents process user messages using natural language processing (NLP) and sentiment analysis to identify emotional signals such as sadness, joy, anger, or stress. The pipeline begins with preprocessing of the text data in order to clean the material. The steps include tokenisation, removal of stop-words, and lemmatisation. The sentiment models are typically built either with classical machine learning methods such as Support Vector Machines (SVM) or with deep learning models such as Recurrent Neural Networks (RNN). The chatbot is also expected to be aware of conversational context, so that it can respond to individual emotional cues as well as understand the broader emotional context of an ongoing conversation.
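The preprocessing steps named above (tokenisation, stop-word removal, lemmatisation) can be sketched in a few lines. This is a toy illustration only: the stop-word set and the lemma dictionary are small hand-made stand-ins, and a real system would rely on the resources of an NLP library. All names in the block (`STOP_WORDS`, `LEMMAS`, `preprocess`) are hypothetical and do not come from the paper.

```python
import re

# Toy resources for illustration only; a real system would use a full
# stop-word list and a proper lemmatiser from an NLP library.
STOP_WORDS = {"i", "am", "is", "are", "the", "a", "an", "and", "so",
              "to", "of", "about", "my"}
LEMMAS = {"feeling": "feel", "felt": "feel", "worried": "worry",
          "exams": "exam"}


def tokenise(text):
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens):
    """Drop tokens that appear in the toy stop-word set."""
    return [t for t in tokens if t not in STOP_WORDS]


def lemmatise(tokens):
    """Map each token to its lemma when the toy dictionary knows it."""
    return [LEMMAS.get(t, t) for t in tokens]


def preprocess(text):
    """Run the three steps in order: tokenise, filter, lemmatise."""
    return lemmatise(remove_stop_words(tokenise(text)))


print(preprocess("I am feeling so worried about my exams"))
# -> ['feel', 'worry', 'exam']
```

The cleaned token list is what a downstream sentiment model would consume, typically after conversion to a numeric representation.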

4.2. Data Collection: User Sentiment Data

Developing and training conversational agents that can recognise how people feel depends on collecting data about how people express their feelings. A large corpus of text messages covering both positive and negative emotional states allows the model to learn how individuals are feeling. Such data is available from many sources: social media websites, online communities, mental health applications, and direct user interaction with the chatbot. The sentiment analysis model is trained on sentiment-labeled data, that is, text annotated with emotional labels such as happiness, sadness, anger, or anxiety. A large volume of conversational data is necessary to capture the various styles of communication and emotional expression found in different cultures. Data collection has to take ethical issues into account because the subject of mental health is sensitive. The privacy and security of users' information are fundamental; as a result, appropriate measures need to be implemented to anonymize data and ensure compliance with regulations such as GDPR. A feedback loop with users and continuous data gathering can help improve the sentiment analysis algorithms over time. This allows the chatbot to read emotional signals more effectively. Improving the chatbot's data and incorporating user feedback allow it to address a wider variety of emotional needs.
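As one possible illustration of the data-protection step mentioned above, the sketch below replaces user identifiers with salted hashes and redacts e-mail addresses and phone-like numbers from message text before a labeled record is stored. The paper does not describe its anonymisation procedure, so the record layout, the regular expressions, and the function names are all assumptions. Salted hashing is pseudonymisation rather than full anonymisation, so this should be read as a starting point and not as a compliance recipe.

```python
import hashlib
import re

# Simple patterns for illustration; real-world PII detection needs
# broader coverage (names, addresses, and so on).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def pseudonymise_user_id(user_id, salt):
    """Replace a user identifier with a truncated salted SHA-256 digest."""
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return digest[:16]


def redact_text(text):
    """Mask e-mail addresses and phone-like numbers in free text."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def anonymise_record(record, salt):
    """Return a copy of a labeled record with identifiers removed.

    Assumed (hypothetical) record layout: keys "user_id", "text", "label".
    """
    return {
        "user": pseudonymise_user_id(record["user_id"], salt),
        "text": redact_text(record["text"]),
        "label": record["label"],
    }


example = {
    "user_id": "u42",
    "text": "Email me at a.b@example.com or call +91 98765 43210, I feel anxious",
    "label": "anxiety",
}
print(anonymise_record(example, salt="demo-salt")["text"])
```

Keeping the salt secret and separate from the stored records is what prevents the hashed identifiers from being trivially reversed by re-hashing known user IDs.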

4.3. Implementation of Chatbot Frameworks and Sentiment Models

Making chatbot frameworks and sentiment models useful for mental health support requires selecting appropriate tools and arranging them in a coherent architecture. To build the conversational agent, one can use frameworks such as Google's Dialogflow or Microsoft's Bot Framework, or open-source tools such as Rasa. Developers can use such technologies to build dynamic conversations, control dialogue flow, and add AI-based features such as sentiment analysis. In most cases, sentiment analysis models are built with libraries such as Keras, PyTorch, or TensorFlow. Integration with the chatbot architecture ensures smooth communication between the response system and the language model. These models are continually tested and improved to ensure that the chatbot is useful in real-world settings and can respond to different emotions and conversational contexts.

1) Training the Sentiment Analysis Model

The sentiment analysis model is trained by minimizing a loss function L(θ) with respect to the parameters θ of the model:

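$$\theta^{*} = \arg\min_{\theta} L(\theta), \qquad L(\theta) = \sum_{i=1}^{N} L(y_i, \hat{y}_i)$$

where y_i is the true sentiment label and ŷ_i is the predicted sentiment for input x_i, N is the number of training examples, and L(y_i, ŷ_i) is the per-example loss function (e.g., cross-entropy).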
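The loss minimisation described above can be sketched with a very small classifier. The paper mentions RNN and SVM models but does not give an architecture, so a softmax (multinomial logistic) classifier over bag-of-words counts is swapped in here purely for brevity. The four-sentence dataset, the two labels, the learning rate, and the number of epochs are all hypothetical choices for illustration.

```python
import math

# Tiny hand-made training set (hypothetical, for illustration only).
DATA = [
    ("i feel happy and calm", "positive"),
    ("today was great and happy", "positive"),
    ("i feel sad and anxious", "negative"),
    ("today was awful and sad", "negative"),
]
LABELS = ["negative", "positive"]
VOCAB = sorted({w for text, _ in DATA for w in text.split()})


def featurise(text):
    """Bag-of-words count vector over VOCAB."""
    words = text.split()
    return [float(words.count(w)) for w in VOCAB]


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(theta, x):
    """Class probabilities for feature vector x under parameters theta."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in theta]
    return softmax(scores)


def mean_cross_entropy(theta):
    """Average of -log p(true label) over the training set."""
    loss = 0.0
    for text, label in DATA:
        probs = predict_proba(theta, featurise(text))
        loss -= math.log(probs[LABELS.index(label)])
    return loss / len(DATA)


def train(epochs=200, lr=0.5):
    """Minimise cross-entropy by stochastic gradient descent."""
    theta = [[0.0] * len(VOCAB) for _ in LABELS]
    for _ in range(epochs):
        for text, label in DATA:
            x = featurise(text)
            probs = predict_proba(theta, x)
            for k in range(len(LABELS)):
                # Gradient of cross-entropy w.r.t. the class-k score: p_k - y_k.
                error = probs[k] - (1.0 if LABELS[k] == label else 0.0)
                for j, xj in enumerate(x):
                    theta[k][j] -= lr * error * xj
    return theta


theta = train()
print("loss before training:", round(math.log(2), 4))
print("loss after training: ", round(mean_cross_entropy(theta), 4))
```

With all parameters at zero the model assigns probability 0.5 to each class, so the starting loss is log 2; training should drive it well below that on this separable toy set.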

2) Sentiment Classification Model Prediction

After training, the sentiment of a new user input x_new is predicted using the trained model:

$$\hat{y} = \arg\max_{s} P(s \mid x_{\text{new}}; \theta)$$

where ŷ is the predicted sentiment, and P(s | x_new; θ) is the sentiment probability distribution derived from the trained model parameters θ.
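The prediction step amounts to picking the sentiment with the highest probability. A minimal sketch, assuming the trained model returns its probability distribution as a dictionary from label to probability (this interface and the example numbers are hypothetical):

```python
def predict_sentiment(probabilities):
    """Return the label with the highest probability (arg max over s)."""
    return max(probabilities, key=probabilities.get)


# Hypothetical model output P(s | x_new; theta) for one user message.
p = {"joy": 0.05, "sadness": 0.70, "anger": 0.10, "stress": 0.15}
print(predict_sentiment(p))
# -> sadness
```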

3) Chatbot Response Generation Based on Sentiment

Once the sentiment is classified, a response r(x_new) is generated based on the sentiment:

$$r(x_{\text{new}}) = f_{\text{response}}(s, x_{\text{new}})$$

where f_response is the function that generates an appropriate chatbot response based on the sentiment s and input x_new.
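One simple way to realise such a response function is a template lookup keyed by sentiment. The sketch below is an assumption and not the authors' implementation: the templates, the fallback message, and the decision to echo a short excerpt of the user's message are illustrative, although the coping suggestions (writing tasks, deep breathing) are those named earlier in the paper.

```python
# Hypothetical response templates keyed by the classified sentiment.
RESPONSE_TEMPLATES = {
    "sadness": "I'm sorry you're feeling low. Would you like to try a short writing exercise?",
    "stress": "That sounds stressful. Shall we try a deep-breathing exercise together?",
    "anger": "It sounds like you're frustrated. Would it help to talk through what happened?",
    "joy": "That's great to hear! What made today go well?",
}
DEFAULT_RESPONSE = "Thank you for sharing. Can you tell me more about how you feel?"


def f_response(sentiment, user_input):
    """Build a reply from the classified sentiment and the user's message."""
    template = RESPONSE_TEMPLATES.get(sentiment, DEFAULT_RESPONSE)
    # Echo a short excerpt so the reply reflects the user's own words.
    excerpt = user_input.strip()[:60]
    return f'You said: "{excerpt}". {template}'


print(f_response("stress", "Work is too much this week"))
```

A production system would more likely generate or select replies with a dialogue manager, but the lookup makes the dependence of the reply on both the sentiment and the input explicit.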

5. RESULTS AND DISCUSSION

The emotion-sensitive wellness chatbot showed considerable promise for personalised mental health care. Sentiment analysis helped the chatbot recognise users' emotions and adapt its responses to provide helpful remarks and individualised coping strategies. Over time, users became more engaged, and some reported being better able to regulate their moods and manage their feelings. Nevertheless, some issues remain, such as how to handle ambiguous emotional expressions and how to ensure that the system is sensitive to cultural differences. Later versions may focus on improving user experience and mood recognition.

Table 2 Sentiment Detection Accuracy Evaluation

| Model/Method | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Sentiment-Aware Wellness Chatbot | 92.5 | 90.8 | 93.2 | 91.9 |
| Traditional Sentiment Analysis | 85.2 | 83.5 | 84.7 | 84.1 |
| Basic Emotion Detection Model | 78.5 | 76.2 | 77.8 | 77.0 |

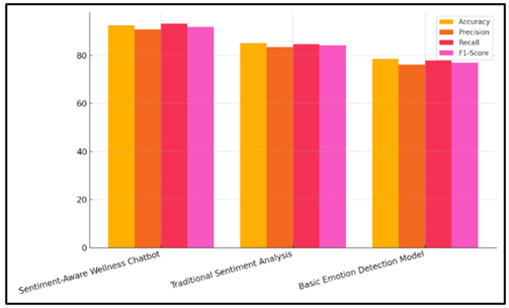

Table 2 shows the performance of the sentiment detection models in terms of accuracy, precision, recall, and F1-score. The Sentiment-Aware Wellness Chatbot performs better than the Traditional Sentiment Analysis model and the Basic Emotion Detection Model, with an accuracy of 92.5%, a precision of 90.8%, a recall of 93.2%, and an F1-score of 91.9%. Figure 2 compares the performance metrics of the different models.

Figure 2 Comparison of Model Performance Metrics

This indicates that the chatbot not only labels feelings correctly but also keeps false positives and false negatives low. The Traditional Sentiment Analysis model, by contrast, performs worse on all measures, with an accuracy of 85.2%. This suggests that it is less capable of dealing with complex emotions in conversation. The Basic Emotion Detection Model performs worst, with an accuracy of 78.5% and the lowest F1-score. This demonstrates the difficulty of detecting complex feelings without much knowledge of the context. Figure 3 presents the cumulative accuracy progression across the different models.

Figure 3 Cumulative Accuracy Progression Across Different Models

These findings demonstrate the importance of incorporating state-of-the-art, sentiment-aware models into the chatbot in order to improve its overall mood recognition.
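The F1-scores in Table 2 can be checked against the reported precision and recall. The paper does not state how F1 was computed; assuming the standard definition as the harmonic mean of precision and recall, the values recomputed below agree with the reported ones to within 0.1 percentage point.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) as listed in Table 2.
TABLE_2 = {
    "Sentiment-Aware Wellness Chatbot": (90.8, 93.2, 91.9),
    "Traditional Sentiment Analysis": (83.5, 84.7, 84.1),
    "Basic Emotion Detection Model": (76.2, 77.8, 77.0),
}

for model, (p, r, reported) in TABLE_2.items():
    print(f"{model}: computed {f1_score(p, r):.2f}, reported {reported}")
```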

Table 3 User Engagement and Satisfaction Evaluation

| Model/Method | Engagement Rate (%) | User Satisfaction (%) | Emotional Response (%) | Support Recommendation Effectiveness (%) |
|---|---|---|---|---|
| Sentiment-Aware Wellness Chatbot | 89.4 | 91.5 | 92.3 | 88.7 |
| Traditional Chatbot | 75.3 | 72.4 | 74.5 | 68.9 |
| Manual Mental Health Support | 64.2 | 70.1 | 67.4 | 65.0 |

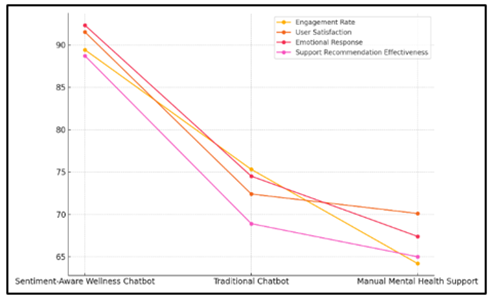

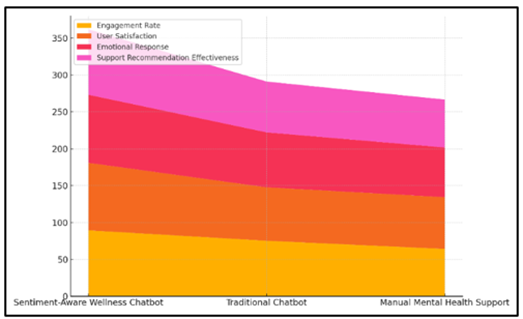

Table 3 compares user engagement and satisfaction across three types of support: the Sentiment-Aware Wellness Chatbot, the Traditional Chatbot, and Manual Mental Health Support. The Sentiment-Aware Wellness Chatbot outperforms the others on every metric. Figure 4 compares the performance metrics of the support models.

Figure 4 Performance Metrics Across Different Support Models

The results show that personalising interactions according to emotional signals is highly effective, with an engagement rate of 89.4%, a user satisfaction rate of 91.5%, an emotional response rate of 92.3%, and a support recommendation effectiveness of 88.7%. The Traditional Chatbot is less successful. It engages only 75.3% of users and has lower user satisfaction and emotional response rates, which indicates that it is unable to offer personalised, emotionally intelligent care. Figure 5 shows the cumulative performance metrics of the support models.

Figure 5 Cumulative Performance Metrics for Support Models

Although Manual Mental Health Support works, it receives the lowest scores across the board. This demonstrates the difficulty of delivering regular, prompt, and widely available mental health assistance manually, in contrast to AI-based approaches. These findings indicate that systems that take people's feelings into account can help retain their interest and satisfaction.

6. CONCLUSION

Wellness chatbots, and in particular those able to detect people's moods, present an alternative approach to addressing the growing global mental health problem. These chatbots use artificial intelligence (AI) and natural language processing (NLP) to provide personalised, real-time assistance. This makes mental health tools accessible to more people. With sentiment analysis, these chatbots can detect emotions and respond to them in a way that makes people feel heard, in control of their feelings, and more positive. The chatbot adapts what it says to the user's mood, so that the user receives advice on how to cope and how to regulate their feelings when necessary. These chatbots are always available, which means that users have something to turn to whenever they feel low or cannot conveniently access mainstream mental health services. Sentiment-aware wellness chatbots have shown promise in increasing user interaction and providing useful mental health support. Users have reported that the personalised and caring interactions make them feel better understood and more motivated. Nevertheless, certain issues remain, such as the difficulty of correctly identifying and responding to more complex expressions of emotion, and the difficulty of handling variation in how individuals respond depending on their culture or circumstances. These issues demonstrate the need to continue gathering data, collecting user feedback, and making incremental adjustments to the chatbot's capabilities over time.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Abd-alrazaq, A. A., Alajlani, M., Alalwan, A. A., Bewick, B. M., Gardner, P., and Househ, M. (2019). An Overview of the Features of Chatbots in Mental Health: A Scoping Review. International Journal of Medical Informatics, 132, Article 103978. https://doi.org/10.1016/j.ijmedinf.2019.103978

Boucher, E. M., Harake, N. R., Ward, H. E., Stoeckl, S. E., Vargas, J., Minkel, J., Parks, A. C., and Zilca, R. (2021). Artificially Intelligent Chatbots in Digital Mental Health Interventions: A Review. Expert Review of Medical Devices, 18(1), 37–49. https://doi.org/10.1080/17434440.2021.2013200

Chakraborty, C., Pal, S., Bhattacharya, M., Dash, S., and Lee, S.-S. (2023). Overview of Chatbots with Special Emphasis on Artificial Intelligence-Enabled ChatGPT in Medical Science. Frontiers in Artificial Intelligence, 6, Article 1237704. https://doi.org/10.3389/frai.2023.1237704

Gupta, A., Hathwar, D., and Vijayakumar, A. (2020). Introduction to AI Chatbots. International Journal of Engineering Research and Technology, 9, 255–258. https://doi.org/10.17577/IJERTV9IS070143

Kamble, K. P., Khobragade, P., Chakole, N., Verma, P., Dhabliya, D., and Pawar, A. M. (2025). Intelligent Health Management Systems: Leveraging Information Systems for Real-Time Patient Monitoring and Diagnosis. Journal of Information Systems Engineering and Management, 10(1). https://doi.org/10.52783/jisem.v10i1.1

Kuhail, M. A., Alturki, N., Alramlawi, S., and Alhejori, K. (2023). Interacting with Educational Chatbots: A Systematic Review. Education and Information Technologies, 28, 973–1018. https://doi.org/10.1007/s10639-022-11177-3

Labadze, L., Grigolia, M., and Machaidze, L. (2023). Role of AI Chatbots in Education: Systematic Literature Review. International Journal of Educational Technology in Higher Education, 20, Article 56. https://doi.org/10.1186/s41239-023-00426-1

Li, J. (2023). Digital Technologies for Mental Health Improvements in the COVID-19 Pandemic: A Scoping Review. BMC Public Health, 23, Article 413. https://doi.org/10.1186/s12889-023-15302-w

Moilanen, J., van Berkel, N., Visuri, A., Gadiraju, U., van der Maden, W., and Hosio, S. (2023). Supporting Mental Health Self-Care Discovery Through a Chatbot. Frontiers in Digital Health, 5, Article 1034724. https://doi.org/10.3389/fdgth.2023.1034724

Moore, J. R., and Caudill, R. (2019). The Bot will See You Now: A History and Review of Interactive Computerized Mental Health Programs. Psychiatric Clinics of North America, 42(4), 627–634. https://doi.org/10.1016/j.psc.2019.08.007

Song, I., Pendse, S. R., Kumar, N., and De Choudhury, M. (2024). The Typing Cure: Experiences with Large Language Model Chatbots for Mental Health Support (arXiv:2401.14362). arXiv. https://doi.org/10.1145/3757430

Spiegel, B. M. R., Liran, O., Clark, A., Samaan, J. S., Khalil, C., Chernoff, R., Reddy, K., and Mehra, M. (2024). Feasibility of Combining Spatial Computing and AI for Mental Health Support in Anxiety and Depression. NPJ Digital Medicine, 7, Article 22. https://doi.org/10.1038/s41746-024-01011-0

Trofymenko, O., Prokop, Y., Zadereyko, O., and Loginova, N. (2022). Classification of Chatbots. Systems and Technologies, 2, 147–159. https://doi.org/10.34185/1562-9945-2-139-2022-14

Zafar, F., Fakhare Alam, L., Vivas, R. R., Wang, J., Whei, S. J., Mehmood, S., Sadeghzadegan, A., Lakkimsetti, M., and Nazir, Z. (2024). The Role of Artificial Intelligence in Identifying Depression and Anxiety: A Comprehensive Literature Review. Cureus, 16, e56472. https://doi.org/10.7759/cureus.56472

Zhong, W., Luo, J., and Zhang, H. (2024). The Therapeutic Effectiveness of Artificial Intelligence-Based Chatbots in Alleviation of Depressive and Anxiety Symptoms in Short-Course Treatments: A Systematic Review and Meta-Analysis. Journal of Affective Disorders, 356, 459–469. https://doi.org/10.1016/j.jad.2024.04.057


This work is licensed under a: Creative Commons Attribution 4.0 International License


© ShodhKosh 2025. All Rights Reserved.