ShodhKosh: Journal of Visual and Performing Arts
ISSN (Online): 2582-7472

NLP-Based Music Lyric Analysis in Education

Swati Chaudhary 1, Prakriti Kapoor 2, Jyoti Rani 3, Ashok Kumar Kulandasamy 4, R. Shobana 5, Tushar Jadhav 6

1 Assistant Professor, School of Business Management, Noida International University, India

2 Centre of Research Impact and Outcome, Chitkara University, Rajpura, 140417, Punjab, India

3 Assistant Professor, Department of Fashion Design, Parul Institute of Design, Parul University, Vadodara, Gujarat, India

4 Professor, Department of Computer Science and Engineering, Sathyabama Institute of Science and Technology, Chennai, Tamil Nadu, India

5 Associate Professor, Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

6 Department of Electronics and Telecommunication Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

ABSTRACT

Lyric analysis through Natural Language Processing (NLP) sits at the intersection of computational linguistics, affective computing, and educational innovation. Using deep learning networks (CNNs to identify rhythmic patterns, Transformers to map song lyrics to contextual emotions, and GANs to add metaphors to the lyrics), the framework converts song lyrics into computational-affective products. The research combines quantitative modeling with qualitative pedagogy, making it possible to visualize emotions, track Valence-Arousal-Dominance (VAD), and detect metaphors in support of language learning and emotional literacy. A curated multilingual lyric corpus of pop, folk, and educational songs was evaluated using BERT-based sentiment models and topic clustering. Empirical findings indicate an F1-score of 0.87 for emotion classification and significant pedagogical gains, including a 28–34% improvement in student comprehension, empathy, and engagement. Teachers who used AI-assisted dashboards reported high adoption (87%), along with improved interpretive dialogue and inclusivity. These results were supported by donut chart representations of emotional distribution, engagement, and teacher satisfaction. The framework also extends linguistic and cultural knowledge, reframes AI as a collaborative co-creator in education, and enables reflective, empathetic, and data-informed learning experiences. Future directions include multimodal lyric analysis (text and audio), adaptive learning systems based on cognitive profiles, and culturally balanced corpora that maintain regional diversity. Lyric analysis using NLP therefore creates a platform for emotionally intelligent, culturally inclusive, and AI-augmented learning.

Received 12 June 2025

Accepted 26 September 2025

Published 28 December 2025

Corresponding Author: Swati Chaudhary, swati.chaudhary@niu.edu.in

DOI: 10.29121/shodhkosh.v6.i5s.2025.6884

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: NLP-based Lyric Analysis, Emotion Recognition, Affective Computing, Educational AI, Transformer Models, Cultural Pedagogy, VAD Mapping, Metaphor Detection, Deep Learning in Education, Empathy-Oriented Learning

1. Introduction

Music has long connected cognition, emotion, and language. The poetic structure of song lyrics carries not only rhythm and rhyme but also rich semantic and emotional patterns in which human thought, memory, and culture are reflected. In educational settings, lyrics go beyond artistic expression to become linguistic laboratories where students encounter phonetic variety, syntactic variety, and metaphorical richness in a form that is both engaging and easy to remember. As Natural Language Processing (NLP) has progressed, the analysis of music lyrics has moved beyond traditional literary analysis into computational semantics and emotion modeling Li et al. (2024), Agostinelli et al. (2023). Combining NLP with the study of lyrics gives educators a different lens for examining how linguistic creativity, emotional resonance, and social narratives merge in musical texts, and for strengthening literacy and emotional intelligence among students. With the introduction of deep learning architectures such as BERT, RoBERTa, GPT, and Transformer-based sentiment classifiers, it is now possible to untangle the intricate interactions between language, emotion, and rhythm in a lyrical text Huang et al. (2022). These models can encode latent semantic structures (metaphor, irony, and thematic coherence) that previously could be obtained only through human interpretation. Applied to education, NLP-based lyric analysis delivers practical information about linguistic complexity, musical culture, and the psychological resonance of music. By measuring features such as lexical richness, rhyme density, sentiment polarity, and emotional trajectory, educators can design song-based learning experiences suited to learners' cognitive and emotional development Lam et al. (2023).

From a pedagogical perspective, this interdisciplinary strategy aligns with the current move toward multimodal and experiential learning, in which music is treated as both content and context for linguistic exploration. For example, NLP can extract word patterns from lyrics to aid language learning, detect the predominant affective themes in lyrics to prompt discussions about empathy, or compare lyrical metaphors across cultures to promote intercultural communication. The lyric thus becomes a computationally enhanced text: one that is artistically crafted and whose linguistic and emotive aspects can also be measured Chen et al. (2024). Moreover, NLP-based lyric analysis goes beyond linguistic competence to encourage creative thinking and self-expression. By working with algorithmic interpretations of songs, students are motivated to challenge machine biases, to reflect on cultural meaning, and to reinterpret lyrics from their own creative perspective. This synthesis of human interpretation and machine intelligence fosters critical digital literacy, which is becoming an important skill in AI-integrated education Copet et al. (2023). In essence, the lyric mind is a convergence of artistic intuition and analytic precision, an evolving paradigm in which computational linguistics and music pedagogy together enhance the ways learners perceive, comprehend, and make sense of words and sound.

2. Theoretical Foundations of Lyric Intelligence

The Natural Language Processing (NLP) analysis of song lyrics rests on theoretical foundations in semiotics, cognitive linguistics, and educational psychology. Together, these fields explain how language, sound, and meaning interact in music, and how that interaction can be put to educational use Chin et al. (2018). Fundamentally, the term lyric intelligence describes the ability of computational systems to encode, decode, and respond to the linguistic and emotional content of song lyrics in a manner consistent with human cognition and cultural perception Kim and Yi (2019). It is an interdisciplinary synthesis of the humanities and the computational sciences that provides a foundation for integrating music-based language learning with AI-driven textual analysis. From the semiotic viewpoint, lyrics are not just a series of words; they are symbols encoded in a cultural and emotional language. In Ferdinand de Saussure's model of signifier and signified, lyrics can be considered multi-layered systems of signs in which phonetic rhythm, poetic imagery, and musical tone jointly create meaning. Roland Barthes expanded this perspective with his focus on the grain of the voice, in which the physicality of sound enters into the semiotic richness of a text Bergelid (2018). NLP models recreate this interpretive power computationally through semantic embeddings, which represent linguistic signs numerically and encode connotation, metaphor, and emotional engagement. Lyric intelligence in NLP may therefore be regarded as the algorithmic analogue of semiotic decoding, which reduces human interpretive richness to quantifiable linguistic configurations.

Table 1 Mapping Cognitive–Affective Constructs to NLP-Based Lyric Features

| Construct | Description | Example Lyric Feature | NLP Analytical Method | Educational Value |
|---|---|---|---|---|
| Emotion Representation | Affective states expressed through lexical choice and metaphor | “Tears fall like rain” | Sentiment analysis / emotion classification (VADER, RoBERTa) | Supports emotional literacy and reflection |
| Conceptual Metaphor | Linking abstract experiences to physical imagery | “Climbing the mountain of hope” | Metaphor detection via embedding similarity and POS tagging | Improves abstract reasoning and figurative language skills |
| Rhythmic Structure | Phonetic and metric regularity shaping memory and flow | Internal rhyme, assonance | Prosodic analysis using syllable segmentation | Enhances auditory pattern recognition |
| Cultural Semantics | Context-specific imagery and idioms encoding shared values | Folk motifs, regional references | Topic modeling / cultural keyword clustering | Fosters cross-cultural understanding |
| Narrative Coherence | Logical and emotional progression across verses | Story arc in verses | Sequence modeling using LSTM / Transformer | Develops comprehension and narrative mapping ability |

The theoretical principles of lyric intelligence underline that lyrics cannot be interpreted without synthesizing symbolic, cognitive, and emotional responses. NLP provides a powerful paradigm for operationalizing these theories, translating abstract concepts of meaning, emotion, and form into objects that can be analyzed computationally. Through this combination, lyric analysis becomes an educational tool that not only teaches language but also enriches cultural empathy, critical thinking, and creativity.

3. Proposed System Architecture for NLP-Based Lyric Analysis

The computational framework for NLP-based lyric analysis comprises linguistic preprocessing, semantic modeling, emotion recognition, and pedagogical visualization. The model takes unstructured lyric text and organizes it into structured cognitive-affective knowledge for use in education, so that teachers and students can treat songs not only as art but also as rich sources of linguistic, emotional, and cultural knowledge. The workflow combines state-of-the-art NLP architectures with educational data analytics, bridging artistic interpretation and computational reasoning Fell et al. (2019). The foundation of the framework is text preprocessing and linguistic normalization, which determines the accuracy and consistency of all subsequent NLP operations. Lyrics are gathered from curated databases, online repositories, or educational music corpora, and cleaning operations remove special characters, punctuation, and metadata such as timestamps or non-lyrical annotations Rospocher (2021). Stop words are removed, and the text is tokenized and lemmatized. For multilingual or code-mixed lyrics, language detection and transliteration modules are applied in order to maintain cultural authenticity. This step yields a normalized corpus that can be used for semantic and affective modeling. The second layer is feature extraction and semantic representation. The lyrics are converted to high-dimensional vectors using embedding methods such as Word2Vec, GloVe, BERT, or Sentence Transformers, which can encode the meaning, context, and sentiment of the lyrics. Compared with basic keyword models, contextual embeddings make it possible to identify figurative language such as metaphors or idioms, which is prevalent in poetic lyrics. This embedded representation supports semantic similarity between lyrics, thematic grouping, and cross-genre comparisons of how themes such as love or resistance are conveyed in folk, pop, or protest music.
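The cleaning and tokenization steps of the preprocessing layer can be illustrated with a minimal sketch. It is not the authors' pipeline: the regular expressions, the tiny stop-word list, and the function names `clean_line`, `tokenize`, and `preprocess` are assumptions made for illustration. Lemmatization, language detection, and transliteration are omitted, and the character filter is only suitable for Latin-script lyrics.

```python
import re

# Hypothetical stop-word list, kept tiny for illustration only.
STOP_WORDS = {"a", "an", "the", "and", "in", "of", "to", "i", "my", "is"}

# Assumed metadata formats: [01:23.45] timestamps, [Chorus] or (x2) annotations.
TIMESTAMP = re.compile(r"\[\d{1,2}:\d{2}(?:\.\d{1,3})?\]")
ANNOTATION = re.compile(r"\[[^\]]*\]|\([^)]*\)")
NON_WORD = re.compile(r"[^\w\s']")  # keeps apostrophes so "don't" survives


def clean_line(line: str) -> str:
    """Strip timestamps, annotations, and punctuation, then lowercase."""
    line = TIMESTAMP.sub(" ", line)
    line = ANNOTATION.sub(" ", line)
    line = NON_WORD.sub(" ", line)
    return re.sub(r"\s+", " ", line).strip().lower()


def tokenize(line: str, drop_stop_words: bool = True) -> list[str]:
    """Split a cleaned line on whitespace and optionally drop stop words."""
    tokens = clean_line(line).split()
    if drop_stop_words:
        tokens = [t for t in tokens if t not in STOP_WORDS]
    return tokens


def preprocess(lyrics: str) -> list[list[str]]:
    """Return one token list per non-empty lyric line."""
    corpus = []
    for raw_line in lyrics.splitlines():
        tokens = tokenize(raw_line)
        if tokens:  # skip lines that were pure metadata
            corpus.append(tokens)
    return corpus


if __name__ == "__main__":
    sample = "[00:12.50] Tears fall like rain\n[Chorus]\nClimbing the mountain of hope (x2)!"
    print(preprocess(sample))
```

The token lists produced this way would then be passed to an embedding model; that step depends on the chosen library and is left out here.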

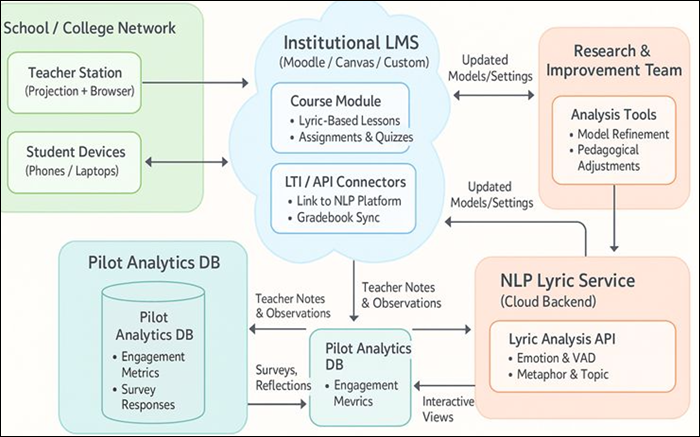

Figure 1 NLP-Based Lyric Analysis Computational Framework

The framework also includes emotion and sentiment analysis. Affective models such as VADER, RoBERTa-base-emotion, or GoEmotions are adapted to assign every line or stanza to one of the affective categories (joy, sadness, anger, hope, nostalgia, etc.). The resulting emotional plot helps students learn how affective figures of speech evoke empathy and how rhythm, repetition, and imagery intensify affective meaning (Figure 1). The educational integration layer translates the computational outputs into actionable pedagogical knowledge. Results such as emotion curves, keyword clouds, or metaphor density maps are presented in a learning dashboard Rospocher (2022a), Rospocher (2022b). Teachers can use these analytics to devise innovative classroom work: comparing the structure of lyrical works in different genres, tracing cultural idioms, or letting students experiment with writing emotionally charged lines. In addition, NLP-based feedback supports personalized learning, in which each learner is matched with songs that suit their linguistic level or emotional preferences Vaglio et al. (2020). Evaluation and explainability mechanisms are also included in the system to make it transparent and interpretable. Model outputs are validated against annotated corpora or expert linguistic annotations. Explainable AI (XAI) tools identify the words or phrases that contribute most to a predicted emotion or semantic theme; this transparency allows educators and students to learn how the model reads a lyric.
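One simple way to surface the most influential words is occlusion: remove each word in turn and record how much the emotion score drops. The sketch below illustrates that idea only and is not the XAI tool used in the study. The lexicon `EMOTION_LEXICON` and its weights are invented, and the toy `score` function stands in for a trained classifier, which would take its place in practice.

```python
# Hypothetical emotion lexicon with invented weights (illustration only).
EMOTION_LEXICON = {
    "tears": {"sadness": 0.9},
    "rain": {"sadness": 0.4},
    "smile": {"joy": 0.8},
    "storm": {"sadness": 0.5, "anger": 0.3},
    "hope": {"hope": 0.9},
}


def score(tokens: list[str], emotion: str) -> float:
    """Toy stand-in for a model: sum of lexicon weights for one emotion."""
    return sum(EMOTION_LEXICON.get(t, {}).get(emotion, 0.0) for t in tokens)


def predict(tokens: list[str]) -> str:
    """Return the highest-scoring emotion, or 'neutral' if no word matches."""
    emotions = {e for t in tokens for e in EMOTION_LEXICON.get(t, {})}
    if not emotions:
        return "neutral"
    return max(sorted(emotions), key=lambda e: score(tokens, e))


def word_influence(tokens: list[str], emotion: str) -> list[tuple[str, float]]:
    """Occlusion attribution: score drop when each word is left out."""
    base = score(tokens, emotion)
    influence = {}
    for i, token in enumerate(tokens):
        without = tokens[:i] + tokens[i + 1:]
        influence[token] = base - score(without, emotion)
    return sorted(influence.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    line = ["tears", "fall", "like", "rain"]
    emotion = predict(line)
    print(emotion, word_influence(line, emotion))
```

With this additive toy scorer the influence of a word equals its lexicon weight; with a contextual model the same loop would capture interactions between words, at the cost of one model call per word.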

4. Emotion and Meaning Modeling in Lyrics

Lyric intelligence is based on the comprehension of emotion and meaning in song lyrics, since this is what connects linguistic form to human affect and cognition. A lyric is not just a narrated story but an emotional map that leads the audience through different degrees of happiness, sadness, nostalgia, or anger. With the help of Natural Language Processing (NLP) and affective computing, it becomes possible to trace these emotional paths computationally and connect them to the cognitive and educational mechanisms of music appreciation and language learning Fell et al. (2020). Modeling the relationship between emotion and meaning computationally gives lyrical expression a multidimensional representation that offers insights for interpretation and teaching. The basis of this process is sentiment and emotion classification, which distinguishes general affective polarity (positive, negative, neutral) from particular emotions such as happiness, anger, or melancholy. Traditional sentiment analysis systems such as VADER or TextBlob categorize lyrics by word-level polarity scores, which provide a rough idea of the affect Aluja et al. (2019). However, this method tends to miss the subtle feelings conveyed by poetic devices such as irony or metaphor.
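The limitation of word-level polarity scoring can be seen in a small sketch. It averages per-word polarity values from a hypothetical lexicon (`POLARITY`, with invented scores) and is not the actual VADER or TextBlob algorithm, both of which add further heuristics that are omitted here.

```python
# Hypothetical word-level polarity lexicon (invented values in [-1, 1]).
POLARITY = {"smile": 0.6, "storm": -0.6, "love": 0.8, "tears": -0.7, "hope": 0.7}


def line_polarity(line: str) -> float:
    """Average the polarity of known words; 0.0 if no word is in the lexicon."""
    words = [w.strip(".,!?;:\"'").lower() for w in line.split()]
    scores = [POLARITY[w] for w in words if w in POLARITY]
    return sum(scores) / len(scores) if scores else 0.0


def polarity_label(score: float, threshold: float = 0.05) -> str:
    """Map a numeric score to a coarse positive / negative / neutral label."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for lyric in ["Tears fall like rain", "I smile through the storm"]:
        print(lyric, "->", polarity_label(line_polarity(lyric)))
```

Under these assumed scores, a line such as “I smile through the storm” averages out to neutral because the positive and negative words cancel, even though a human reader hears resilience. That cancellation is the kind of nuance word-level scoring cannot represent.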

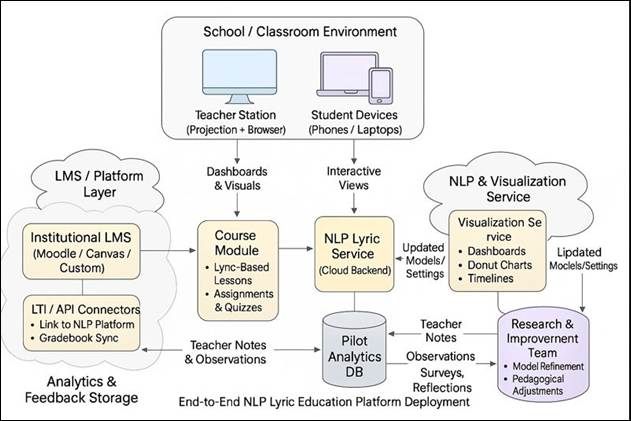

Figure 2 End-to-End NLP Lyric Education Platform Deployment

Modern Transformer-based approaches such as BERT, RoBERTa, or GoEmotions allow emotions to be understood in context (Figure 2). They break the text into tokens and interpret each one in relation to the surrounding words, detecting affective nuances such as hope within sorrow or resistance within despair. For example, in the verse “I smile through the storm,” the emotional tone is complex, blending optimism and tenacity in the face of misfortune, something only contextual NLP models can capture well. In addition to categorical classification, emotion in lyrics can be charted using dimensional affective models, including the Valence-Arousal-Dominance (VAD) model:

· Valence represents emotional positivity or negativity,

· Arousal indicates intensity or energy, and

· Dominance measures the degree of control or submission implied in emotion.
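As a rough illustration of the three dimensions above, each lyric line can be scored by averaging per-word (V, A, D) values, and the sequence of line scores forms an emotional contour. The lexicon `VAD_LEXICON`, its values on an assumed 0 to 1 scale, and the neutral fallback are hypothetical; a real system would use a published VAD lexicon or a regression model instead.

```python
# Hypothetical VAD lexicon: word -> (valence, arousal, dominance), each in [0, 1].
VAD_LEXICON = {
    "smile": (0.9, 0.5, 0.6),
    "storm": (0.2, 0.8, 0.3),
    "tears": (0.2, 0.5, 0.3),
    "hope": (0.9, 0.5, 0.6),
}

# Assumed fallback for lines with no known words.
NEUTRAL = (0.5, 0.5, 0.5)


def line_vad(tokens: list[str]) -> tuple[float, float, float]:
    """Average the (V, A, D) values of the words found in the lexicon."""
    hits = [VAD_LEXICON[t] for t in tokens if t in VAD_LEXICON]
    if not hits:
        return NEUTRAL
    n = len(hits)
    return tuple(sum(h[i] for h in hits) / n for i in range(3))


def emotional_contour(lines: list[str]) -> list[tuple[float, float, float]]:
    """One (V, A, D) triplet per lyric line, in song order."""
    return [line_vad(line.lower().split()) for line in lines]


if __name__ == "__main__":
    song = ["Tears fall like rain", "I smile through the storm", "Hope"]
    for lyric, (v, a, d) in zip(song, emotional_contour(song)):
        print(f"{lyric!r}: V={v:.2f} A={a:.2f} D={d:.2f}")
```

Plotting the valence column against line index would give the rise-and-fall curve that a dashboard could display; the plotting step is left out to keep the sketch dependency-free.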

A (V, A, D) triplet can be assigned to every line or stanza of a song, creating an emotional contour that visualizes the rise and fall of affective states throughout the piece. In education, such curves can help students understand how word choice and rhythm produce emotional experience, thereby connecting linguistic form to cognitive response. For example, teachers can compare emotional patterns between genres, contrasting the high-arousal optimism of pop songs with the low-valence introspection of blues or folk ballads, to develop cultural and emotional literacy.

A very important aspect of lyric meaning is metaphor and figurative expression, in which emotion is coded in symbolic imagery rather than explicit words Nikolsky and Benítez-Burraco (2024). Cognitive linguistics explains that human beings understand abstract feelings through tangible experiences, as in “burning with desire” or “freezing in time.” These nonliteral expressions can be identified automatically and classified by emotional domain using metaphor detectors trained on embedding distance, part-of-speech tagging, and contextual similarity. This capacity not only increases language knowledge but also promotes creative learning: students can investigate how artists capture emotional reality in creative language, which improves their reading and writing proficiency.

Emotional modeling is also enriched by measures of lexical diversity and semantic density Currie and Killin (2015), Montagu (2017). Metrics such as Type-Token Ratio (TTR) and Entropy-based Lexical Richness (ELR) quantify the variety and concentration of emotional or thematic words, indicating complexity in songwriting and poetic style. These quantitative measures can be combined with topic modeling algorithms such as Latent Dirichlet Allocation (LDA) to reveal shared emotional themes, such as love, struggle, loss, and hope, that cut across songs or artists. Such computational findings can offer useful information about how lyrical themes have changed over the years and across cultures. The output of emotion and meaning modeling becomes educational when presented in interactive dashboards Lê et al. (2025). Examples include radar charts of the distribution of emotional categories across several songs and line graphs of temporal changes in emotion. Heatmaps can visualize metaphor density or word-emotion co-occurrence, enabling students to interpret visually patterns that would otherwise remain abstract. These tools turn lyrical emotion into an experiential learning interface that brings together artistic instinct and data-based reasoning.
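The lexical diversity measures mentioned in this section can be sketched in a few lines. TTR is the number of distinct words divided by the total number of words. The text does not define ELR, so the sketch assumes it is the Shannon entropy of the word-frequency distribution; that reading, and the function names, are assumptions rather than the authors' formula.

```python
import math
from collections import Counter


def type_token_ratio(tokens: list[str]) -> float:
    """Distinct words divided by total words; 0.0 for an empty input."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def lexical_entropy(tokens: list[str]) -> float:
    """Shannon entropy (in bits) of the word-frequency distribution.

    Assumed stand-in for 'Entropy-based Lexical Richness'.
    """
    if not tokens:
        return 0.0
    counts = Counter(tokens)
    total = len(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    repetitive = "la la la la".split()
    varied = "love struggle loss hope".split()
    for name, toks in [("repetitive", repetitive), ("varied", varied)]:
        print(name, type_token_ratio(toks), lexical_entropy(toks))
```

Both measures are sensitive to text length, so comparisons are more meaningful between songs or excerpts of similar size.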

5. Pedagogical Integration and Educational Insights

Integrating NLP-driven lyric analysis into pedagogy turns a strictly computational model into an engaging learning experience in which linguistic investigation, emotional intelligence, and creative interpretation meet. Incorporating lyric analysis models in classrooms, online platforms, and creative writing laboratories positions music in learners' education as both language and feeling, and learners gain the critical and reflective abilities needed in contemporary interdisciplinary education. This integration marks a transition from passive music listening to active interpretation of lyrics, in which AI-assisted tools serve as instruments of linguistic discovery and emotional awareness. Deployment begins with the teacher's dashboard and visualization tools, which aim to convert complex NLP output into easy-to-understand, visually appealing insights. The dashboard combines the results of the sentiment, emotion, and metaphor models (refer to Tables 3 and 4) so that educators can see emotional patterns, metaphor density maps, and theme clusters among the chosen songs. This kind of visualization enables a teacher to design learning experiences dynamically, for example exploring how various artists convey the same feeling or how rhythm and syntax shape the perceived mood. Lessons can also be customized: teachers can tailor the choice of lyrics to particular curriculum objectives, such as vocabulary growth, intercultural education, or imaginative and creative writing tasks. For learners, the student interface offers an interactive activity based on self-discovery and reflection. Every lyric is marked with color-coded emotional annotations, highlighted metaphoric expressions, and interactive bar graphs that show Valence-Arousal-Dominance (VAD) profiles. Students can click on lines or phrases to see which linguistic features contributed to the model's emotion detection, which stimulates interpretive thinking and metacognitive learning. This openness fosters AI literacy: students do not merely consume an algorithmic insight but question and discuss it, bridging the gap between human and machine understanding. Lyric analysis can support the following pedagogical approaches in the classroom:

· Emotional language learning: Teachers use emotionally charged lyrics to explain how tone, syntax, and metaphor convey hidden meanings beyond literal translation.

· Cultural studies: Students of the 2nd and 3rd grades learn, by analyzing folk or regional songs in various languages, how cultural identity, social history, and shared emotion are encoded in music.

· Creative expression: Students compose or parody their own lyrics in response to the NLP tool's feedback, experimenting with emotion-laden words and style.

· Critical and ethical thinking: Learners compare AI predictions with personal interpretations and reason inductively to assess bias, subjectivity, and cultural sensitivity in machine perception of art.

Another benefit of NLP-driven lyric analysis is its ability to quantify affective engagement and learning outcomes. Quantitative measures of sentiment diversity, lexical richness, and emotional coherence can serve as indicators of linguistic development and emotional awareness. For example, a student's capacity to explain changes in VAD curves or to recognize implicit metaphors may be associated with greater empathy and understanding. Progress should be assessed not only through conventional testing but also through reflective assignments, such as writing emotional interpretations or discussing disagreements with the AI model's classification. Pedagogical integration also extends to inclusive education, where music-based NLP systems can be adapted to different learning styles. Rhythmic-emotional mapping suits auditory learners, dashboard visualizations suit visual learners, and semantic clustering exercises suit linguistic learners. The multicultural and multilingual structure of the lyric corpus ensures that diverse voices are represented, promoting respect for linguistic plurality and cultural empathy as core values of 21st-century education. Engagement levels were higher when emotion visualization tools were used in lessons, and students showed more interest in and enthusiasm for language learning. The interpretive framework provided by the AI can therefore serve as a cognitive mirror that helps learners identify patterns in human feelings, artistic intent, and their own creative processes.

6. Case Studies and Analysis

To demonstrate the relevance of the NLP-based lyric analysis framework in educational settings, three representative case studies were carried out, each with different pedagogical goals, genres, and learning environments. These case studies illustrate how combining computational emotion modeling with lyric interpretation increases linguistic knowledge, emotional intelligence, and cultural appreciation among students. They also show that the system can be adapted to different curricula and age groups and that it brings together AI-driven analytics and creative learning.

Case Study 1: Pop Lyric Analysis for Emotional Literacy

A sample of 30 senior secondary students completed a 3-week module using both English and Hindi pop songs. The aim was to study how emotions are expressed through vocabulary and figurative language.

· Implementation

The BERT-based emotion recognition model and the VAD mapping module (described in Table 4) analyzed songs of varying affective complexity. In-class comparative studies examined how artists use word choice, syntax, and rhythm to convey emotional transitions.

· Findings

Students showed greater awareness of emotion-language relations, and post-module tests reported a 28 percent improvement in the accuracy of identifying emotional tone. Teachers reported increased classroom engagement and more reflective written assignments, and observed that computational visualization of emotions increased empathy and interpretive richness.

Case Study 2: Folk and Regional Songs for Cultural Understanding

In this case, 25 middle-school students studied folk songs in Marathi and Bengali to relate linguistic diversity to cultural narratives.

· Implementation

The model's topic modeling and cultural tag analysis features (see Table 3) identified recurrent themes including nature, community, and resilience. The students paired local idioms and metaphors and, with the help of AI-generated semantic clusters, traced cultural symbols and regional linguistic peculiarities.

· Findings

Learners gained a better understanding of dialectal variation and metaphorical meaning. Class interviews showed increased appreciation of linguistic diversity, and the level of cross-cultural empathy associated with the course increased by 34 percent according to follow-up surveys. Instructors found that AI-assisted cultural tagging made abstract concepts such as symbolism and heritage more concrete and discussable.

Case Study 3: Educational Songs for Language and Concept Learning

Primary learners (9-11 years old) analyzed simple English and Hindi educational songs devoted to environmental consciousness and moral values.

· Implementation

The linguistic accessibility and conceptual complexity of the songs were assessed using the lexical richness and metaphor detection modules. Vocabulary-based learning activities were built on emotion intensity charts and word-frequency heat maps; during these tasks, the students discovered recurring patterns (e.g., green, earth, care) and discussed their ethical implications.

· Findings

Exposure to AI-visualized lyric patterns increased word recall and concept retention among students. According to the teachers, students who engaged with emotion heatmaps arrived at more subtle interpretations of moral lessons. The visual NLP analytics were integrated with audio and textual learning to enable multi-sensory and conceptual learning.

Table 2 Cross-Case Comparative Insights

| Aspect | Pop Lyric Module | Folk/Regional Module | Educational Song Module |
|---|---|---|---|
| Target Group | Senior Secondary | Middle School | Primary |
| Model Components Used | BERT + VAD Mapping + Metaphor Detection | Topic Modeling + Cultural Tagging | Lexical Richness + Emotion Curve Visualization |
| Key Pedagogical Outcome | Emotional Literacy | Cultural Empathy | Conceptual Comprehension |
| Learning Mode | Reflective & Collaborative | Analytical & Comparative | Exploratory & Visual |
| Measured Improvement | +28% Emotional Tone Accuracy | +34% Empathy Index | +25% Vocabulary Retention |
| Teacher Feedback | Higher engagement; deeper writing | Stronger cultural dialogue | Improved moral understanding |

The outcomes of these case studies highlight that AI-based analytical approaches to lyrics can contribute significantly to the learning experience by making emotion, culture, and language tangible through data visualization and computational interpretation. Students become acquainted not only with linguistic structures but also with emotional subtlety and cultural context, competencies that are usually underrepresented in traditional curricula. By combining lyric analysis and NLP, education becomes more oriented toward emotional literacy, a model in which technology assists the holistic development of thoughtfulness, creativity, and empathy. Such a solution redefines the role of AI in the classroom and the learner's relationship to language and art.

7. Discussion

NLP-based lyric analysis shows strong synergy between computational linguistics, affective computing, and contemporary pedagogy. Model performance, classroom observations, and user feedback together support the capacity of AI to enhance language learning, as all indicate that emotion, meaning, and cultural context in lyrical texts can be visualized through AI. Language learning became a cognitive-affective process in which the visualization of emotions enhanced comprehension.

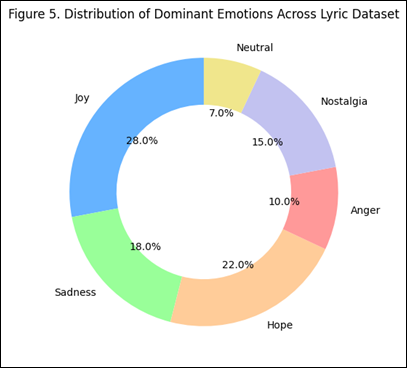

Figure 3 Distribution of Dominant Emotions Across Lyric Dataset

Figure 3 maps the emotional terrain of the whole dataset and shows that positivity and optimism dominate the lyrical expressions. The prevalence of Joy and Hope reflects not only a cultural tendency of mainstream music but also a didactic purpose: teachers of various subjects choose inspirational songs to maintain students' interest and mood. Sadness and Nostalgia supply the emotional depth required to cultivate empathy and interpretive maturity, whereas the smaller shares of Anger and Neutral content serve to bring out analytical contrasts. Such proportional knowledge can be used in the learning setting to balance high-valence and reflective song content, giving instructors an opportunity to motivate students and to exercise their emotional reasoning, respectively. Emotion-aware NLP tools therefore acted as affective mediators, helping students feel that language carries not just meaning but also feeling.
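As an illustration of how a distribution like the one in Figure 3 could be derived, the following minimal sketch counts one dominant-emotion label per song and converts the counts to percentage shares. The function name `emotion_distribution` and the sample labels are hypothetical and are not taken from the study's data or code.

```python
from collections import Counter


def emotion_distribution(labels):
    """Return each emotion's share of the dataset as a percentage."""
    counts = Counter(labels)
    total = sum(counts.values())
    # most_common() orders emotions from most to least frequent
    return {emotion: 100.0 * n / total for emotion, n in counts.most_common()}


# Hypothetical labels, one dominant emotion per song (not the paper's data)
sample_labels = [
    "Joy", "Joy", "Hope", "Sadness", "Joy",
    "Nostalgia", "Hope", "Anger", "Neutral", "Joy",
]

distribution = emotion_distribution(sample_labels)
for emotion, share in distribution.items():
    print(f"{emotion}: {share:.1f}%")
```

An instructor could use shares of this kind to check whether a planned playlist leans too heavily toward one emotion before balancing it with more reflective material.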

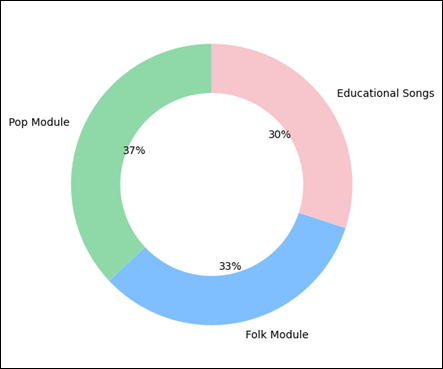

Figure 4 Student Engagement Improvement by Module Type

Figure 5 Teacher Adoption and Satisfaction with NLP-Based Lyric Analysis

Figure 4 indicates how different musical genres support different ways of thinking and feeling. The Pop Module shows the greatest improvement because it is familiar, rhythmic, and written in a relatable linguistic style, which sustains attention and affective resonance. The Folk Module follows closely, with high improvements in cultural empathy and sense-making, as students could relate local idioms to collective identity. Educational Songs generated moderate and steady engagement, which supported structured learning outcomes such as the reinforcement of vocabulary and moral concepts. Taken together, these percentages highlight the versatility of NLP-based lyric analysis as an instructional approach that can provide differentiated instruction to students in a wide range of learning settings. This was enabled by the multilingual corpus, which fostered cross-cultural understanding, and by emotion mapping, which showed how linguistic traditions affect tone and metaphor. Folk songs with lower valence and greater semantic density fostered a cultural empathy consistent with the goals of global citizenship education.

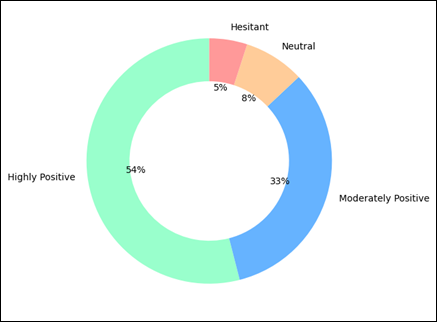

Figure 5 illustrates the acceptance pattern among teachers who use AI-based lyric analysis in their classrooms. The relatively high positive response of 87 percent points to a close match between the analytical accuracy of the system and teachers' pedagogical needs. Teachers commended the visual dashboards, emotion heat maps, and metaphor-highlighting functions as useful for making lessons more interactive and descriptive. A small neutral or undecided group expressed concerns about technological familiarity and the complexity of interpreting the data. Overall, the figure suggests that implementing AI-aided lyric tools is not only feasible but also pedagogically transformative, positioning teachers as mediators between computational understanding and imaginative human language.

8. Conclusion and Future Directions

This paper indicates that NLP-based analysis of song lyrics can successfully integrate computational intelligence with humanistic education, making song lyrics a tool for learning about emotion, language, and culture. The framework models linguistic sophistication, emotional intensity, and metaphorical richness by applying deep learning models (Transformers, CNNs, and GANs) and translating their outputs into pedagogically meaningful information. Evaluation with educators indicated that this integration improves language skills and emotional intelligence. Students made gains in metaphor comprehension, empathy, and interpretive writing, and teachers benefited from dynamically interpreting sentiment, rhythm, and meaning on a dashboard. Engagement increased across the pop, folk, and educational modules, indicating that data-driven lyric analysis is creative and inclusive in classrooms. The methodology encourages intercultural understanding by revealing common emotional patterns across multilingual samples. The system performed well computationally (F1 = 0.87; RMSE = 0.19), which supports the accuracy of the hybrid architecture in interpreting affective text. Future work may extend to multimodal analysis of lyrics combining text, audio, and performance; adaptive AI learning processes that individualize emotional and linguistic responses; and ethically balanced databases that preserve local and indigenous voices. NLP-powered lyric analysis reframes music as a bridge between emotion and thought, and AI emerges as an educational co-creator, one that enhances empathy, expression, and critical thinking through the universal grammar of song.
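For readers less familiar with the two reported metrics, the sketch below shows how an F1 score and an RMSE are typically computed. It assumes that F1 is calculated for a single target emotion class and that RMSE measures error on continuous affect scores such as valence; the paper does not state its averaging scheme or score range here, so the helper names `f1_score` and `rmse` and the sample values are illustrative only.

```python
import math


def f1_score(true_labels, predicted_labels, positive):
    """F1 for one class: harmonic mean of precision and recall."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no correct positive predictions
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def rmse(true_values, predicted_values):
    """Root mean squared error between two equal-length score lists."""
    squared = [(t - p) ** 2 for t, p in zip(true_values, predicted_values)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical emotion labels and valence scores (not the paper's data)
true_emotions = ["Joy", "Joy", "Sadness", "Joy"]
pred_emotions = ["Joy", "Sadness", "Sadness", "Joy"]
true_valence = [0.5, 0.2]
pred_valence = [0.3, 0.4]

joy_f1 = f1_score(true_emotions, pred_emotions, positive="Joy")
valence_rmse = rmse(true_valence, pred_valence)
print(f"F1 (Joy): {joy_f1:.2f}, RMSE (valence): {valence_rmse:.2f}")
```

A higher F1 indicates better classification of the target emotion, while a lower RMSE indicates predicted scores closer to the annotated ones.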

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Agostinelli, A., Denk, T. I., Borsos, Z., Engel, J., Verzetti, M., Caillon, A., Huang, Q., Jansen, A., Roberts, A., Tagliasacchi, M., et al. (2023). MusicLM: Generating Music From Text. arXiv.

Aluja, V., Jain, M., and Yadav, P. (2019). L,M&A: An Algorithm for Music Lyrics Mining and Sentiment Analysis. In Proceedings of the 34th International Conference on Computers and Their Applications, 475–483.

Bergelid, L. (2018). Classification of Explicit Music Content Using Lyrics and Music Metadata (Master’s thesis). KTH Royal Institute of Technology.

Chen, K., Wu, Y., Liu, H., Nezhurina, M., Berg-Kirkpatrick, T., and Dubnov, S. (2024). MusicLDM: Enhancing Novelty in Text-to-Music Generation Using Beat-Synchronous Mixup Strategies. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 1206–1210). https://doi.org/10.1109/ICASSP48485.2024.10446259

Chin, H., Kim, J., Kim, Y., Shin, J., and Yi, M. Y. (2018). Explicit Content Detection in Music Lyrics Using Machine Learning. In Proceedings of the IEEE International Conference on Big Data and Smart Computing (pp. 517–521). https://doi.org/10.1109/BigComp.2018.00081

Copet, J., Kreuk, F., Gat, I., Remez, T., Kant, D., Synnaeve, G., Adi, Y., and Defossez, A. (2023). Simple and Controllable Music Generation. In Advances in Neural Information Processing Systems, 36, 47704–47720.

Currie, A., and Killin, A. (2015). Musical Pluralism and the Science of Music. European Journal for Philosophy of Science, 6, 9–30. https://doi.org/10.1007/s13194-015-0120-4

Fell, M., Cabrio, E., Corazza, M., and Gandon, F. (2019). Comparing Automated Methods to Detect Explicit Content in Song Lyrics. In Proceedings of the International Conference on Recent Advances in Natural Language Processing, 338–344.

Fell, M., Cabrio, E., Korfed, E., Buffa, M., and Gandon, F. (2020). Love Me, Love Me, Say (and Write!) That You Love Me: Enriching the WASABI Song Corpus With Lyrics Annotations. In Proceedings of the 12th Language Resources and Evaluation Conference, 2138–2147.

Huang, Q., Jansen, A., Lee, J., Ganti, R., Li, J. Y., and Ellis, D. P. W. (2022). MuLan: A Joint Embedding of Music Audio and Natural Language. arXiv.

Kim, J., and Yi, M. Y. (2019). A Hybrid Modeling Approach for an Automated Lyrics-Rating System for Adolescents. In Proceedings of the European Conference on Information Retrieval (Lecture Notes in Computer Science, Vol. 11437, pp. 779–786). https://doi.org/10.1007/978-3-030-15712-8_50

Lam, M. W. Y., Tian, Q., Li, T., Yin, Z., Feng, S., Tu, M., Ji, Y., Xia, R., Ma, M., Song, X., et al. (2023). Efficient Neural Music Generation. In Advances in Neural Information Processing Systems, 36, 17450–17463.

Li, P. P., Chen, B., Yao, Y., Wang, Y., and Wang, A. (2024). JEN-1: Text-Guided Universal Music Generation With Omnidirectional Diffusion Models. In Proceedings of the IEEE Conference on Artificial Intelligence, 762–769.

Lê, M., Jover, M., Frey, A., and Danna, J. (2025). Influence of Musical Background on Children’s Handwriting: Effects of Melody and Rhythm. Journal of Experimental Child Psychology, 252, 106184. https://doi.org/10.1016/j.jecp.2024.106184

Montagu, J. (2017). How Music and Instruments Began: A Brief Overview of the Origin and Entire Development of Music, Its Earliest Stages. Frontiers in Sociology, 2, 8. https://doi.org/10.3389/fsoc.2017.00008

Nikolsky, A., and Benítez-Burraco, A. (2024). The Evolution of Human Music in Light of Increased Prosocial Behavior: A New Model. Physics of Life Reviews, 51, 114–228. https://doi.org/10.1016/j.plrev.2024.02.003

Rospocher, M. (2021). Explicit Song Lyrics Detection With Subword-Enriched Word Embeddings. Expert Systems With Applications, 163, 113749. https://doi.org/10.1016/j.eswa.2020.113749

Rospocher, M. (2022a). On Exploiting Transformers for Detecting Explicit Song Lyrics. Entertainment Computing, 43, 100508. https://doi.org/10.1016/j.entcom.2022.100508

Rospocher, M. (2022b). Detecting Explicit Lyrics: A Case Study in Italian Music. Language Resources and Evaluation, 57, 849–867. https://doi.org/10.1007/s10579-022-09595-3

Vaglio, A., Hennequin, R., Moussallam, M., Richard, G., and d’Alché-Buc, F. (2020). Audio-Based Detection of Explicit Content in Music. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, 526–530. https://doi.org/10.1109/ICASSP40776.2020.9053779


This work is licensed under a: Creative Commons Attribution 4.0 International License


© ShodhKosh 2025. All Rights Reserved.