ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472

Reinventing Digital Illustration with Generative AI Tools

Sonia Riyat 1, Jay Gandhi 2, Amit Kumar 3, Kaberi Das 4, Abhijeet Panigra 5, D. Jennifer 6, Omkar Mahesh Manav 7

1 Professor, Department of Management, Arka Jain University, Jamshedpur, Jharkhand, India

2 Assistant Professor, Department of Computer Science and Engineering, Faculty of Engineering and Technology, Parul Institute of Engineering and Technology, Parul University, Vadodara, Gujarat, India

3 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

4 Professor, Department of Computer Applications, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

5 Assistant Professor, School of Business Management, Noida International University, India

6 Assistant Professor, CSE, Panimalar Engineering College, India

7 Department of Engineering, Science and Humanities, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

ABSTRACT

Digital illustration has reached a revolutionary stage with the application of generative artificial intelligence, which integrates human creativity with computational creativity. This paper examines the shift towards generative ecosystems built on models such as GANs, VAEs, and diffusion networks, and how that shift transforms conventional vector and raster workflows. It proposes a dual framework in which illustration is regarded as a multidimensional interaction between human intent and machine-learning inference. To estimate how closely AI-assisted artworks approach human-produced artworks in aesthetic and semantic quality, the paper proposes a Creative Performance Index (CPI), a composite of Fréchet Inception Distance (FID), CLIP Score, and human-based measurements of originality and emotional resonance. Through case studies of applications such as DALL·E, Stable Diffusion, and Midjourney, the paper shows that iterative human feedback has a strong effect on artistic coherence and conceptual richness. The findings indicate that generative AI does not replace the illustrator's agency but expands it, making the creative process an adaptive, symbiotic system of generating ideas, reflecting on them, and refining them.


Received 03 June 2025; Accepted 16 September 2025; Published 28 December 2025

Sonia Riyat, dr.sonia@arkajainuniversity.ac.in

DOI: 10.29121/shodhkosh.v6.i5s.2025.6876

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. Under the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, reprint, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Generative AI, Digital Illustration, Diffusion Models, Human–AI Collaboration, Aesthetic Evaluation, Creative Performance Index (CPI)

1. INTRODUCTION

1.1. Reframing Creativity in the Age of Generative Intelligence

The digital age has profoundly altered creativity, authorship, and expression with the advent of generative artificial intelligence. Illustration has traditionally been defined as the expression of an artist's vision: the direct imprint of human imagination, executed by a skilled hand guided by intuition and experience Donnici et al. (2025). With the rapid emergence of machine learning and neural generative technologies, however, this perception has begun to change. Generative AI applications such as Stable Diffusion, DALL·E, Midjourney, and Firefly have become collaborators rather than mere tools, capable of converting textual, emotional, or conceptual messages into images that can compete with the work of classically trained craftspeople. This development challenges the view of creativity as an exclusively human capacity and recasts it as a dynamic interaction between human thought and computational reasoning Li et al. (2024). At the heart of this change is a novel form of creative intelligence, one that is both algorithmically precise and aesthetically responsive in a human manner. In generative illustration, the artist does not lose a role; the role is redefined. Rather than executing every step manually, the illustrator now orchestrates the process through prompt engineering, semantic control, and feedback. Directing and refining machine-generated output marks a shift from making to co-making, a dialogic art form in which imagination is projected outward through computational agency. The creator of the drawing becomes the designer and supervisor of the generative procedure, shaping meaning through careful manipulation of data, models, and purpose Zhou and Park (2023). Creative action is now a dialogue between inspiration and algorithmic suggestion, in which human and machine each shape the other's contribution.

Philosophically, generative intelligence challenges us to redefine originality, authorship, and authenticity. In classical aesthetics, novelty was tied to the individuality of the human producer. When machines can produce new visuals from representations learned on large datasets, however, the boundary between imitation and innovation blurs. The illustrator's authorship therefore lies not in creating each pixel of the image but in its conceptual organization and expressive orientation. Manual performance of creative work gives way to deliberate composition, in which the artist regulates context, constraints, and narrative purpose to achieve meaning, rather than physically performing the drawing Bhargava et al. (2021). This intellectual shift defines the new ecology of digital art, in which creativity has become an exchange among human motivation, data-driven inference, and model behaviour.

Generative AI also redefines the rhythm

of illustration in terms of time and the manner in which people think. What

would have taken hours of sketching, colouring and trial and error to develop

is now able to be generated in seconds with different versions of iterative

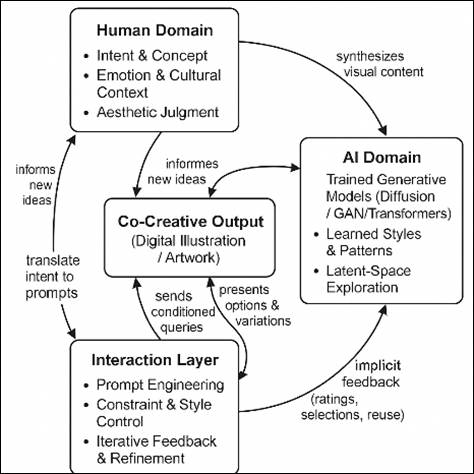

prompts and training of the style as shown in Figure 1 It

promotes democratization of the creative production and allows illustrators,

designers and non-artists to visualize abstract concepts instantly Bartlett and Camba (2024). But the same speed renders the profundity, conscious purpose

and artistic responsibility questionable. The illustrator must then work at the

task of determining the ethical judgment and interpretive authorship wherein

the products created are in correspondence with the cultural, contextual and

personal value rather than reducing art to a novelty machine. Lastly, the

re-invention of digital illustration through generative AI cannot be considered

just a technical breakthrough but more of an epistemic breakthrough into the

technical process of creative knowledge production, sharing, and interpretation

Baudoux (2024). It

positions the illustrator as an imaginative strategy-maker balancing between

creativeness and calculation, emotions and logic, novelty and reorganization.

The generative tools do not purport to any artistic intuition that they are

broadening its reach to a new area where it has not previously been. In this

new paradigm, creativity is interactive dialogue between human and machine

intelligence a type of augmented imagination in which code is brushstroke, data

colour, and prompts poetic stimuli of mental images Avlonitou and Papadaki (2025).

Figure 1

Figure 1 Human–Machine Co-Creation Ecosystem

2. Design Intelligence Framework for AI-Assisted Illustration

The nature of design intelligence when

AI based illustration is developed has changed to more of a humanized

definition that is more of a hybrid be it man-machine reasoning instead of a

man-thinking process. The intelligence in the expanded paradigm is no longer

about producing products that are appealing aesthetically but a blend of

perception, context, adaptation and feedback in a continuous mutually

supporting loop Sáez-Velasco et al. (2024).The Design Intelligence Framework (DIF), of generative

illustration, accounts of the symbiotic association between the synthetic

neural system and the aesthetic feeling of human beings to create expressive,

context-sensitive and significance-driven artworks. The framework transforms AI

into an assistant in the design thinking that will improve the creative

thinking of the illustrator rather than viewing it as a tool. This framework is

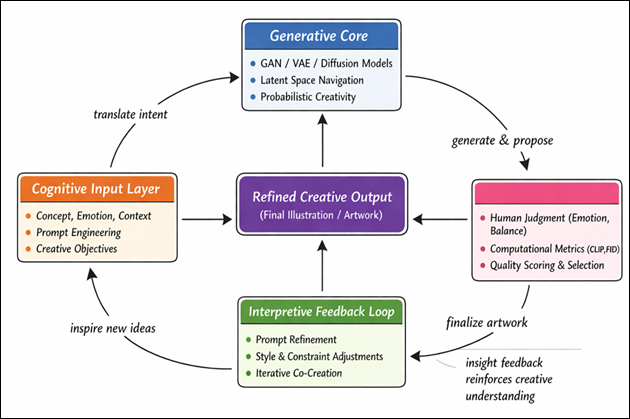

founded on the Cognitive Input Layer, as it demonstrates the conceptual and

emotional something of the artist. Here, creativity is an initial image in the

mind, which is introduced either in a form of text, drawings, or abstract

signification. Human designer contextualizes the goals, contextualization and

constraints of what the system should visualize and the reasons Relmasira et al. (2023). In this stage, the collision of language and imagination

occurs; all the sheer details or metaphors become an information processed by

the AI model as the means to decode and reform in the shape, texture and form.

Most significantly, and most

importantly, above this is the Generative Core comprising of trained AI

architectures GANs, VAEs and diffusion models that are engines of creative

synthesis. This nucleus machine intelligence encodes intent to be visualised; it

prototiles high-dimensional latent spaces in style, color

and shape as mathematical correlations. The system learns that visual coherence

has conceptual depth into which an artist in Figure 2 equally

did. It is interesting to note that this core is not deterministic, it

introduces probabilistic creativity, generating a set of readings which may be

curated or optimized by the human designer, and thus the process is

exploratory, but not prescriptive. The second layer is the Interpretive

Feedback Loop that is the focus of human-AI co-creation. Here the interaction

of the artist with the system output to change the prompts, re-weight the style

effects, or otherwise place other constraints. The AI, on the other hand,

adapts to these types of alterations and, in response to fine-tuning, alters

the pattern of its generation Albar Mansoa

(2024).The feedback cycle is one of the expressions of an adaptive

design conversation, and with each refinement, the understanding between the

artist and the AI will become deeper and deeper. This recursive learning leads

to a mutually empowering intelligence on the part of human beings which humans

learn to be competent in the facilitation and interpretative analysis, and the

system learns to be inventive in its relevance and coherence. Finally, there is

Aesthetic Evaluation Layer that incorporates cognitive, technical and emotional

evaluation. In this step, the equilibrium, symmetry, novelty and emotional

appeal are tested. Artists use visual literacy, and AI can be used to implement

such computational aesthetics principles as CLIP score, indices of color harmony or diversity Rodrigues and Rodrigues (2023). The combination of these evaluations ensures that the

generative process does not merely stand in a position to satisfy human sense,

but even algorithmic integrity.

Figure 2

Figure 2 Design Intelligence Framework for AI-Assisted Illustration
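The Aesthetic Evaluation Layer lists diversity among its computational measures but does not define it. One common choice, assumed here rather than taken from the paper, is the mean pairwise cosine distance between embeddings of a batch of generated candidates (for example, image embeddings from a CLIP-style encoder). The sketch below takes those embeddings as a plain NumPy array; `batch_diversity` is a hypothetical helper name.

```python
import numpy as np


def batch_diversity(embeddings: np.ndarray) -> float:
    """Mean pairwise cosine distance between candidate embeddings (one per row).

    0.0 means every candidate points the same way in embedding space;
    values near 1.0 mean the candidates are roughly orthogonal (very varied).
    """
    n = embeddings.shape[0]
    if n < 2:
        raise ValueError("need at least two candidates to measure diversity")
    # L2-normalise rows so that dot products become cosine similarities
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sims = unit @ unit.T
    # keep only pairs i != j; the diagonal compares each candidate with itself
    off_diagonal = sims[~np.eye(n, dtype=bool)]
    return float(np.mean(1.0 - off_diagonal))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # placeholder embeddings for 8 hypothetical candidates, 512 dimensions each
    candidates = rng.normal(size=(8, 512))
    # near 1.0: random high-dimensional vectors are nearly orthogonal
    print(batch_diversity(candidates))
```

A batch of near-duplicates scores close to 0, so the measure can flag when the Generative Core is collapsing onto a single reading of the prompt.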

3. The Creative Workflow Dialogue Between Human and Machine

The creative process of AI-assisted illustration is an interaction between human will and machine generativity, in which artistic imagination and computational intelligence merge through exploration and refinement. Unlike the traditional workflow, in which the illustrator sketches, colours, and post-processes the image in sequence, AI-based illustration is cyclic, conversational, and adjustable. Each phase invites both the illustrator and the AI to read, respond, and evolve, turning illustration into a give-and-take of meaning-making Ning et al. (2024). This dialogue changes not only how images are shaped but also how artists perceive creativity itself.

The initial phase is conceptual initiation, in which the artist develops an idea or perception and translates it into a semantic or multimodal signal. This step resembles the traditional ideation phase, supplemented by computational semantics Demartini et al. (2024). The artist considers how language, tone, and descriptive detail will affect the model's interpretation. Words such as "impressionistic", "surreal", or "melancholy palette" act as linguistic strokes that guide the AI toward a particular aesthetic region. This stage calls for a new kind of literacy as a form of craftsmanship, in which the artist learns to shape meaning through text, parameters, or reference images.

Once the prompt is processed, the generation-response stage begins. The AI system decodes the semantic input, using a diffusion, GAN, or VAE architecture, to generate several visual candidates. Each output is the product of latent-space inference and probabilistic variation learned from training data Wang and Yang (2024). The artist then acts as curator, evaluating each version for composition, feeling, and correspondence to the idea. This inverts the traditional creative process: the artist no longer originates every mark but judges what the machine proposes, narrowing choices, redefining them, and rethinking them Morales-Chan (2023).

Next comes the interactive refinement loop, the most dynamic part of the human-AI dialogue. Artists steer the system by adjusting prompts, weight variations, or stylistic constraints. In turn, the model's output distribution shifts to balance fidelity and originality. This negotiation produces an emergent, collaborative intelligence that is neither fully human nor fully artificial and that grows with each interaction. Feedback itself becomes generative: every cycle is both a learning experience and an experiment in creation AlGerafi et al. (2023).

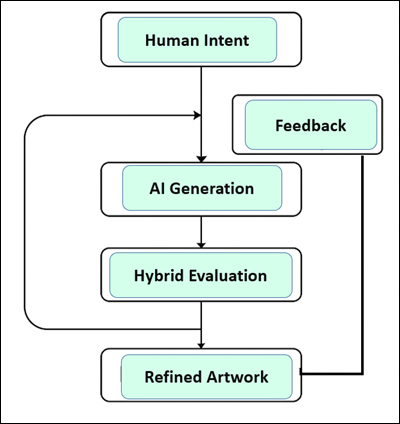


Figure 3 Human–Machine Creative Workflow Loop

In the final stage, aesthetic judgment and synthesis, human sensibility dominates. The artist treats the generated images not merely as products but as ideas: visual re-enactments of how machines perceive and reproduce human imagination. Decisions are driven by emotion, narrative fit, or compositional resonance rather than technical perfection, as shown in Figure 3. In most cases, artists reintroduce manual intervention here, adding new textures, rebalancing tones, or reworking generated passages to bring the human hand back into the algorithmic system. The result is a hybrid artwork that combines human depth with machine-level innovation. Put simply, this workflow transforms the illustrator into a creative interlocutor engaged in interactive dialogue with intelligent systems AlGerafi et al. (2023). It reflects the broader philosophy of symbiotic authorship, in which the artwork is not simply created but emerges from a process of exchange. This process has produced a new form of artistic literacy, founded on knowing how to converse with a machine rather than merely operate it. The workflow is not only more efficient and stylistically diverse; it also marks a major cultural shift in how design intelligence, agency, and authorship are understood in the digital age.
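The workflow described in this section (initiation, generation response, curation, refinement) can be modelled as a loop. The sketch below is a toy under explicit assumptions: `Prompt`, `generate`, `human_score`, and `refine` are hypothetical names, no real image model or human rater is called, and "style" is reduced to a dictionary of named weights. It only shows the control flow, not how any of the tools discussed here work internally.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Prompt:
    """Hypothetical prompt: text plus named style weights (e.g. 'cubist': 0.2)."""
    text: str
    style_weights: dict[str, float] = field(default_factory=dict)


def generate(prompt: Prompt, n: int, rng: random.Random) -> list[dict[str, float]]:
    """Hypothetical stand-in for a text-to-image model.

    Each 'candidate' is the style the image ended up expressing: the
    requested weights plus Gaussian noise (probabilistic variation).
    """
    return [
        {k: w + rng.gauss(0.0, 0.1) for k, w in prompt.style_weights.items()}
        for _ in range(n)
    ]


def human_score(candidate: dict[str, float], target: dict[str, float]) -> float:
    """Hypothetical curator: higher is better, peaking when the candidate hits the target.
    Assumes every key in target also appears in candidate."""
    return -sum((candidate[k] - target[k]) ** 2 for k in target)


def refine(prompt: Prompt, target: dict[str, float], rounds: int = 5,
           n: int = 4, step: float = 0.5, seed: int = 0):
    """Run generate -> curate -> re-weight for a fixed number of rounds."""
    rng = random.Random(seed)
    history = []
    for _ in range(rounds):
        candidates = generate(prompt, n, rng)                         # generation response
        best = max(candidates, key=lambda c: human_score(c, target))  # curation
        history.append(human_score(best, target))
        # Interactive refinement: the artist moves each style weight part of
        # the way toward the intended look before the next round.
        prompt.style_weights = {
            k: w + step * (target.get(k, w) - w)
            for k, w in prompt.style_weights.items()
        }
    return prompt, history


if __name__ == "__main__":
    p = Prompt("dreamlike city", {"cubist": 0.2, "bioluminescent": 0.9})
    p, hist = refine(p, target={"cubist": 0.8, "bioluminescent": 0.6})
    print(p.style_weights)
    print([round(s, 3) for s in hist])
```

Here the re-weighting is done by the simulated artist, not learned by the model; that keeps the sketch honest about where adaptation happens in a typical prompt-based session.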

4. Metrics of Creativity and Aesthetic Evaluation

Measuring creativity in generative illustration requires a hybrid framework that integrates human aesthetic evaluation with computational performance measurements. Because AI-generated art sits at the threshold between analytical computation and visual art, it cannot be evaluated on technical accuracy alone; it must also account for emotional appeal, conceptual coherence, stylistic freshness, and perceptual balance. The Metrics of Creativity and Aesthetic Evaluation Framework is therefore a synthesis of the subjective and the objective, combining computational indicators of image quality with human judgments of meaning and effect.

On the computational side, a variety of quantitative metrics has emerged to assess the fidelity, diversity, and semantic accuracy of AI-generated images. Fréchet Inception Distance (FID) compares the feature statistics of generated images with those of real images; lower values indicate greater realism and consistency. CLIP Score uses the embeddings of CLIP, a contrastively trained text-image model, to measure how well the generated output matches the text prompt, that is, whether the image is a valid interpretation of the artist's idea. Meanwhile, Inception Score (IS) reflects the diversity and clarity of generated samples, and Perceptual Path Length (PPL) measures how smoothly outputs vary along latent-space interpolations. These numerical measures are essential for benchmarking generative models, but they capture only surface-level performance and are often insensitive to emotional presence, let alone symbolic undertones.

To supplement these quantitative measurements, the human aesthetic dimension introduces qualitative measurements grounded in sensory, emotional, and creative response. Artists and audiences judge generative works on novelty, structure, colour composition, storytelling, and emotional appeal. These evaluations are subjective and sensitive to personal, cultural, and contextual factors. In practice, Likert scales are generally used to rate images for visual attractiveness, thematic consistency, and innovation. These responses can then be aggregated into a Human Creativity Index (HCI), a summary of affective response and conceptual relevance that algorithms cannot yet measure. Combining both dimensions yields the Creative Performance Index (CPI), a composite measure that balances algorithmic accuracy and artistic interpretation. CPI can be computed as:

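$$\mathrm{CPI} = \alpha \cdot \mathrm{FID}^{-1} + \beta \cdot \mathrm{CLIP} + \gamma \cdot \mathrm{HCI}$$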

where α, β, and γ are weighting factors for technical realism (FID⁻¹), semantic accuracy (CLIP), and human perception (HCI), respectively. Because FID falls as realism improves, it enters through its reciprocal, so that every term rises with better performance. This composite formula treats creativity as a whole experience, at once aesthetic and calculated.
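The CPI formula can be evaluated directly. The sketch below plugs in the inputs and weights reported later in Table 3 (FID 12.3, CLIP 0.81, HCI 8.5; α = 0.35, β = 0.30, γ = 0.35). The paper does not state how FID⁻¹ and HCI are scaled before weighting, so the hypothetical `cpi` function assumes the raw reciprocal of FID and HCI divided by 10. Under those assumptions the result is about 0.569 rather than the reported 0.88, which suggests a different, unstated normalization; treat the scaling as a parameter to adjust, not as a reproduction of the paper's numbers.

```python
def cpi(fid: float, clip: float, hci: float,
        alpha: float = 0.35, beta: float = 0.30, gamma: float = 0.35,
        hci_scale: float = 10.0) -> float:
    """CPI = alpha * FID^-1 + beta * CLIP + gamma * HCI.

    Assumptions (not specified in the paper): FID enters as its raw
    reciprocal, CLIP is already a similarity in [0, 1], and HCI is
    rescaled from a 1-10 Likert scale by dividing by hci_scale.
    """
    if fid <= 0:
        raise ValueError("FID must be positive to take its reciprocal")
    return alpha * (1.0 / fid) + beta * clip + gamma * (hci / hci_scale)


if __name__ == "__main__":
    # Table 3 inputs with the default (Table 3) weights
    print(round(cpi(fid=12.3, clip=0.81, hci=8.5), 3))  # 0.569
```

With a raw reciprocal, the FID term contributes only about 0.03 here, so CPI is dominated by CLIP and HCI; any normalization that maps FID onto a 0 to 1 range would change that balance considerably.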

Table 1 Hybrid Metrics Framework for Evaluating AI-Generated Illustrations

| Dimension | Metric / Indicator | Measurement Focus | Evaluation Method | Interpretation / Creative Relevance |
|---|---|---|---|---|
| Computational Fidelity | Fréchet Inception Distance (FID) | Measures visual realism by comparing statistical distributions of generated and real images | Numerical score (lower = better fidelity) | Indicates how "natural" or photographically consistent an AI-generated illustration appears |
| Semantic Alignment | CLIP Score | Quantifies correspondence between textual prompt and visual output | Cosine similarity between text and image embeddings | Reflects how accurately the image conveys the artist's described concept or emotion |
| Generative Diversity | Inception Score (IS) | Evaluates variability and clarity of generated samples | Model-based entropy computation | Captures richness of visual output and resistance to mode collapse |
| Structural Coherence | Perceptual Path Length (PPL) | Measures smoothness of latent-space interpolation | Finite-difference perceptual distance along latent interpolations | Represents continuity of form and color across iterative variations |
| Human Perception & Emotion | Human Creativity Index (HCI) | Aggregates subjective ratings of originality, emotional resonance, and aesthetic balance | Expert/user Likert-scale surveys (1–5 or 1–10) | Captures affective and conceptual depth that algorithms cannot quantify |

As Table 1 shows, CPI serves as a balanced, non-partisan benchmark for assessing AI-aided illustration. The computational layer ensures the fidelity and consistency of the evaluated images, while the human-centred layer preserves emotional authenticity and aesthetic value. Together they yield a multidimensional notion of creativity in which emotional sense is neither subordinate nor dominant but co-equal with algorithmic mastery.
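Of the metrics in Table 1, the Inception Score's "model-based entropy computation" is perhaps the least self-explanatory. It is the exponential of the average KL divergence between each image's predicted class distribution p(y|x) and the marginal p(y), so it rises when individual predictions are confident and the set as a whole spans many classes. The sketch assumes the class probabilities (normally produced by an Inception-v3 classifier) are already available as a NumPy array, and it omits the common practice of averaging the score over several splits of the data.

```python
import numpy as np


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS = exp(mean_x KL(p(y|x) || p(y))).

    probs: shape (n_images, n_classes); each row is a classifier's softmax output.
    eps guards against log(0) for classes with zero probability.
    """
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = rng.normal(size=(100, 10))
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    # always between 1 (no confidence or diversity) and 10 (the number of classes)
    print(inception_score(probs))
```

The bounds make the score easy to sanity-check: identical predictions for every image give 1, and confident predictions spread evenly over K classes give K.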

5. Case Studies: Illustrating with Intelligence

Case studies offer the most concrete view of generative AI in digital illustration, showing how computational creativity and human direction converge in actual working processes. They show how conceptual intent is translated into measurable creative performance through the hybrid assessment structure, which combines quantitative (FID, CLIP) and qualitative (HCI) measures into the Creative Performance Index (CPI). A further commonality across all three cases is that illustrators use AI models as assistants rather than as automatic generators, which makes them more creative, productive, and stylistically diverse.

Case 1: Concept-Driven Imagery with DALL·E 3

The first case examines concept-driven generation with DALL·E 3, in which artists built layered prompts, one of which described a dreamlike city painted in cubist geometry and bioluminescent tones of color. The system interpreted these semantic signifiers to create a composition of architectural abstraction and mood. The resulting images achieved a high CLIP score of 0.87, indicating strong text-image coherence.
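Table 1 defines CLIP Score as the cosine similarity between text and image embeddings. A minimal sketch of that computation follows, assuming the prompt and image have already been encoded by a CLIP model into NumPy vectors; the helper names are hypothetical, and no CLIP library is called. The separate `clip_score` helper applies the convention of the published CLIPScore metric (negative similarities clipped to zero, then multiplied by 2.5), so reported values depend on which convention a study adopts; the paper does not say which one produced its figures.

```python
import numpy as np


def clip_similarity(text_emb: np.ndarray, image_emb: np.ndarray) -> float:
    """Cosine similarity between a text embedding and an image embedding."""
    t = text_emb / np.linalg.norm(text_emb)
    v = image_emb / np.linalg.norm(image_emb)
    return float(t @ v)


def clip_score(text_emb: np.ndarray, image_emb: np.ndarray, w: float = 2.5) -> float:
    """CLIPScore-style value: w * max(cosine similarity, 0)."""
    return w * max(clip_similarity(text_emb, image_emb), 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # placeholder vectors standing in for real CLIP text and image embeddings
    text_vec = rng.normal(size=512)
    image_vec = text_vec + rng.normal(scale=0.5, size=512)  # a "well-aligned" image
    print(clip_similarity(text_vec, image_vec), clip_score(text_vec, image_vec))
```

Because both vectors are normalised first, only their direction matters; this is what lets the score compare prompts and images of any length or resolution once they share an embedding space.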

Table 2 Quantitative and Qualitative Improvements Following Refinement

| Evaluation Parameter | Metric Type | Pre-Refinement | Post-Refinement | Interpretation / Observation |
|---|---|---|---|---|
| Fréchet Inception Distance (FID) | Computational | 17.4 | 14.9 | Visual realism improved after prompt re-weighting |
| CLIP Score | Computational | 0.86 | 0.87 | Strong semantic alignment; minimal variance |
| Human Creativity Index (HCI) | Human (1–10) | 6.8 | 8.0 | Refinement produced better aesthetic balance |
| Creative Performance Index (CPI) | Hybrid Composite | 0.68 | 0.83 | Meaningful improvement through human–machine dialogue |

However, human assessors repeatedly noted compositional imbalance, a reminder that high semantic fidelity does not guarantee artistic harmony. After prompt adjustment and manual reworking, the Creative Performance Index rose from 0.68 to 0.83, indicating that iterative human feedback improves structure and narrative richness.
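The HCI values in Table 2 come from Likert ratings, but the paper does not give the aggregation rule. The sketch below assumes the simplest one: equal weight for every criterion and every rater, with the result also divided by the scale maximum, which matches how the text later converts HCI values of 6.9 and 9.1 into 0.69 and 0.91. The function name and the example ratings are hypothetical.

```python
import numpy as np


def human_creativity_index(ratings: np.ndarray, scale_max: int = 10) -> tuple[float, float]:
    """Aggregate Likert ratings into an HCI.

    ratings: shape (n_raters, n_criteria), e.g. originality, emotional
    resonance and aesthetic balance, each on a 1..scale_max scale.
    Returns (HCI on the original scale, HCI divided by scale_max).
    """
    if ratings.min() < 1 or ratings.max() > scale_max:
        raise ValueError("ratings outside the Likert range")
    per_rater = ratings.mean(axis=1)  # equal weight per criterion (assumed)
    hci = float(per_rater.mean())     # equal weight per rater (assumed)
    return hci, hci / scale_max


if __name__ == "__main__":
    ratings = np.array([
        [8, 7, 9],  # hypothetical rater 1: originality, resonance, balance
        [7, 8, 8],  # hypothetical rater 2
    ])
    print(human_creativity_index(ratings))
```

Averaging per rater first keeps each rater's voice equal even if some skip criteria in a fuller implementation; with complete ratings, as here, it gives the same result as a plain grand mean.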

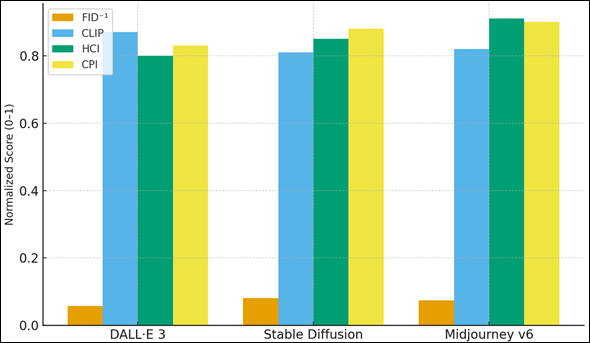


Figure 4 Comparative Creative Performance Across AI Systems

Figure 4 presents a comparative bar graph of three major systems, DALL·E 3, Stable Diffusion, and Midjourney v6, measured on normalized scores of FID⁻¹, CLIP, HCI, and CPI. The visualization indicates that Stable Diffusion performs best in overall technical fidelity (low FID, high CLIP), while Midjourney v6 achieves higher perceptual creativity (HCI ≈ 0.91) and overall performance (CPI ≈ 0.90) than the others. DALL·E 3 is less emotionally resonant but semantically more accurate. The spread of strengths across these metrics indicates that no single system dominates every dimension, supporting the view that creativity in AI-assisted illustration arises from complementary competencies.
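Figure 4 compares systems on "normalized" scores, but the paper does not say how the normalization was done. Min-max scaling across the compared systems, assumed here, is one common choice; for lower-is-better metrics such as FID the scale is flipped so that 1.0 always marks the best system (the paper instead refers to FID⁻¹, which could be computed before scaling). The helper name and the input values below are hypothetical placeholders, not the paper's data.

```python
import numpy as np


def normalise_scores(values: dict[str, float], lower_is_better: bool = False) -> dict[str, float]:
    """Min-max normalise one metric across systems so the best system gets 1.0."""
    names = list(values)
    x = np.array([values[name] for name in names], dtype=float)
    span = x.max() - x.min()
    if span == 0.0:
        # every system has the same score, so none is better than another
        return {name: 1.0 for name in names}
    z = (x - x.min()) / span
    if lower_is_better:
        # FID-style metrics: flip so lower raw values map to higher scores
        z = 1.0 - z
    return {name: float(v) for name, v in zip(names, z)}


if __name__ == "__main__":
    # placeholder FID values for three hypothetical systems
    print(normalise_scores({"system_a": 20.0, "system_b": 15.0, "system_c": 10.0},
                           lower_is_better=True))
    # {'system_a': 0.0, 'system_b': 0.5, 'system_c': 1.0}
```

One caveat of this choice: with only three systems, the best always lands at 1.0 and the worst at 0.0, which can make small raw differences look large in a bar chart.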

Case 2: Style Fusion with Stable Diffusion

The second case studies style emulation and transformation using a Stable Diffusion model fine-tuned on contemporary illustration datasets. The experiment focused on combining Madhubani folk motifs with cyberpunk aesthetics, two visually opposed styles.

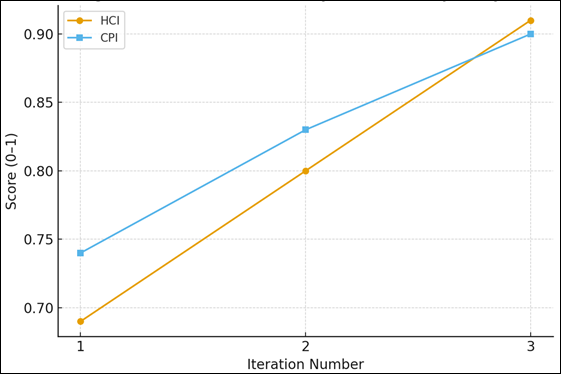


Figure 5 Human Feedback Impact on Creativity (DALL·E 3)

The line graph in Figure 5 compares the pre- and post-refinement phases of DALL·E 3 illustration generation. Both HCI and CPI rise markedly after human-directed prompt correction, with CPI reaching 0.83. The stable CLIP correspondence together with improved human ratings indicates that repeated interaction improves artistic quality without compromising semantic integrity. The figure thus visually supports the hypothesis that human curation is a necessary enhancer of AI-generated creativity.

Table 3 Metric Balance in the Hybrid Style-Fusion Scenario

| Evaluation Parameter | Metric Type | Result | Weight in CPI | Interpretation / Observation |
|---|---|---|---|---|
| FID Score | Computational | 12.3 | α = 0.35 | High fidelity to real-world distribution |
| CLIP Score | Computational | 0.81 | β = 0.30 | Accurate semantic mapping between hybrid descriptors |
| HCI Score | Human (1–10) | 8.5 | γ = 0.35 | Evaluators praised emotional novelty and cultural fusion |
| CPI (αFID⁻¹ + βCLIP + γHCI) | Hybrid | 0.88 | | Strong creative synergy across all evaluation layers |

The model produced striking hybrid compositions, assessed with both algorithmic and perceptual measures. Notably, the outputs with the best FID scores were not necessarily the most intellectually stimulating, which underscores the need for interpretive control in aesthetic judgment.
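Because this observation turns on what FID actually measures, a minimal sketch of the standard computation may help. FID fits a Gaussian to feature vectors of real and generated images and takes the Fréchet distance between the two Gaussians; it rewards statistical similarity to a reference set, which is one reason a low FID need not coincide with the most stimulating outputs. In practice the features are Inception-v3 activations; this sketch assumes they have already been extracted into NumPy arrays and uses random data only to exercise the arithmetic. `frechet_distance` is a hypothetical helper name.

```python
import numpy as np
from scipy import linalg


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two feature sets (rows = samples).

    FID = ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^(1/2))
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    covmean = linalg.sqrtm(cov_a @ cov_b)
    # sqrtm can return tiny imaginary parts from numerical error; drop them
    if np.iscomplexobj(covmean):
        covmean = covmean.real
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a + cov_b - 2.0 * covmean))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(1000, 8))
    fake = rng.normal(loc=0.5, size=(1000, 8))
    # close to 2.0 (a 0.5 mean shift in 8 dimensions); sampling noise adds a little
    print(frechet_distance(real, fake))
```

Note that FID is a property of two image sets, not of a single picture, so per-output comparisons require either a per-image proxy or FID computed over small groups of outputs.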

Case 3: Iterative Narrative Illustration with Midjourney v6

The third case involves iterative storytelling with Midjourney v6 in a series titled Metamorphosis of Memory. Each prompt was built on the previous outputs, forming a chain of transforming visual themes: colour fading into abstraction, form dissolving into emotion. This continuous cycle fostered continuity and consistency across iterations. While CLIP scores remained unchanged (about 0.82), HCI rose to 9.1 through sustained human involvement, as emotional engagement and thematic depth improved.

Table 4 Evolution of Creativity Across Successive Iterations

| Iteration | FID ↓ | CLIP ↑ | HCI (1–10) | CPI Composite | Key Observation |
|---|---|---|---|---|---|
| 1 – Initial Prompt | 15.2 | 0.82 | 6.9 | 0.74 | Early concept lacked structural cohesion |
| 2 – Refined Prompt | 14.0 | 0.82 | 8.0 | 0.83 | Improved narrative and color harmony |
| 3 – Final Synthesis | 13.5 | 0.82 | 9.1 | 0.90 | Achieved expressive storytelling and thematic unity |

A common finding emerges across these cases: generative illustration is a process of aesthetic negotiation in which algorithmic potential is weighed against human sensibility. The interplay between quantitative fidelity and qualitative intuition keeps the creative product from being reduced to mere novelty.

Figure 6

Figure 6 Cultural Fusion and Metric Balance (Stable Diffusion)

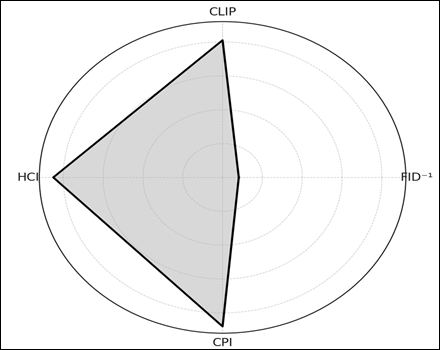

The radar (spider) chart in Figure 6 shows the balance among four measures (FID⁻¹, CLIP, HCI, and CPI) for the Madhubani-cyberpunk fusion case in Stable Diffusion. Its nearly symmetrical shape indicates well-rounded performance, reflecting balanced realism, interpretation, and emotional appeal. The figure highlights Stable Diffusion's ability to achieve high human-rated creativity while maintaining computational accuracy, supporting its usefulness for cross-cultural style synthesis. Generative models can suggest an effectively unlimited range of variations, but it is the artist's critical curation, informed by context, culture, and narrative purpose, that turns this potential into valuable works of art. These case studies suggest that creativity in AI-assisted illustration is neither mechanical nor mystical but relational, developing through a sustained dialogue among perception, computation, and imagination.

Figure 7 is a two-line chart showing how HCI and CPI change across the three iterative prompts of the Metamorphosis of Memory series. The trend shows HCI rising from 0.69 to 0.91 (the Table 4 values divided by 10) and CPI from 0.74 to 0.90. User feedback becomes more productive with each iteration, increasing emotional continuity, compositional structure, and narrative coherence. The figure thus shows how refinement moves the AI from a generator toward a co-creative partner.


Figure 7 Iterative Narrative Improvement (Midjourney v6)

6. Conclusion

The reinvention of digital illustration through generative AI marks a paradigm shift from manual craft to collaborative cognition. Rather than delegitimizing artists' authorship, AI augments it by letting artists pursue broader aesthetic paths through semantic prompting, probabilistic generation, and refinement. The proposed models, the Design Intelligence Framework and the Creative Performance Index (CPI), suggest that creativity in the age of AI can be understood as an ongoing negotiation between human will and algorithmic potential. The combined assessment method shows that quantitative indices such as FID and CLIP secure structural fidelity and semantic alignment, while human-derived indices such as HCI capture the emotional and conceptual authenticity that is central to artistic meaning. The case studies show that the best results come not from autonomous generation but from dialogue between human and machine, in which repeated interaction gives rise to a shared creative intelligence. This meeting of calculation and perception renews the illustrator's role, from executor of images to conductor of experience, and redefines creativity in a paradigm where the machine is not merely an instrument but an artistic partner. As AI models become more context-aware and multimodal, the future of digital illustration will be shaped by symbiotic authorship: a creative practice defined not by the technology per se but by the emerging conversation between human imagination and machine cognition.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Albar Mansoa, P. J. (2024). Artificial Intelligence for Image Generation in Art: How Does It Impact on the Future of Fine Art Students? Encuentros, 20, 145–164.

AlGerafi, M. A., Zhou, Y., Alfadda, H., and Wijaya, T. T. (2023). Understanding the Factors Influencing Higher Education Students’ Intention to Adopt Artificial Intelligence-Based Robots. IEEE Access, 11, 99752–99764. https://doi.org/10.1109/ACCESS.2023.3310109

Avlonitou, C., and Papadaki, E. (2025). AI: An Active and Innovative Tool for Artistic Creation. Arts, 14, 52. https://doi.org/10.3390/arts14030052

Bartlett, K. A., and Camba, J. D. (2024). Generative Artificial Intelligence in Product Design Education: Navigating Concerns of Originality and Ethics. International Journal of Interactive Multimedia and Artificial Intelligence, 8, 55–64. https://doi.org/10.9781/ijimai.2024.02.004

Baudoux, G. (2024). The Benefits and Challenges of AI Image Generators for Architectural Ideation: Study of an Alternative Human–Machine Co-Creation Exchange Based on Sketch Recognition. International Journal of Architectural Computing, 22, 201–215. https://doi.org/10.1177/14780771231208340

Bhargava, R., Williams, D., and D’Ignazio, C. (2021). How Learners Sketch Data Stories. In Proceedings of the 2021 IEEE Visualization Conference (VIS) (pp. 196–200). IEEE.

Demartini, C. G., Sciascia, L., Bosso, A., and Manuri, F. (2024). Artificial Intelligence Bringing Improvements to Adaptive Learning in Education: A Case Study. Sustainability, 16, 1347. https://doi.org/10.3390/su16031347

De Winter, J. C. F., Dodou, D., and Stienen, A. H. A. (2023). ChatGPT in Education: Empowering Educators Through Methods for Recognition and Assessment. Informatics, 10, 87. https://doi.org/10.3390/informatics10040087

Donnici, G., Galiè, G., and Frizziero, L. (2025). Rethinking Sketching: Integrating Hand Drawings, Digital Tools, and AI in Modern Design. Designs, 9, 119. https://doi.org/10.3390/designs9050119

Li, P., Li, B., and Li, Z. (2024). Sketch-to-Architecture: Generative AI-Aided Architectural Design. arXiv. https://arxiv.org/abs/2403.20186

Morales-Chan, M. A. (2023). Explorando el Potencial de ChatGPT: Una Clasificación de Prompts Efectivos para la Enseñanza. Universidad Galileo.

Ning, Y., Zhang, C., Xu, B., Zhou, Y., and Wijaya, T. T. (2024). Teachers’ AI-TPACK: Exploring the Relationship Between Knowledge Elements. Sustainability, 16, 978. https://doi.org/10.3390/su16030978

Relmasira, S. C., Lai, Y. C., and Donaldson, J. P. (2023). Fostering AI Literacy in Elementary Science, Technology, Engineering, Art, and Mathematics (STEAM) Education in the Age of Generative AI. Sustainability, 15, 13595. https://doi.org/10.3390/su151813595

Rodrigues, O. S., and Rodrigues, K. S. (2023). A Inteligência Artificial na Educação: Os Desafios do ChatGPT. Texto Livre, 16, e45997. https://doi.org/10.35699/1983-3652.2023.45997

Sáez-Velasco, S., Alaguero-Rodríguez, M., Delgado-Benito, V., and Rodríguez-Cano, S. (2024). Analysing the Impact of Generative AI in Arts Education: A Cross-Disciplinary Perspective of Educators and Students in Higher Education. Informatics, 11, 37. https://doi.org/10.3390/informatics11020037

Wang, Y., and Yang, S. (2024). Constructing and Testing AI International Legal Education Coupling-Enabling Model. Sustainability, 16, 1524. https://doi.org/10.3390/su16041524

Zhou, Y., and Park, H. J. (2023). An AI-Augmented Multimodal Application for Sketching Out Conceptual Design. International Journal of Architectural Computing, 21, 565–580. https://doi.org/10.1177/14780771231170065


This work is licensed under a Creative Commons Attribution 4.0 International License


© ShodhKosh 2025. All Rights Reserved.