ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


Intelligent Print Quality Control Using CNN Models

Vaibhav Kaushik 1, Kajal Thakuriya 2, Paul Praveen Albert Selvakumar 3, Jatin Khurana 4, Shrushti Deshmukh 5, Saritha S.R. 6

1 Centre of Research Impact and Outcome, Chitkara University, Rajpura 140417, Punjab, India

2 HOD, Professor, Department of Design, Vivekananda Global University, Jaipur, India

3 Associate Professor, International School of Engineering and Technology, Noida, University, 203201, India

4 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

5 Department of Electronics and Telecommunication Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

6 Assistant Professor, Department of Management Studies, Jain (Deemed-to-be University), Bengaluru, Karnataka, India


ABSTRACT

Print quality control is a vital process in modern printing, ensuring consistency, accuracy and aesthetic quality across mass production. Conventional machine-vision systems struggle to detect subtle defects such as streaks, blotches, misalignment and color differences because they rely on handcrafted feature extraction. This research proposes an intelligent print quality control system that uses Convolutional Neural Network (CNN) models to detect and classify defects automatically. The method begins by building a comprehensive dataset of high-resolution images captured under controlled lighting and camera conditions. Each image is annotated and labeled by defect type to enable supervised learning. Preprocessing steps such as normalization, resizing, augmentation and color enhancement improve model robustness and generalization. Several CNNs, including VGG, ResNet and MobileNet, are studied with transfer learning to maximize accuracy and performance. A customized CNN model adds layers for defect localization and region-based detection. The system architecture integrates real-time image capture, CNN inference and decision-making modules, executed on edge computing devices or GPUs for fast processing. Experimental results on classification accuracy and real-time processing indicate that the proposed system outperforms traditional vision-based systems.


Received 27 February 2025; Accepted 26 June 2025; Published 20 December 2025

Corresponding Author: Vaibhav Kaushik, vaibhav.kaushik.orp@chitkara.edu.in

DOI: 10.29121/shodhkosh.v6.i3s.2025.6813

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Print Quality Control, Convolutional Neural Network (CNN), Defect Detection, Transfer Learning, Real-Time Inspection, Image Processing


1. INTRODUCTION

With rapid changes in the modern printing industry, high print quality drives customer satisfaction, reduces waste and protects brand image. As printing technologies have advanced, from offset and flexographic to digital and 3D printing, the need for a stable quality control mechanism has grown substantially. Despite these technological advances, maintaining consistency across mass print batches remains a major problem. Variations in ink composition, mechanical discrepancies, color variation, streaks and surface spots can severely degrade the visual quality of printed items. An intelligent, reliable and automated quality control system is therefore essential to maintain high production standards. Conventional print inspection depends mainly on human operators or classical image processing methods. Manual inspection is intuitive, but it is time-consuming, subjective and prone to human error, particularly in large-scale production Groumpos (2021). Traditional machine-vision solutions, in turn, rely heavily on manually designed features such as texture descriptors, edges and color histograms. These procedures often fail to generalize across printing conditions because of differences in illumination, printing materials and defect complexity.

As a result, the precision and consistency of these systems degrade under real-world variability. Recent breakthroughs in Artificial Intelligence (AI), especially Deep Learning (DL), have changed visual inspection by enabling automatic feature extraction and pattern detection. Convolutional neural networks (CNNs), a class of deep learning algorithms, have shown impressive performance in image classification, object recognition and defect localization across industries such as semiconductor manufacturing, textiles and automotive parts Xu et al. (2018). Their hierarchical learning lets CNNs automatically detect complex patterns and subtle anomalies that conventional algorithms miss. This makes CNNs especially suitable for print quality inspection, where defects range from tiny streaks and blots to extensive color anomalies. Incorporating CNN-based models into print quality control marks a paradigm shift from rule-based detection to data-driven analysis. Trained on large annotated collections of printed samples, CNNs can learn the distinctive features of normal and flawed prints Chen et al. (2022). Furthermore, transfer learning with pretrained models such as VGG, ResNet and MobileNet makes it possible to build effective models from limited domain data. Such models not only improve classification accuracy but also substantially reduce training time compared with training from scratch.

2. Literature Review

2.1. Overview of print quality defects

Print quality defects are unwanted variations that impair the visual consistency, interpretability and aesthetic quality of a print. They may arise from ink formulation, mechanical faults, substrate defects and environmental influences. Common defects include streaks, linear defects caused by uneven ink application or clogged nozzles; blotches, caused by excess or insufficient ink deposition; and misalignment, caused by improper registration between color layers in multi-pass printing Patel and Shakya (2021). Color variation is another problem, mostly associated with changes in ink density, temperature or pressure that prevent the desired color profile from being achieved. High-speed printing systems are also prone to banding, smearing and ghosting caused by mechanical vibration or roller slippage. Detecting and classifying these defects is essential to high production standards because it directly affects customer satisfaction and productivity. Historically, defect detection relied on visual inspection, which is subjective and cannot be depended on over long periods Ren et al. (2022). Automated print quality control systems measure the occurrence of such defects by analyzing the printed image for texture uniformity, color consistency and geometric consistency.

2.2. Traditional Machine-Vision Techniques for Print Inspection

Before deep learning, machine-vision print inspection systems relied mostly on image processing and pattern recognition techniques. Such systems typically captured a digital image of the printed sample and compared it with a reference (gold standard) image using handcrafted features and fixed thresholds. Anomalies were detected with techniques such as edge detection, histogram analysis, texture descriptors and color space transformation Ghobakhloo (2020). For example, Gabor filters, Gray Level Co-occurrence Matrices (GLCM) and Local Binary Patterns (LBP) capture texture differences associated with streaks or blotches. Although these methods performed acceptably under controlled conditions, they struggled with variations in illumination, print materials and defect appearance. Minor changes in lighting or color calibration often produced false positives or false negatives. In addition, these algorithms required manual parameter tuning and did not adapt to new defect types Bode et al. (2020). Their reliance on fixed rules limited their scalability and flexibility under dynamic industrial conditions.
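To make the reference-comparison approach concrete, the sketch below flags pixels whose absolute difference from a gold-standard image exceeds a fixed threshold. It is a minimal plain-Python illustration, assuming grayscale images stored as nested lists; the function names, the 3×4 patch and the threshold of 30 are hypothetical and not taken from any of the cited systems.

```python
def diff_against_template(sample, template, threshold):
    """Flag pixels whose absolute difference from the reference exceeds threshold.

    sample, template: equally sized 2D lists of grayscale values (0-255).
    Returns a binary mask (1 = suspected defect).
    """
    if len(sample) != len(template) or any(
        len(rs) != len(rt) for rs, rt in zip(sample, template)
    ):
        raise ValueError("sample and template must have the same shape")
    return [
        [1 if abs(s - t) > threshold else 0 for s, t in zip(row_s, row_t)]
        for row_s, row_t in zip(sample, template)
    ]


def defect_ratio(mask):
    """Fraction of pixels flagged as defective."""
    total = sum(len(row) for row in mask)
    return sum(map(sum, mask)) / total if total else 0.0


# Hypothetical 3x4 patch: a dark streak in column 2 of the sample.
template = [[200, 200, 200, 200] for _ in range(3)]
sample = [[200, 198, 120, 201],
          [199, 200, 118, 200],
          [201, 202, 125, 199]]
mask = diff_against_template(sample, template, threshold=30)
print(mask)                # [[0, 0, 1, 0], [0, 0, 1, 0], [0, 0, 1, 0]]
print(defect_ratio(mask))  # 0.25
```

Adding a uniform +40 brightness shift to the same sample makes every pixel exceed the threshold, which illustrates why fixed thresholds are fragile under changing illumination.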

2.3. CNN-Based Image Classification and Defect Detection Studies

Convolutional Neural Networks (CNNs) have become valuable for image classification and defect detection across industries, including semiconductor, textile and surface quality inspection. They learn hierarchical representations directly from raw images, without handcrafted feature extraction. In print quality inspection, CNNs process spatial and color information jointly to detect complex defect patterns, including streaks, misalignment and color patches Zhang et al. (2022). Studies have reported that CNNs outperform traditional methods; in particular, transfer learning with pretrained models such as VGG16, ResNet50 and MobileNet has substantially improved defect classification accuracy on small datasets. Table 1 summarizes the literature on print quality and CNN-based quality control. Region-based CNNs (R-CNN) and Fully Convolutional Networks (FCNs) have also been studied for defect localization, where the position of a defect is identified rather than only its class.

Table 1 Summary of Related Work in Print Quality and CNN-Based Defect Detection

| Application Domain | Method/Algorithm | Dataset Type | Advantages | Limitations |
|---|---|---|---|---|
| Print defect inspection Yu et al. (2020) | SVM + Gabor features | Printed samples | Simple implementation | Low generalization under variable lighting |
| Label printing | Traditional CNN | Industrial dataset | Detects color defects effectively | Limited to one defect type |
| Surface defect detection Ali et al. (2022) | ResNet50 (Transfer Learning) | Metal & print surfaces | High accuracy, fast training | Requires large GPU resources |
| Digital printing | VGG16-based CNN | Synthetic + real images | Good for color and streak defects | Overfitting on small datasets |
| Texture pattern analysis Gehrmann and Gunnarsson (2020) | Deep Autoencoder | Custom printed textures | Unsupervised detection | Poor localization accuracy |
| Packaging print quality | ResNet101 + ROI Pooling | Industrial print dataset | Strong defect localization | High computational load |
| Offset printing Cui et al. (2022) | MobileNetV2 | Real-time captured data | Lightweight, edge-compatible | Slightly lower precision |
| Textile and print inspection | YOLOv3 | Fabric & label prints | Fast and localized detection | Requires fine-tuning for small defects |
| Paper print quality Gundewar and Kane (2021) | Custom CNN | Controlled lab dataset | Good defect classification | Limited dataset diversity |
| Flexible packaging Farhat et al. (2023) | EfficientNet-B0 | Large defect dataset | Excellent accuracy, low overfitting | Complex hyperparameter tuning |

3. Methodology

3.1. Dataset creation

1) Image acquisition setup

A successful CNN-based print quality inspection system depends on high-quality, varied image data. The image acquisition setup is designed so that print samples are captured under standardized, controlled conditions, which keeps the data uniform. A high-resolution industrial camera with a suitable lens is mounted at a constant distance from the imaged surface to capture fine defect details such as streaks, blotches and misalignments. Diffused LED or ring lights provide uniform, glare-free illumination and minimize the shadows and reflections that would otherwise distort color accuracy and texture representation Schmidl et al. (2022). The print samples span different materials, including paper, plastic and cloth, and different printing methods, including inkjet, offset and digital printing. Some samples are deliberately produced with known defects to simulate a real production environment. Images are captured at different angles and resolutions to increase dataset diversity. This arrangement helps ensure that the dataset represents both normal and defective print conditions for CNN training and validation.
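A quick way to check whether a camera placement can resolve fine defects is to compute the object-space size of one pixel. The sketch below assumes a hypothetical 400 mm field of view imaged by a 4096-pixel-wide sensor, plus the common rule of thumb that a defect should span about three pixels to be detected reliably; neither value comes from the paper.

```python
def mm_per_pixel(field_of_view_mm, sensor_pixels):
    """Object-space size of one pixel along one image axis."""
    return field_of_view_mm / sensor_pixels


def min_detectable_defect_mm(field_of_view_mm, sensor_pixels, pixels_per_defect=3):
    """Smallest defect width that still spans `pixels_per_defect` pixels.

    The 3-pixel default is a rule-of-thumb assumption, not a value from the paper.
    """
    return pixels_per_defect * mm_per_pixel(field_of_view_mm, sensor_pixels)


# Hypothetical setup: 400 mm wide print imaged by a 4096-pixel-wide sensor.
print(round(mm_per_pixel(400, 4096), 4))              # 0.0977 (mm per pixel)
print(round(min_detectable_defect_mm(400, 4096), 3))  # 0.293 (mm)
```

Halving the field of view (moving the camera closer or using a longer lens) halves both numbers, which is the trade-off between coverage and defect size that the fixed camera distance has to settle.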

2) Image Annotation and Defect Labeling

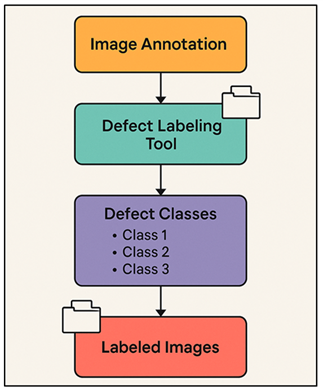

Annotation and defect labeling follow image acquisition and form the second key component of supervised CNN training. Each captured image is carefully examined and assigned to a defect class: streaks, blotches, color variation, misalignment or normal print. Annotation tools such as LabelImg, CVAT or LabelMe are used to localize defect areas precisely by drawing tight bounding boxes or segmentation masks over the affected region. Multiple annotators label the same samples to improve consistency and reduce labeling bias, and discrepancies are resolved by expert consensus. Each labeled image is stored locally together with metadata such as defect type, severity level and position. Figure 1 illustrates the multistage annotation and labeling workflow that enables CNN-based print inspection. This structured labeling allows the CNN to learn the spatial patterns and visual cues associated with each defect type.

Figure 1 Multistage Annotation and Labeling Framework for CNN-Based Print Inspection
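The multi-annotator step described above can be sketched as a majority vote that escalates ties or weak agreement to expert review. This is an illustrative assumption about how the consensus step might be automated; the class names mirror the defect categories listed above, while `consensus_label` and its `min_agreement` parameter are hypothetical.

```python
from collections import Counter

DEFECT_CLASSES = ("normal", "streak", "blotch", "color_variation", "misalignment")


def consensus_label(votes, min_agreement=2):
    """Majority label from several annotators, or None if expert review is needed."""
    if not votes:
        return None
    unknown = set(votes) - set(DEFECT_CLASSES)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    ranked = Counter(votes).most_common()
    top_label, top_count = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == top_count
    if tied or top_count < min_agreement:
        return None  # disagreement or too few votes: escalate to experts
    return top_label


print(consensus_label(["streak", "streak", "blotch"]))  # streak
print(consensus_label(["streak", "blotch"]))            # None
```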

The labeled data is then split into training, validation and test sets to support model evaluation and generalization. High-quality annotations not only improve classification accuracy but also enable the system to localize defects in real-world print inspection.
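A stratified split keeps rare defect classes represented in every subset. The following is a minimal sketch, assuming a 70/15/15 ratio and a fixed random seed; the paper does not state its split proportions, so these values and the function name are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, fractions=(0.7, 0.15, 0.15), seed=42):
    """Split items into train/val/test while keeping class proportions similar."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append(item)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, val, test = [], [], []
    for label in sorted(by_class):
        group = by_class[label][:]
        rng.shuffle(group)
        n_train = round(len(group) * fractions[0])
        n_val = round(len(group) * fractions[1])
        train += [(x, label) for x in group[:n_train]]
        val += [(x, label) for x in group[n_train:n_train + n_val]]
        test += [(x, label) for x in group[n_train + n_val:]]
    return train, val, test


items = list(range(120))
labels = ["normal"] * 100 + ["streak"] * 20
train, val, test = stratified_split(items, labels)
print(len(train), len(val), len(test))  # 84 18 18
```

With 100 normal and 20 streak images, each subset keeps the same 5:1 ratio (for example, the test set holds 15 normal and 3 streak images), so the rarer defect class is not accidentally missing from evaluation.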

3.2. Data preprocessing

1) Normalization, resizing, augmentation

Data preprocessing ensures that the CNN model receives reliable, consistent input, which is essential for successful learning. It begins with normalization, in which pixel intensities are scaled (usually to the range 0 to 1) to stabilize convergence and avoid numerical instability during training. Resizing gives all images the same dimensions (e.g., 224×224 or 256×256 pixels), matching the input size expected by pretrained CNNs such as VGG or ResNet. This enables batch processing and reduces computation time. To improve generalization and prevent overfitting, data augmentation is applied: random rotations, flips, translations, zooming, brightness changes and additive Gaussian noise. These transformations artificially enlarge the dataset and mimic real-world conditions such as changes in orientation or light intensity. Augmentation leads the CNN to learn robust, invariant features, making it more effective on unseen print samples. Together, these preprocessing steps improve the quality, variety and representativeness of the dataset and, ultimately, the accuracy and reliability of the defect detection system.
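The sketch below illustrates these steps on a grayscale image stored as nested lists: scaling to [0, 1], nearest-neighbour resizing, and a random flip, brightness change and Gaussian noise. It is a plain-Python illustration under assumed parameter ranges (brightness gain 0.8 to 1.2, noise standard deviation 0.02); rotations, translations and zoom are omitted for brevity, and a production pipeline would normally rely on an image library instead.

```python
import random


def normalize(image, max_value=255.0):
    """Scale 8-bit pixel intensities to [0, 1]."""
    return [[px / max_value for px in row] for row in image]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2D grayscale image (e.g. to 224x224)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def augment(image, rng, gain_range=(0.8, 1.2), noise_sigma=0.02):
    """Random horizontal flip, brightness scaling and Gaussian noise.

    Expects a normalized image; the output is clipped back to [0, 1].
    Parameter ranges are illustrative assumptions, not the paper's settings.
    """
    if rng.random() < 0.5:                      # horizontal flip
        out = [row[::-1] for row in image]
    else:
        out = [row[:] for row in image]
    gain = rng.uniform(*gain_range)             # global brightness change
    return [
        [min(1.0, max(0.0, px * gain + rng.gauss(0.0, noise_sigma))) for px in row]
        for row in out
    ]


img = [[0, 128, 255], [64, 192, 32]]   # tiny 2x3 stand-in for a print patch
norm = normalize(img)
big = resize_nearest(norm, 4, 6)       # upsample to 4x6
aug = augment(big, random.Random(0))
print(len(big), len(big[0]))           # 4 6
```

Passing an explicit `random.Random` instance keeps each augmented epoch reproducible for a given seed.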

2) Color and Texture Enhancement

Color and texture are key features in print quality inspection because most defects appear as slight differences in these characteristics. Color enhancement corrects color imbalances and highlights chromatic anomalies. Techniques such as histogram equalization, contrast stretching and color balance adjustment improve visibility and reveal the fine color variations that indicate problems such as uneven ink distribution or fading. In some applications, converting between color spaces (RGB, HSV or Lab) isolates hue or intensity values that are easier to analyze. Texture enhancement, in turn, accentuates surface-level characteristics that reveal structural anomalies such as streaks, smears or blotches. Methods such as Laplacian, Gabor and high-pass filtering emphasize small texture features; because high-pass filters also amplify noise, they are usually combined with a smoothing step. Effective color and texture preprocessing not only makes defects more visible but also helps the CNN learn discriminative features, leading to higher detection accuracy and more reliable print quality assessment.
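Two of the enhancement techniques named above can be sketched in a few lines: global histogram equalization, which spreads a narrow intensity range over the full 0 to 255 scale, and a 3×3 Laplacian filter, which responds to local intensity changes such as the edges of a streak. This is an illustrative plain-Python version for 8-bit grayscale images; the test patch is hypothetical.

```python
def equalize_histogram(image, levels=256):
    """Global histogram equalization of an 8-bit grayscale image (2D list of ints)."""
    flat = [px for row in image for px in row]
    hist = [0] * levels
    for px in flat:
        hist[px] += 1
    cdf, running = [], 0
    for count in hist:            # cumulative distribution of intensities
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:              # constant image: nothing to stretch
        return [row[:] for row in image]
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [[lut[px] for px in row] for row in image]


LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def laplacian(image):
    """3x3 Laplacian response on the valid (interior) region of the image."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(LAPLACIAN[i][j] * image[r + i - 1][c + j - 1]
                for i in range(3) for j in range(3))
            for c in range(1, w - 1)
        ]
        for r in range(1, h - 1)
    ]


patch = [[100, 100, 100],
         [100, 110, 100],
         [100, 100, 100]]         # faint spot on a flat background
print(equalize_histogram(patch))  # [[0, 0, 0], [0, 255, 0], [0, 0, 0]]
print(laplacian(patch))           # [[-40]]
```

The faint 10-level spot becomes a full-contrast feature after equalization, and the Laplacian gives a strong response exactly where intensity changes locally.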

3.3. CNN model design

1) Baseline CNN architecture

The baseline Convolutional Neural Network (CNN) serves as the starting point for print defect detection, providing a structured pipeline for feature extraction and classification. It generally consists of a series of convolutional, pooling and fully connected layers that learn hierarchical features from raw image data automatically. The first convolutional layers capture low-level features such as edges, lines and color gradients, which are crucial for detecting elementary texture variations. Deeper layers extract more abstract features, such as complex defect shapes, streaks and color inconsistencies. Each convolutional layer is followed by a Rectified Linear Unit (ReLU) activation, which introduces nonlinearity so the model can learn complex visual relationships. Max pooling layers reduce spatial dimensions, help limit overfitting and retain the most salient features. After the convolutional blocks, the extracted features are flattened and passed through fully connected layers, and a final softmax output layer assigns the image to one of the defect categories.
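The effect of stacking convolution and pooling layers on feature-map size follows from the standard output-size formula floor((n + 2p - k) / s) + 1. The sketch below traces a hypothetical baseline with three blocks of 3×3 convolution (padding 1) and 2×2 max pooling on a 224×224×3 input, then applies a numerically stable softmax over five class scores corresponding to the defect categories listed earlier. The filter counts (32, 64, 128) are assumptions, not the paper's configuration.

```python
import math


def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_baseline(h=224, w=224, channels=3, filters=(32, 64, 128)):
    """Shapes through hypothetical [3x3 conv (pad 1) -> ReLU -> 2x2 max pool] blocks."""
    shapes = [(h, w, channels)]
    for f in filters:
        h, w = conv_out(h, 3, 1, 1), conv_out(w, 3, 1, 1)  # 'same' convolution
        h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)        # 2x2 pooling, stride 2
        shapes.append((h, w, f))
    return shapes


def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


shapes = trace_baseline()
print(shapes)  # [(224, 224, 3), (112, 112, 32), (56, 56, 64), (28, 28, 128)]
h, w, c = shapes[-1]
print(h * w * c)  # 100352 values enter the fully connected layers
probs = softmax([2.0, 1.0, 0.1, -1.0, 0.5])  # hypothetical scores for 5 classes
```

The flattened size (100,352 values feeding the first fully connected layer in this hypothetical configuration) shows why pooling, or global average pooling in deeper networks, matters for keeping the classifier small.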

2) Use of transfer learning

· VGG

The VGG (Visual Geometry Group) network is one of the first deep CNN architectures to be used effectively for image classification. Its design is simple but strong: small 3×3 convolutional filters are stacked to increasing depth to extract hierarchical features. For print defect detection, transfer learning with VGG16 or VGG19 reuses weights pretrained on large datasets such as ImageNet, so features can be extracted efficiently even with little print defect data. The early layers detect basic image patterns such as color gradients and edges, while the deeper layers detect complex defect patterns such as streaks or blotches.

Input image representation:

$$X \in \mathbb{R}^{H \times W \times C}$$

where H, W and C are the height, width and number of channels of the image, respectively.

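Convolution operation:

$$F^{(l)} = \sigma\left(W^{(l)} * F^{(l-1)} + b^{(l)}\right), \qquad F^{(0)} = X$$

where $W^{(l)}$ are the convolution weights of layer l, $b^{(l)}$ is the bias, $*$ denotes convolution and σ is the ReLU non-linearity.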

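Pooling (dimensionality reduction):

$$P^{(l)}_{i,j,c} = \max_{(m,n) \in \mathcal{R}_{i,j}} F^{(l)}_{m,n,c}$$

where $\mathcal{R}_{i,j}$ is the pooling window associated with output position (i, j). Max pooling keeps the dominant spatial response in each window.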
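The VGG building blocks above (convolution, ReLU and max pooling) can be illustrated on a single-channel patch. As in most deep learning frameworks, the "convolution" here is implemented as cross-correlation. The vertical-edge kernel and the 5×5 patch containing a one-pixel vertical streak are hypothetical examples chosen for readability.

```python
def conv2d_valid(x, kernel, bias=0.0):
    """Single-channel 'valid' 2D cross-correlation, as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(kernel[m][n] * x[i + m][j + n] for m in range(kh) for n in range(kw)) + bias
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2, stride=2):
    """Keep the strongest response in each size x size window."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(fmap[i * stride + m][j * stride + n] for m in range(size) for n in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


patch = [[0, 0, 1, 0, 0] for _ in range(5)]   # one-pixel vertical streak
kernel = [[-1, 0, 1] for _ in range(3)]       # vertical-edge detector
fmap = conv2d_valid(patch, kernel)            # 3x3 feature map
pooled = max_pool(relu(fmap))
print(pooled)  # [[3.0]]
```

ReLU discards the negative response on the streak's right edge, and pooling keeps the strongest positive response, mirroring how early VGG layers respond to edges.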

· ResNet

ResNet (Residual Network) was a breakthrough in deep learning: its residual connections mitigate the vanishing gradient problem and make very deep networks trainable. ResNet50 and ResNet101 are widely used for print defect detection through transfer learning, reusing pretrained weights and adapting quickly to the characteristics of prints. Each residual block learns a residual function on top of an identity shortcut, so useful low-level information reaches deeper layers without being degraded. This improves the model's ability to identify small, localized print defects of differing spatial extent.

Conventional (plain) convolutional mapping:

$$y = f(x)$$

where f(·) denotes convolution with batch normalization and ReLU.

Residual (shortcut) connection:

$$y = f(x) + x$$

This identity mapping lets gradients flow directly from layer to layer.

Activation after residual addition:

$$h = \sigma\left(f(x) + x\right)$$

where σ is the ReLU non-linearity applied after the residual fusion.

These residual connections let ResNet grow deeper and learn more difficult print defects without degradation.
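A residual block can be sketched with vectors instead of feature maps. Here a dense layer followed by ReLU stands in for f(·), which in ResNet is convolution with batch normalization; this substitution is a simplification for illustration only. The example shows that when f(x) contributes nothing, the block passes a non-negative input through unchanged, which is the identity behavior described above.

```python
def relu_vec(v):
    """Element-wise ReLU on a vector."""
    return [max(0.0, x) for x in v]


def dense(weights, bias, x):
    """y = W x + b; a stand-in for the convolution + batch-norm part of f(.)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def residual_block(x, weights, bias):
    """h = relu(f(x) + x), with the simplified f(x) = relu(W x + b)."""
    fx = relu_vec(dense(weights, bias, x))
    return relu_vec([a + b for a, b in zip(fx, x)])  # identity shortcut, then ReLU


x = [0.5, 2.0, 1.0]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_block(x, zeros, [0.0] * 3))  # [0.5, 2.0, 1.0]
print(residual_block(x, [[1, 0, 0], [0, 0, 0], [0, 0, -1]], [0, 0, 0]))  # [1.0, 2.0, 1.0]
```

Because the shortcut adds x back unchanged, a block only has to learn the correction f(x); an extra block that learns f(x) close to zero costs little, which is why depth no longer degrades accuracy.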

· MobileNet

MobileNet is a lightweight CNN architecture suited to real-time and embedded applications, which makes it appropriate for on-device print quality control. Unlike traditional deep networks, MobileNet uses depthwise separable convolutions, which greatly reduce the number of parameters and the computational cost while keeping accuracy competitive. Through transfer learning, pretrained MobileNet models can be adapted to print defect datasets to detect visual anomalies such as misalignments, smears or color changes. Its small size allows deployment on edge devices such as embedded GPUs, Raspberry Pi boards or NVIDIA Jetson modules for real-time defect detection on production lines.

Step 1, depthwise convolution (per-channel filtering):

$$\hat{F}_{i,j,c} = \sum_{m,n} K_{m,n,c} \, F_{i+m,\,j+n,\,c}$$

Each input channel c is filtered individually by its own kernel $K_{\cdot,\cdot,c}$.

Step 2, pointwise convolution (channel mixing):

$$G_{i,j,d} = \sum_{c} P_{c,d} \, \hat{F}_{i,j,c}$$

The 1×1 convolution with weights P fuses features across channels to produce each output channel d.
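The savings of depthwise separable convolution can be checked by counting multiply-accumulate operations. In the standard cost analysis, a k×k convolution with m input and n output channels on an f×f output costs k·k·m·n·f·f, whereas the separable version costs k·k·m·f·f (depthwise) plus m·n·f·f (pointwise), a ratio of 1/n + 1/k². The layer sizes below are illustrative, not taken from this study.

```python
def standard_conv_mults(k, m, n, f):
    """Multiply-accumulates of a k x k conv: m in, n out channels, f x f output."""
    return k * k * m * n * f * f


def separable_conv_mults(k, m, n, f):
    """Depthwise k x k per-channel filtering plus 1 x 1 pointwise channel mixing."""
    depthwise = k * k * m * f * f
    pointwise = m * n * f * f
    return depthwise + pointwise


# Illustrative layer: 3x3 kernels, 32 -> 64 channels, 112x112 output.
std = standard_conv_mults(3, 32, 64, 112)
sep = separable_conv_mults(3, 32, 64, 112)
print(std, sep)             # 231211008 29302784
print(round(sep / std, 4))  # 0.1267, i.e. 1/64 + 1/9
```

For 3×3 kernels the ratio approaches 1/9 as the number of output channels grows, which is the roughly eight- to nine-fold saving that makes MobileNet practical on edge hardware.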

4. Implementation in Real-Time Print Quality Control

4.1. System architecture

The real-time CNN-based print quality control system follows a structured architecture that supports continuous inspection and immediate decision-making. The workflow begins with a high-resolution industrial camera fixed along the production line, which captures images of printed material as it passes through the printing system. Controlled lighting provides uniform illumination, reducing the reflections and shadows that can obscure defects. The captured images are sent to the processing unit, where the CNN model analyzes them. In the CNN pipeline, images are preprocessed (normalized, resized and enhanced) and passed through the trained network. The model extracts hierarchical features and classifies the print as defective or non-defective. For localization, custom layers or attention mechanisms produce heatmaps or bounding boxes. The defect decision module then uses the CNN output to determine the type, severity and location of each defect.
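One possible shape for the defect decision module is sketched below: it takes the softmax scores and a localization heatmap, reports the predicted class, and derives a severity score and a location from the heatmap. The thresholds, the severity rule (fraction of heatmap cells above 0.5), the "uncertain" outcome for low-confidence predictions and the function name are assumptions for illustration; the paper does not specify how its decision module computes these quantities.

```python
LABELS = ("normal", "streak", "blotch", "color_variation", "misalignment")


def decide(probs, heatmap, labels=LABELS, prob_threshold=0.5, heat_threshold=0.5):
    """Turn CNN outputs into a hypothetical accept/reject decision record."""
    best = max(range(len(probs)), key=probs.__getitem__)
    label, confidence = labels[best], probs[best]
    if confidence < prob_threshold:   # low confidence: route to manual review
        return {"defective": None, "type": "uncertain", "confidence": confidence}
    if label == "normal":
        return {"defective": False, "type": "normal", "confidence": confidence}
    cells = [(v, r, c) for r, row in enumerate(heatmap) for c, v in enumerate(row)]
    hot = sum(1 for v, _, _ in cells if v > heat_threshold)
    _, row, col = max(cells)          # hottest cell marks the defect location
    return {
        "defective": True,
        "type": label,
        "confidence": confidence,
        "severity": hot / len(cells),  # share of the image flagged by the heatmap
        "location": (row, col),
    }


heatmap = [[0.1, 0.9, 0.2],
           [0.1, 0.8, 0.1],
           [0.0, 0.7, 0.1]]
result = decide([0.05, 0.85, 0.04, 0.03, 0.03], heatmap)
print(result["type"], result["location"])  # streak (0, 1)
```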

4.2. Hardware Requirements (GPU/Edge Devices)

A real-time CNN-based print inspection system requires capable hardware that can handle intensive computation with minimal latency. The CNN model is usually trained on Graphics Processing Units (GPUs), such as NVIDIA RTX or Tesla series cards, whose high degree of parallelism suits the matrix computations of deep learning. Figure 2 shows an integrated GPU-edge architecture supporting real-time print quality checks. During deployment, however, full-sized GPU servers are not practical in every industrial setting, especially on small or mobile printing lines.

Figure 2 GPU and Edge Device Integration Architecture for Print Quality Inspection

Edge devices such as the NVIDIA Jetson Nano, Jetson Xavier or Google Coral TPU offer a good trade-off between power consumption and performance in these settings. They perform inference on-site, processing captured images locally without a connection to a cloud server, which reduces communication delays and supports real-time decision-making.
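Whether a model is fast enough depends on how quickly the printed material moves. Assuming consecutive frames must tile the moving web, the required frame rate is the line speed divided by the distance the web advances per frame. The line speed of 2 m/s and frame length of 0.1 m below are hypothetical values, and the helper names are illustrative.

```python
def required_fps(line_speed_m_s, frame_length_m, overlap=0.0):
    """Frames per second needed so consecutive frames cover the moving web.

    overlap: fraction of each frame shared with the next frame.
    """
    advance = frame_length_m * (1.0 - overlap)
    return line_speed_m_s / advance


def latency_budget_ms(fps):
    """Per-frame time available if frames are processed strictly one at a time."""
    return 1000.0 / fps


need = required_fps(2.0, 0.1)                 # hypothetical 2 m/s line, 0.1 m frames
print(round(need, 2))                         # 20.0
print(round(latency_budget_ms(need), 1))      # 50.0
```

Under these assumed numbers, a model must sustain at least 20 frames per second, leaving a budget of about 50 ms per frame if frames are processed one at a time; adding overlap between frames raises the requirement.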

5. Results and Discussion

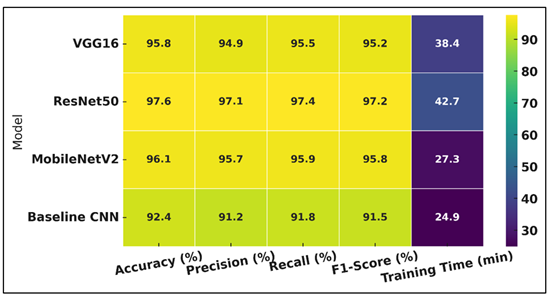

The CNN-based print quality control system proved effective at detecting and classifying print defects such as streaks, blotches and misalignments. ResNet50 achieved the highest accuracy (97.6%), exceeding traditional machine-vision methods. MobileNet was the most efficient, reaching 45 frames per second in real time on edge devices. Visual heatmaps supported accurate defect localization.

Table 2 CNN Model Performance Comparison

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Training Time (min) |
|---|---|---|---|---|---|
| VGG16 | 95.8 | 94.9 | 95.5 | 95.2 | 38.4 |
| ResNet50 | 97.6 | 97.1 | 97.4 | 97.2 | 42.7 |
| MobileNetV2 | 96.1 | 95.7 | 95.9 | 95.8 | 27.3 |
| Baseline CNN | 92.4 | 91.2 | 91.8 | 91.5 | 24.9 |

Table 2 compares four CNNs (VGG16, ResNet50, MobileNetV2 and a baseline CNN) for intelligent quality inspection of printed materials. ResNet50 achieved the highest overall accuracy (97.6%), together with the best precision (97.1%) and recall (97.4%). Figure 3 presents a comparative analysis of the performance measures across the CNNs used for inspection.

Figure 3 Comprehensive Performance Comparison of CNN Architectures

ResNet50's residual connections enable stronger feature extraction, which improves detection of more complex print defects such as streaks and color anomalies. VGG16 was less accurate (95.8%) but performed stably owing to its deep yet uniform structure; it required 38.4 minutes of training, longer than MobileNetV2 and the baseline CNN.

Figure 4 Evaluation Metrics and Training Time Analysis of CNN Models

Figure 4 examines the evaluation measures and training durations of the compared CNN models. MobileNetV2 offered the best balance between accuracy (96.1%) and computational efficiency, with the shortest training time among the three transfer-learning models (27.3 minutes; only the baseline CNN, at 24.9 minutes, trained faster), which makes it well suited to real-time operation on edge devices.
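As a consistency check on Table 2, the F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the reported precision and recall reproduces each reported F1 value to one decimal place. The helpers below are a plain-Python sketch; the count-based `precision` and `recall` functions show the standard definitions and are not the paper's evaluation code.

```python
def precision(tp, fp):
    """Share of predicted defects that are real defects."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Share of real defects that were detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1(p, r):
    """Harmonic mean of precision and recall (same units in and out)."""
    return 2 * p * r / (p + r) if p + r else 0.0


table2 = {  # model: (precision %, recall %, reported F1 %)
    "VGG16": (94.9, 95.5, 95.2),
    "ResNet50": (97.1, 97.4, 97.2),
    "MobileNetV2": (95.7, 95.9, 95.8),
    "Baseline CNN": (91.2, 91.8, 91.5),
}
for model, (p, r, reported) in table2.items():
    print(model, round(f1(p, r), 1), reported)
```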

Table 3 Real-Time Performance and Defect Detection Analysis

| Model | Inference Speed (FPS) | Avg. Latency (ms) | Defect Localization Accuracy (%) | False Positive Rate (%) |
|---|---|---|---|---|
| VGG16 | 32 | 78 | 93.6 | 3.1 |
| ResNet50 | 41 | 61 | 95.8 | 2.4 |
| MobileNetV2 | 45 | 48 | 94.1 | 2.8 |
| Baseline CNN | 29 | 85 | 90.3 | 4.2 |

Table 3 shows the real-time performance and defect detection results of the four CNN models: VGG16, ResNet50, MobileNetV2 and the baseline CNN. MobileNetV2 achieved the highest inference speed (45 FPS) and the lowest average latency (48 ms), making it the most efficient option for edge devices, such as those integrated with printers, that check images on-line. Figure 5 shows the defect detection performance and inference efficiency of the various CNN architectures.

Figure 5 Inference Efficiency and Defect Detection Performance Across CNN Architectures

ResNet50 achieved the highest defect localization accuracy (95.8%) and the lowest false positive rate (2.4%), indicating more precise localization under dynamic conditions. VGG16 remained stable, with 93.6% localization accuracy, but its computationally heavier architecture increased latency to 78 ms.
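The throughput and latency figures in Table 3 are not simple reciprocals: at 48 ms per frame, a strictly sequential pipeline would reach only about 20.8 FPS, yet MobileNetV2 is reported at 45 FPS. If the FPS values are sustained throughput and the latencies are end-to-end times per frame (an assumption, since the measurement procedure is not described), Little's law gives the average number of frames in flight as throughput × latency. The sketch below applies it to the reported values; the helper names are illustrative.

```python
def frames_in_flight(throughput_fps, latency_ms):
    """Little's law: mean frames in the pipeline = throughput x mean latency."""
    return throughput_fps * latency_ms / 1000.0


def sequential_fps(latency_ms):
    """Throughput if each frame must finish before the next one starts."""
    return 1000.0 / latency_ms


table3 = {  # model: (reported FPS, reported average latency in ms)
    "VGG16": (32, 78),
    "ResNet50": (41, 61),
    "MobileNetV2": (45, 48),
    "Baseline CNN": (29, 85),
}
for model, (fps, latency) in table3.items():
    print(f"{model}: {frames_in_flight(fps, latency):.2f} frames in flight, "
          f"{sequential_fps(latency):.1f} FPS if sequential")
```

Under that interpretation, every model would be handling roughly two to three frames concurrently, for example through pipelined capture, preprocessing and inference or through batching.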

6. Conclusion

This paper introduces a data-driven method for print quality control based on Convolutional Neural Network (CNN) models. Using deep learning and transfer learning, the proposed system automates the detection and classification of print defects that traditional inspection methods cannot easily detect. The study showed that CNN architectures such as VGG, ResNet and MobileNet could distinguish normal from defective prints despite varying environmental and operational conditions. The research process included systematic dataset generation, preprocessing and optimization of pretrained CNN models. Custom defect localization layers enabled both classification and accurate spatial localization of defects. Deployment on edge devices demonstrated the system's practicality for real-time industrial applications, providing quick feedback for process correction. Experiments showed that ResNet50 achieved the highest accuracy, whereas MobileNet provided a lightweight, high-speed option suitable for small hardware. Because the system detects defects consistently and in near real time, it can substantially reduce production waste, improve operational efficiency and raise overall print quality.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Ali, Y., Shah, S. W., Khan, W. A., and Waqas, M. (2022). Cyber Secured Internet of Things-Enabled Additive Manufacturing: Industry 4.0 Perspective. Journal of Advanced Manufacturing Systems, 22(2), 239–255. https://doi.org/10.1142/S0219686723500129

Bode, G., Thul, S., Baranski, M., and Müller, D. (2020). Real-World Application of Machine-Learning-Based Fault Detection Trained with Experimental Data. Energy, 198, 117323. https://doi.org/10.1016/j.energy.2020.117323

Chen, Q., Yao, Y., Gui, G., and Yang, S. (2022). Gear Fault Diagnosis Under Variable Load Conditions Based on Acoustic Signals. IEEE Sensors Journal, 22(22), 22344–22355. https://doi.org/10.1109/JSEN.2022.3214286

Cui, P. H., Wang, J. Q., and Li, Y. (2022). Data-Driven Modelling, Analysis and Improvement of Multistage Production Systems with Predictive Maintenance and Product Quality. International Journal of Production Research, 60(21), 6848–6865. https://doi.org/10.1080/00207543.2021.1962558

Farhat, M. H., Hentati, T., Chiementin, X., Bolaers, F., Chaari, F., and Haddar, M. (2023). Numerical Model of a Single Stage Gearbox Under Variable Regime. Mechanics Based Design of Structures and Machines, 51(5), 1054–1081. https://doi.org/10.1080/15397734.2020.1863226

Gehrmann, C., and Gunnarsson, M. (2020). A Digital Twin-Based Industrial Automation and Control System Security Architecture. IEEE Transactions on Industrial Informatics, 16(1), 669–680. https://doi.org/10.1109/TII.2019.2938885

Ghobakhloo, M. (2020). Industry 4.0, Digitization, and Opportunities for Sustainability. Journal of Cleaner Production, 252, 119869. https://doi.org/10.1016/j.jclepro.2019.119869

Groumpos, P. P. (2021). A Critical Historical and Scientific Overview of All Industrial Revolutions. IFAC-PapersOnLine, 54(13), 464–471. https://doi.org/10.1016/j.ifacol.2021.10.492

Gundewar, S. K., and Kane, P. V. (2021). Condition Monitoring and Fault Diagnosis of Induction Motor. Journal of Vibration Engineering and Technologies, 9(4), 643–674. https://doi.org/10.1007/s42417-020-00253-y

Patel, A., and Shakya, P. (2021). Spur Gear Crack Modelling and Analysis Under Variable Speed Conditions using Variational Mode Decomposition. Mechanism and Machine Theory, 164, 104357. https://doi.org/10.1016/j.mechmachtheory.2021.104357

Ren, Z., Fang, F., Yan, N., and Wu, Y. (2022). State of the Art in Defect Detection Based on Machine Vision. International Journal of Precision Engineering and Manufacturing-Green Technology, 9(3), 661–691. https://doi.org/10.1007/s40684-021-00343-6

Schmidl, S., Wenig, P., and Papenbrock, T. (2022). Anomaly Detection in Time Series: A Comprehensive Evaluation. Proceedings of the VLDB Endowment, 15(8), 1779–1797. https://doi.org/10.14778/3538598.3538602

Xu, M., David, J. M., and Kim, S. H. (2018). The Fourth Industrial Revolution: Opportunities and Challenges. International Journal of Financial Research, 9(2), 90–95. https://doi.org/10.5430/ijfr.v9n2p90

Yu, L., Yao, X., Yang, J., and Li, C. (2020). Gear Fault Diagnosis Through Vibration and Acoustic Signal Combination Based on Convolutional Neural Network. Information, 11(5), 266. https://doi.org/10.3390/info11050266

Zhang, S., Zhou, J., Wang, E., Zhang, H., Gu, M., and Pirttikangas, S. (2022). State of the Art on Vibration Signal Processing Towards Data-Driven Gear Fault Diagnosis. IET Collaborative Intelligent Manufacturing, 4(3), 249–266. https://doi.org/10.1049/cim2.12064


This work is licensed under a: Creative Commons Attribution 4.0 International License


© ShodhKosh 2024. All Rights Reserved.