ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


Motion Capture and AI in Dance Performance Analysis

Nipun Setia 1, Abhishek Kumar Singh 2, Lovish Dhingra 3, Sonia Pandey 4, Mohit Aggarwal 5, Dr. Lakshman K 6, Manisha Tushar Jadhav 7

1 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

2 Assistant Professor, Department of Management Studies, Vivekananda Global University, Jaipur, India

3 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

4 Lloyd Law College, Greater Noida, Uttar Pradesh 201306, India

5 Assistant Professor, School of Engineering and Technology, Noida International University, 203201, India

6 Associate Professor, Department of Management Studies, JAIN (Deemed-to-be University), Bengaluru, Karnataka, India

7 Department of Electronics and Telecommunication Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

ABSTRACT

The combination of artificial intelligence (AI) and motion capture technology has transformed how dance performance is studied and practiced. Motion capture technologies, including marker-based optical, inertial, and markerless vision-based systems, allow human movement to be measured quantitatively, at high quality, and at scale, with far greater accuracy and objectivity than classical observation methods. These technologies capture kinematic, kinetic, and spatial parameters that can be used for biomechanical evaluation, choreographic archiving, and high-resolution reconstruction of complex sequences. At the same time, AI techniques have become effective at interpreting this high-dimensional movement data. Machine learning algorithms can automatically classify steps, styles, and performance qualities, and deep learning models can identify subtler patterns that distinguish artistic expressiveness or technical skill. AI-based feedback systems can also offer corrective feedback, streamline learning, and increase the effectiveness of training. The intersection of motion capture and AI has opened new possibilities, including multimodal data fusion, automatic skill scoring, and real-time performance tracking. These advances support adaptive coaching mechanisms that respond dynamically to a dancer's movement patterns. Case studies of recent research prototypes and commercial applications in professional dance, rehabilitation, and interactive media demonstrate their effectiveness. Despite substantial gains, challenges remain concerning the accuracy of markerless (sensorless) capture, the diversity of datasets, and the personalization of AI models to diverse dance styles and bodies.


Received 24 February 2025

Accepted 22 June 2025

Published 20 December 2025

Corresponding Author: Nipun Setia, nipun.setia.orp@chitkara.edu.in

DOI: 10.29121/shodhkosh.v6.i3s.2025.6795

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Motion Capture, Dance Analysis, Artificial Intelligence, Movement Classification, Performance Enhancement


1. INTRODUCTION

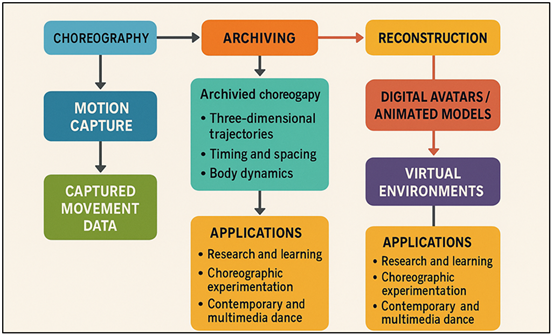

Dance is a multidimensional art form that unites technique, emotion, and cultural significance in a single, dynamic medium of human creativity. Historically, the analysis and assessment of dance performance have relied heavily on expert observation, subjective judgment, and qualitative description. Although these methods capture the artistry and subtlety of dance, they cannot always provide accurate, reliable, and measurable evaluations of movement. As modern performance settings become more interdisciplinary, there is a clear need for robust analytical instruments that bridge the gap between artistic interpretation and scientific rigor. In response, technological innovations, especially motion capture (MoCap) and artificial intelligence (AI), have opened new avenues for studying dance at an unprecedented scale. Motion capture technology, first used in biomechanics, sports science, and animation, has been increasingly adopted in dance studies. By recording the trajectories, velocities, and spatial relationships between body segments in real time, MoCap represents movement with an accuracy that is difficult to achieve with manual notation or visual estimation Zeng (2025). Such systems enable extensive biomechanical testing, objective comparison between performers, and accurate documentation of choreography that might otherwise be hard to recreate. Consequently, motion capture has become an important resource for researchers, educators, choreographers, performers, and digital artists seeking new forms of creative expression. Figure 1 describes the system architecture in which MoCap data are integrated with AI to analyze dance.
In parallel, advances in AI have transformed how complex motion data can be interpreted.

Figure 1 System Architecture for Dance Movement Analysis Using MoCap and AI

Figure 1 shows the architecture of the system for analyzing dance movements using MoCap and AI.

Machine learning models can classify dance steps, discover stylistic trends, and detect technical errors, whereas deep learning models excel at discovering nuanced forms of expressiveness in large-scale data. AI systems can often reveal trends and relationships that are not obvious even to expert observers. As AI advances, its capacity to offer individualized training, intelligent feedback, and objective performance indicators is only beginning to be realized Zhang and Zhang (2022). The merging of motion capture and AI represents a disruptive change in the analysis of dance performance. Used together, these technologies enable automated skill assessment, multimodal data fusion, and real-time performance monitoring that span the biomechanical, aesthetic, and expressive aspects of dance.

2. Overview of Motion Capture Technology

2.1. Principles and types of motion capture systems

Motion capture (MoCap) technology is the process of recording human movement and converting it into a precise set of numerical data. MoCap uses sensors, markers, or computer vision methods to track key points or body segments and then transforms their trajectories into kinematic descriptions. The underlying principle is that movement can be captured in 3D space at high temporal and spatial resolution, enabling researchers, performers, and analysts to study movement patterns far more precisely than traditional visual observation allows. Motion capture systems come in several types, each based on different technological principles Chen et al. (2024). Marker-based optical systems attach reflective or LED markers to anatomical landmarks, which are tracked by several high-speed cameras that triangulate their positions in space. These systems are highly accurate but require controlled studio settings. In contrast, markerless optical systems use recent computer-vision and machine-learning algorithms to extract skeletal data directly from video footage, making them more flexible and accessible and requiring less initial setup. Inertial measurement units (IMUs) are wearable sensors composed of accelerometers, gyroscopes, and magnetometers that measure the orientations and accelerations of body segments Lauriola et al. (2022). These systems are lightweight, unaffected by camera occlusion, and usable in dynamic conditions. Magnetic and mechanical MoCap systems, which are less common today, provide alternative ways of tracking joint movement using magnetic fields or exoskeletal linkages.
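
The triangulation step used by marker-based optical systems can be illustrated with a minimal sketch. It assumes two calibrated cameras whose 2D marker detections have already been converted into 3D rays (camera centre plus direction in world coordinates) and applies the simple midpoint method; commercial systems typically solve a least-squares problem over many cameras, so the function `triangulate_midpoint` and its inputs are hypothetical.

```python
# Midpoint triangulation of one marker from two camera rays (simplified sketch).
# Each ray is origin + t * direction, with origin = camera centre in world coordinates.


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def _scale(a, s):
    return tuple(x * s for x in a)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(o1, d1, o2, d2):
    """Return the point midway between the closest points of two 3D rays."""
    w0 = _sub(o1, o2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    t = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    s = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(o1, _scale(d1, t))
    p2 = _add(o2, _scale(d2, s))
    return _scale(_add(p1, p2), 0.5)
```

For example, cameras at (0, 0, 0) and (2, 0, 0) whose rays both pass through (1, 1, 1) recover that point; with noisy detections the rays no longer intersect, and the midpoint of their closest approach serves as the estimate.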

2.2. Advantages over Traditional Observation Methods

Motion capture technology offers numerous advantages over traditional observation methods and has transformed how movement is captured, analyzed, and perceived. Traditional observation, whether through expert assessment, visual inspection, or video review, relies on subjective interpretation, lacks precision, and requires manual work Lund et al. (2023). MoCap systems record motion at fine spatial and temporal resolution, allowing accurate measurement of joint angles, velocities, accelerations, and minute kinematic changes that may be hard or impossible to detect manually. Such detail supports biomechanical measurement and the detection of technique deviations, and it enables comparison of results across performers, styles, or training conditions Kakani et al. (2020). Consistency and repeatability are another major advantage. Traditional assessments may depend on the observer's experience, fatigue, or personal judgment, whereas the standardized data provided by MoCap remain stable over time, making it feasible to track performance longitudinally and to replicate research results. Table 1 reviews existing systems and studies that combine MoCap and AI for dance analysis.
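
The joint-angle and velocity measurements mentioned above can be sketched as follows, assuming 3D marker positions for three landmarks (for example hip, knee, and ankle) sampled at a fixed frame rate. The helper names are hypothetical, and the central-difference derivative is one common choice rather than a method prescribed by any particular MoCap system.

```python
import math


def joint_angle(proximal, joint, distal):
    """Angle in degrees at `joint` between the segments joint->proximal and joint->distal."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0:
        raise ValueError("markers must not coincide with the joint centre")
    cos_theta = sum(a * b for a, b in zip(u, v)) / norm
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def angular_velocity(angles_deg, fps):
    """Finite-difference angular velocity in deg/s.

    Interior frames use central differences; the first and last frames use
    one-sided differences.
    """
    if len(angles_deg) < 2:
        raise ValueError("need at least two frames")
    dt = 1.0 / fps
    vel = [(angles_deg[1] - angles_deg[0]) / dt]
    for i in range(1, len(angles_deg) - 1):
        vel.append((angles_deg[i + 1] - angles_deg[i - 1]) / (2 * dt))
    vel.append((angles_deg[-1] - angles_deg[-2]) / dt)
    return vel
```

Applying `joint_angle` frame by frame to hip, knee, and ankle markers yields a knee-angle series, which `angular_velocity` then differentiates; in practice the raw series is usually low-pass filtered first, because differentiation amplifies marker noise.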

Table 1 Review of Existing Systems and Studies Integrating MoCap and AI for Dance Analysis

| MoCap Type | AI Method | Dance Application | Key Contribution | Limitations |
|---|---|---|---|---|
| Optical (marker-based) | SVM Classification | Step Recognition | Early automated labeling of ballet steps | Requires controlled lab setup |
| IMU-based Patrício and Rieder (2018) | ML Regression | Movement Quality Analysis | Developed metrics for fluidity and energy | Limited stylistic diversity in dataset |
| Markerless Vision Pattnaik et al. (2021) | Neural Networks | Aesthetic + Expressive Mapping | Linked emotional intent with movement shapes | Hard to generalize emotion mapping |
| Depth Sensors | CNN + LSTM | Pattern Recognition | Accurate 3D pose estimation for fast dance | Sensitive to lighting/occlusion |
| Optical | K-NN Classifier | Cultural Dance Studies | Preserved traditional dance through MoCap | Small participant sample |
| IMU Vrontis et al. (2022) | Time-Series ML | Balance and Stability Training | Quantified center-of-mass tracking | Lower precision than optical |
| Markerless AI MoCap | Deep Pose Estimation | Virtual Performance | Real-time avatar mapping | Not optimized for high-speed dance |
| Hybrid MoCap | ML Feature Fusion | Choreography Analysis | Combined video + MoCap for accuracy | Complex calibration required |
| Vision-only Goel et al. (2022) | PoseNet/MoveNet | Dance Tracking | Lightweight, fast pose estimation | Limited for floorwork and rotation |
| Optical + VR | Pattern Recognition | VR Choreography Learning | Immersive training module | High equipment cost |
| Markerless Vision | Multimodal Deep Learning | Expressive Gesture Study | Linked audio + motion expression | High computational demand |
| Optical Kim et al. (2021) | Algorithmic Mapping | Choreographic Documentation | Digital notation of choreographies | Primarily qualitative visualizations |
| Vision + 3D Model | Parametric Body Modeling | Body Reconstruction | Accurate dancer shape modeling | Struggles with loose costumes |
| IMU Suit | Automated Scoring AI | Technique Evaluation | Practical tool for studios | Drift over long sessions |

3. Applications of Motion Capture in Dance

3.1. Movement quantification and biomechanical assessment

Motion capture technology is highly relevant to measuring dance movement and to detailed biomechanical evaluation. Classic dance assessment often relies on subjective visual inspection, which may miss fine changes in joint angles, timing, or force distribution. Motion capture systems overcome this limitation by producing high-quality, accurate kinematic data that describe the motion of every body segment in space Zhou et al. (2020). Rotational movements such as turns or spirals can likewise be examined to gain better insight into torque distribution and muscular contraction. Movement quantification also enables comparative analysis across skill levels, genres, or choreographic styles. By building normative datasets, teachers and clinicians can compare a dancer's performance against technical standards Wang et al. (2023).
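
A comparison against a normative dataset could, for instance, express each of a dancer's metrics as a z-score relative to reference samples. The metric names, sample values, and the |z| > 2 flagging threshold below are illustrative assumptions, not published norms.

```python
from statistics import mean, stdev


def z_scores(dancer_metrics, normative_samples):
    """Express each dancer metric as a z-score against a normative sample."""
    scores = {}
    for name, value in dancer_metrics.items():
        samples = normative_samples[name]
        sigma = stdev(samples)  # sample standard deviation; needs >= 2 samples
        if sigma == 0:
            raise ValueError(f"normative samples for {name!r} have zero spread")
        scores[name] = (value - mean(samples)) / sigma
    return scores


def flag_outliers(scores, threshold=2.0):
    """Names of metrics whose |z| exceeds the (illustrative) threshold."""
    return sorted(name for name, z in scores.items() if abs(z) > threshold)
```

With hypothetical norms of 60 to 68 degrees for turnout and 90 to 98 degrees for plié depth, a dancer at 64 degrees turnout scores z = 0, while a plié depth of 110 degrees scores about z = 5.1 and is flagged for review.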

3.2. Choreography Documentation and Reconstruction

Motion capture has also become a very important tool for recording and reconstructing choreography with unprecedented accuracy and efficiency. Conventional recording tools, such as written notation systems or video, often fail to convey the richness of dance, its spatial dimensions, and its expressive nuances. Figure 2 illustrates an architecture for preserving choreography using motion capture. MoCap systems address these shortcomings by tracking the fine details of a dancer's 3D body motion, allowing choreographic works to be archived in detail.

Figure 2 Choreography Preservation Architecture Using Motion Capture

Once the movement data are recorded, choreographic patterns can be recreated using digital avatars or animation models. This lets researchers, performers, and students engage with choreography through an experiential sense of timing, spacing, and movement. Unlike video, which offers a fixed point of view, motion capture allows the camera angle, zoom, and even playback speed to be changed without loss of clarity Wang (2024). This flexibility benefits academic study and helps dancers who need to internalise complicated sequences. MoCap-based reconstruction also opens creative opportunities for choreographers: because virtual environments let them view movement from any perspective, they can experiment with modifications, manipulate spatial patterns, or add digital effects in pursuit of new artistic directions Sumi (2025).
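
Because MoCap stores 3D coordinates rather than pixels, slowing playback or changing the viewing angle reduces to simple geometry. The sketch below assumes a z-up coordinate system and uses linear interpolation to synthesize in-between frames; production tools may use spline or quaternion interpolation instead, and the function names are hypothetical.

```python
import math


def lerp(p, q, alpha):
    """Linear interpolation between two 3D points."""
    return tuple(a + alpha * (b - a) for a, b in zip(p, q))


def slow_motion(trajectory, factor):
    """Resample a marker trajectory to play `factor` times slower at the same frame rate.

    Factors below 1 speed playback up instead.
    """
    n = len(trajectory)
    if n < 2 or factor <= 0:
        raise ValueError("need >= 2 frames and a positive factor")
    out = []
    for j in range(int(round((n - 1) * factor)) + 1):
        pos = min(j / factor, n - 1)  # fractional source-frame index
        i = min(int(pos), n - 2)
        out.append(lerp(trajectory[i], trajectory[i + 1], pos - i))
    return out


def rotate_view(points, degrees):
    """Rotate points about the vertical z-axis (z-up convention assumed)."""
    th = math.radians(degrees)
    c, s = math.cos(th), math.sin(th)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]
```

A factor of 2 inserts one interpolated frame between each pair of captured frames, so a phrase captured at 120 fps can be shown at half speed with no repeated frames, and `rotate_view` lets the same data be inspected from the side or from behind.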

3.3. Training, Feedback, and Performance Enhancement

Motion capture technology is an important innovation in dance training because it enables more precise feedback and data-driven performance improvement. In traditional coaching, the instructor provides feedback based on visual observation and verbal cues; MoCap, by contrast, provides objective measurements that reveal even the slightest technical deviations, which might go unnoticed in real time. By studying key variables such as joint alignment, weight distribution, and timing, dancers can gain a deeper understanding of their body motion and of where it needs correction Wallace et al. (2024). Real-time feedback systems are among the most radical uses of MoCap in training. They let dancers watch live visualizations of their performance compared with ideal models or expert reference performances. Digital superimpositions, skeletal models, or AR displays can highlight differences between planned and performed movements. This immediate feedback accelerates learning, strengthens motor memory, and supports self-correction without constant instructor intervention Yang (2022). Progress tracking with motion capture data also supports long-term performance enhancement.
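
A real-time feedback system of the kind described above ultimately compares performed joint angles with a reference model. The following minimal sketch assumes both are available as per-joint angles in degrees for the current frame; the joint names and the 5-degree tolerance are hypothetical.

```python
def compare_to_reference(performed, reference, tolerance_deg=5.0):
    """Per-joint feedback for angles deviating from the reference beyond a tolerance.

    Both arguments map joint names to angles in degrees for the current frame.
    Joints missing from `performed` (e.g. occluded) are skipped.
    """
    feedback = []
    for joint, target in reference.items():
        if joint not in performed:
            continue
        error = performed[joint] - target
        if abs(error) > tolerance_deg:
            action = "increase" if error < 0 else "decrease"
            feedback.append(f"{joint}: {action} angle by {abs(error):.1f} deg")
    return feedback
```

Called once per frame, this returns short messages such as `"left_knee: increase angle by 10.0 deg"` that an overlay or AR display could render next to the corresponding joint.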

4. Role of Artificial Intelligence in Dance Analysis

4.1. Machine learning for movement classification

Machine learning (ML) has emerged as a disruptive technology in dance analysis, especially for identifying movement patterns across styles, techniques, and performance levels. In contrast to conventional observational methods that depend on expert interpretation, ML algorithms learn directly from motion information, i.e. joint positions, paths, and timing, to automatically detect the distinguishing features of particular dance steps or step sequences. Supervised learning is common: annotated dance-movement data enable algorithms to learn mappings between input data and labels. Support vector machines, decision trees, and k-nearest neighbor techniques have been used for step recognition, pose identification, and rhythm-based pattern classification. Such computational classification can differentiate subtle technical variations that may not be apparent to the naked eye. For example, ML models can detect variations in foot placement, turnout, or arm pathways in ballet exercises. By studying movement dynamics or energy patterns, they can also distinguish between stylistic categories, such as contemporary and hip-hop.
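
As an illustration of supervised step classification, the sketch below implements k-nearest neighbours from scratch on two hypothetical features (knee angle in degrees, where 180 is straight, and heel height in centimetres). The training data are invented for demonstration; in practice features would be standardized and extracted from real annotated MoCap recordings.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Predict a label by majority vote among the k nearest training examples.

    `train` is a list of (feature_vector, label) pairs. Ties in the vote are
    broken in favour of the label encountered first, i.e. the nearer one.
    """
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training examples")
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical features: (knee angle in degrees, heel height in cm).
TRAIN = [
    ((120.0, 0.0), "plie"), ((115.0, 0.5), "plie"), ((125.0, 0.2), "plie"),
    ((175.0, 8.0), "releve"), ((178.0, 9.0), "releve"), ((172.0, 7.5), "releve"),
]
```

Without standardization, the knee angle (spanning tens of degrees) dominates the Euclidean distance over heel height (a few centimetres), which is why real pipelines usually z-normalize each feature before training.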

4.2. Deep Learning for Pattern Recognition and Style Detection

Deep learning (DL) extends the analytical capacity of artificial intelligence by enabling models to learn intricate hierarchical patterns from large volumes of movement data. Unlike conventional ML models, which rely on manually derived features, DL models automatically learn useful spatial and temporal features from raw motion capture data, video recordings, or skeletal sequences. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) such as long short-term memory (LSTM) networks have been particularly successful in processing dance, as they are effective at spatial reasoning and time-series prediction. These models are well suited to recognizing the subtle aspects of movement that define a dancer's style or expressive quality. For example, DL algorithms can distinguish stylistic signatures such as fluidity in contemporary dance, percussive isolations in hip-hop, or precision of line in classical ballet. They can also detect recurring patterns, rhythms, or gestures that give a choreography its identity. Because its features are learned across multiple layers, deep learning can capture cues that may not be evident to human viewers. Beyond stylistic analysis, DL supports expressive interpretation by identifying emotional content conveyed through speed, amplitude, or dynamic variation. This capability is particularly useful in dance therapy and in creative inquiry into embodied affect.
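
The core operation a CNN applies to movement time series can be shown in plain Python: a 1D convolution, a ReLU, and global max pooling over time. Here the kernel `[-1, 0, 1]` is set by hand to respond to rapid changes, which is an assumption for illustration; in a trained network the kernel weights are learned from data, and many such kernels are stacked across layers.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1D cross-correlation, the operation DL libraries call convolution."""
    k = len(kernel)
    if len(signal) < k:
        raise ValueError("signal shorter than kernel")
    return [sum(signal[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]


def relu(values):
    return [max(0.0, v) for v in values]


def temporal_feature(signal, kernel, bias=0.0):
    """One CNN-style feature: convolution, then ReLU, then global max pooling over time."""
    return max(relu(conv1d(signal, kernel, bias)))


# Hand-set (not learned) kernel that responds to values rising over two frames.
EDGE_KERNEL = [-1.0, 0.0, 1.0]
```

Applied to a steady ramp in wrist speed such as `[0, 1, 2, 3, 4, 5]` this feature returns 2.0, while a sudden percussive hit such as `[0, 0, 0, 5, 5, 5]` returns 5.0, illustrating how a convolutional filter can separate sharp isolations from fluid motion.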

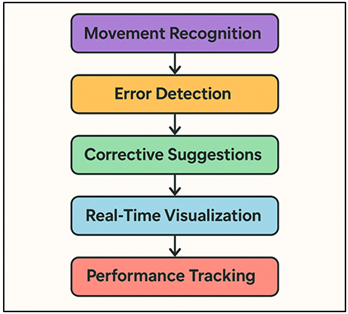

4.3. AI-Assisted Feedback and Correction Systems

AI-supported feedback systems are among the most influential applications of artificial intelligence in dance training and performance refinement. These systems process movement data from motion capture, camera tracking, or wearable sensors in real time and give dancers direct, actionable feedback. By comparing a dancer's performance with expert models or predefined standards, AI algorithms identify misalignment, timing errors, and biomechanical inefficiencies with high precision.

Figure 3 Flowchart of AI-Based Movement Analysis System

A typical AI feedback loop consists of movement recognition, error identification, and the generation of customized correction recommendations. Figure 3 is a flowchart illustrating the steps of an AI-based movement analysis system. For example, if a dancer consistently under-rotates a pirouette, the system can point to insufficient torque generation or incorrect spotting. Similarly, AI can detect faulty landing technique in a jump and suggest adjustments to knee position or weight distribution to prevent injury. Real-time visualization tools, such as skeletal overlays, augmented-reality markers, or energy-flow maps, further enhance this feedback. These interactive features support motor learning because dancers can self-correct without depending on instructor guidance. AI-assisted systems also support long-term skill development through performance tracking and individualized training plans. By analyzing trends over several sessions, the algorithms can pinpoint technical flaws that persist across sessions and identify areas that can be progressively optimized.
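
One way such a system might detect pirouette under-rotation is to accumulate the change in pelvis yaw across the turn. The sketch assumes yaw angles in degrees wrapped to [-180, 180) and a frame rate high enough that the pelvis rotates less than 180 degrees between frames; the 20-degree tolerance and the function names are hypothetical.

```python
def total_rotation(yaw_deg):
    """Accumulated signed rotation (degrees) from a yaw series wrapped to [-180, 180)."""
    total = 0.0
    for prev, curr in zip(yaw_deg, yaw_deg[1:]):
        # Shortest signed angular difference, so crossing +/-180 is handled.
        total += (curr - prev + 180.0) % 360.0 - 180.0
    return total


def check_pirouette(yaw_deg, target_turns=1, tolerance_deg=20.0):
    """Return (status, error_deg) comparing achieved rotation with the target."""
    error = abs(total_rotation(yaw_deg)) - 360.0 * target_turns
    if error < -tolerance_deg:
        return "under-rotated", error
    if error > tolerance_deg:
        return "over-rotated", error
    return "ok", error
```

Taking the absolute value makes the check independent of turn direction; a yaw trace that stops three quarters of the way around a single pirouette is reported as under-rotated by 90 degrees.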

5. Integrating Motion Capture and AI

5.1. Data fusion techniques for comprehensive analysis

Integrating motion capture and artificial intelligence begins with effective data fusion: combining multiple data streams to build a more precise and complete representation of dance movement. Motion capture systems provide highly accurate and precise kinematic information, while video, inertial devices, audio, and physiological measurements supply complementary context that enriches interpretation. Fusion techniques align, harmonize, and weight these modalities so that AI methods can analyze them jointly. A common approach is feature-level fusion, in which data from disparate sensors are combined into a single feature set that is then processed by machine learning models. This approach captures complex associations among motion pathways, timing cues, and expressiveness. Decision-level fusion, in contrast, combines the outputs of multiple AI models, exploiting the strengths of each to perform classification or evaluation more effectively. Techniques such as Bayesian inference, Kalman filtering, and deep multimodal architectures are used to reduce noise, correct errors, and improve the consistency of motion interpretation.
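
The two fusion strategies described above can be contrasted in a minimal sketch. Feature-level fusion normalizes each modality and concatenates the results into one vector; decision-level fusion averages class probabilities from separate models with weights. The modality statistics, class labels, and equal weights are illustrative assumptions, and the sketch assumes the streams are already time-aligned.

```python
def feature_level_fusion(modalities):
    """Z-normalize each modality's features and concatenate them into one vector.

    `modalities` is a list of (features, means, stds) triples, one per sensor
    stream, assumed to be time-aligned already.
    """
    fused = []
    for features, means, stds in modalities:
        fused.extend((f - m) / s for f, m, s in zip(features, means, stds))
    return fused


def decision_level_fusion(model_outputs, weights):
    """Weighted average of per-model class probabilities; returns (best_label, fused)."""
    total = float(sum(weights))
    fused = {}
    for probs, w in zip(model_outputs, weights):
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + w * p / total
    return max(fused, key=fused.get), fused
```

For example, if a video model rates a movement 0.6 jeté / 0.4 pirouette and an IMU model rates it 0.2 / 0.8, equal-weight decision fusion yields 0.4 / 0.6 and selects pirouette; the weights could instead reflect each model's validation accuracy.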

5.2. Automated Skill Evaluation and Scoring Models

Automated skill assessment systems use motion capture and AI to offer objective, consistent, and scalable evaluation of dance performance. Traditional evaluation relies on expert judgment, which is subjective and may therefore vary between judges or be limited by what the eye can perceive. AI-based scoring models address this by analyzing movement data quantitatively, extracting features associated with technical mastery, timing, spatial control, and expressiveness. These systems typically begin by deriving key performance indicators from MoCap data, such as joint angles, stability patterns, coordination measures, and rhythmic synchronization. Machine learning models then compare the dancer's profile against professional standards or reference models. Regression and classification methods produce quantitative scores that highlight areas of excellence and identify technical deficiencies. Deep learning models further improve scoring accuracy by capturing complex, non-linear relationships between movement patterns and skill level. They can detect minute anomalies, such as micro-timing mistakes or inefficient movement paths, that the human eye may miss. When trained on large datasets, such models can become increasingly reliable over time, supporting standardized evaluation across styles and experience levels.
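
A regression-based scoring model can be reduced, for illustration, to fitting a line between a single composite performance indicator and expert-assigned scores. The sketch below uses ordinary least squares in closed form and clips predictions to a hypothetical 0 to 10 scale; real scoring systems would use many indicators, held-out validation, and often non-linear models.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept (closed form)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("indicator values must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def predict_score(model, indicator, lo=0.0, hi=10.0):
    """Predict a score from the fitted line and clip it to the scoring scale."""
    slope, intercept = model
    return max(lo, min(hi, slope * indicator + intercept))
```

Clipping keeps predictions on the judges' scale even for indicator values outside the range seen during fitting, where a linear model would otherwise extrapolate beyond the maximum score.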

5.3. Real-Time Performance Tracking and Adaptive Coaching

Combining motion capture and AI also enables real-time performance tracking, changing how dancers receive feedback and refine their technique. As body movement is continuously monitored, AI algorithms process balance, alignment, velocity, and coordination parameters in real time. This immediate processing lets dancers adjust their technique during performance, making training sessions more efficient and responsive. Real-time systems commonly use lightweight inertial sensors, markerless vision-based tracking, or hybrid MoCap setups so that they are portable and usable both in the studio and on stage. AI models analyze the incoming data streams to flag deviations from optimal form, indicate asymmetries, or alert dancers to timing variations. Adaptive coaching extends this capability further. Such systems dynamically tailor feedback to the dancer's changing skill level, physical condition, and performance objectives. For example, if a dancer's hip alignment improves but upper-body control remains weak, the system can shift its focus to the upper body. Over the long term, AI may be able to detect individual learning styles and suggest specific exercises or adjustments that reduce injury risk and improve performance.
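
The adaptive-coaching behaviour described above, shifting focus from hip alignment to the upper body once the hips improve, can be sketched with rolling averages. The class below keeps a sliding window per metric, normalizes each deviation by a tolerance so that metrics with different units are comparable, and reports the metric furthest from its target. The class name, metric names, targets, tolerances, and window size are hypothetical.

```python
from collections import deque


class RollingCoach:
    """Sliding-window monitor that picks the metric most in need of attention."""

    def __init__(self, targets, window=30):
        # targets: metric name -> (target value, tolerance); both hypothetical.
        self.targets = targets
        self.history = {name: deque(maxlen=window) for name in targets}

    def update(self, frame_metrics):
        """Add one frame of measurements; unknown metrics are ignored."""
        for name, value in frame_metrics.items():
            if name in self.history:
                self.history[name].append(value)

    def normalized_error(self, name):
        """|rolling mean - target| / tolerance, or None if no data yet."""
        values = self.history[name]
        if not values:
            return None
        target, tolerance = self.targets[name]
        return abs(sum(values) / len(values) - target) / tolerance

    def focus(self):
        """Metric with the largest normalized deviation (the current coaching focus)."""
        errors = {n: self.normalized_error(n) for n in self.targets}
        errors = {n: e for n, e in errors.items() if e is not None}
        return max(errors, key=errors.get) if errors else None
```

Because each `deque` has a fixed `maxlen`, old frames drop out automatically, so once hip tilt returns to target the window forgets the earlier errors and the focus moves to whichever metric is now furthest off.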

6. Case Studies and Current Implementations

6.1. Academic research prototypes

Academic research has been instrumental in integrating motion capture and artificial intelligence for dance performance analysis. Universities and interdisciplinary laboratories have developed numerous prototypes that combine technical innovation with artistic application. These projects typically rely on highly detailed movement data to understand biomechanical efficiency, expressive style, or choreographic composition. Most prototypes combine marker-based motion capture with pose estimation, joint mapping, and temporal segmentation algorithms to study how dancers execute technical elements such as leaps, turns, and transitions. In research settings, AI is frequently applied through machine learning models that distinguish movement types, identify mistakes, or characterize stylistic differences between performers. For instance, researchers have developed models that distinguish beginner from expert dancers based on rhythmic consistency, joint articulation, and balance mechanisms. Deep learning networks have also been used to learn features directly from raw data, with applications in affective computing and embodied cognition. Other prototypes explore experimental uses, such as the dynamic creation of digital avatars or interactive installations in which AI responds dynamically to dancers' movements. These projects show that MoCap and AI can simultaneously enhance artistic expression and support training and analysis.
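
Rhythmic consistency, one of the cues mentioned for separating beginners from experts, could be quantified from foot-strike times. The sketch assumes step onsets in seconds and a steady beat starting at t = 0 with a known period; the two measures (coefficient of variation of inter-step intervals and mean offset from the nearest beat) are illustrative choices, not features reported by any specific prototype.

```python
from statistics import mean, pstdev


def interval_cv(step_times):
    """Coefficient of variation of inter-step intervals (0 means perfectly even)."""
    if len(step_times) < 2:
        raise ValueError("need at least two step onsets")
    intervals = [b - a for a, b in zip(step_times, step_times[1:])]
    return pstdev(intervals) / mean(intervals)


def mean_beat_offset_ms(step_times, beat_period):
    """Mean absolute distance (ms) from each step to the nearest beat at k * beat_period."""
    offsets = []
    for t in step_times:
        phase = t % beat_period
        offsets.append(min(phase, beat_period - phase))
    return 1000.0 * mean(offsets)
```

Steps landing exactly every 0.5 s on a 0.5 s beat score zero on both measures; slightly early and late steps at 0.55, 0.95, and 1.6 s raise the interval variation and produce a mean beat offset of about 40 ms.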

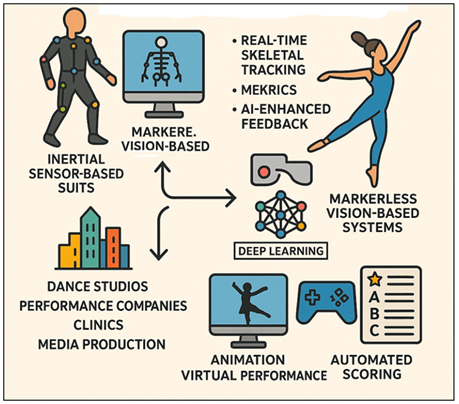

6.2. Commercial Tools and Industry Adoption

Commercial motion capture and AI tools have grown rapidly, making sophisticated movement analysis available to dancers, teachers, and production professionals. Companies that develop MoCap technology, whether optical systems, inertial sensors, or markerless tracking systems, have also incorporated AI-driven analytics to improve usability and performance insight. Figure 4 summarizes motion capture technologies and their various industry applications. These tools are increasingly used in dance studios, performance companies, rehabilitation clinics, and interactive media production.

Figure 4 Motion Capture Technologies and Their Industry Applications

Inertial sensor-based suits have become particularly popular because of their cost, portability, and ease of use. These systems deliver real-time skeletal tracking and automatically generate metrics on posture, alignment, and movement efficiency. AI-enhanced platforms analyze these data to provide instant feedback, making them useful for training and coaching. Markerless vision-based systems have also become mainstream products; their deep learning algorithms track dancers without any wearable components, making them well suited to classrooms, large rehearsals, and theatrical performances.

6.3. Comparative Analysis of System Performance

Comparing the performance of different motion capture and AI-integrated systems is necessary to determine which are best suited to particular dance applications. Although high-end optical systems remain the gold standard of accuracy, with millimeter-level precision and rich biomechanical information, they require controlled conditions and extensive setup. Inertial measurement unit (IMU) systems are more flexible and cheaper than optical systems but are more prone to accuracy degradation during high-speed or rotational movement because of drift and magnetometer interference. Markerless systems are highly accessible and comfortable, although they are susceptible to occlusion errors, particularly during floorwork or when intricate movements hide parts of the body. Integrating AI also strongly affects the overall output of these systems. Machine learning algorithms can correct errors by predicting missing data, smoothing trajectories, or compensating for sensor drift. Deep learning-based pose estimation can substantially improve video-based tracking, narrowing the gap between markerless and marker-based capture. However, the success or failure of AI models depends heavily on the quality and diversity of their training datasets, which tend to be limited for dance. Performance also varies across evaluation criteria such as robustness, latency, user experience, and computational efficiency. Real-time applications prioritize low latency and smooth visualization, whereas biomechanical research demands high precision and repeatability. For artistic installations or choreographic tools and techniques, aesthetic fluidity and responsiveness may matter more than numerical accuracy.
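
The drift problem noted for IMU systems can be made concrete. Integrating a gyroscope with a constant bias of 0.5 deg/s at 100 Hz accumulates about 30 degrees of error after 60 seconds, whereas a complementary filter that blends in an accelerometer tilt estimate keeps the error bounded. The bias, sampling rate, and filter weight `alpha = 0.98` are assumed values; note that accelerometer correction applies only to tilt (pitch and roll), and heading correction would rely on a magnetometer, which is itself subject to interference.

```python
def integrate_gyro(rates_dps, dt, angle0=0.0):
    """Dead-reckon an angle by integrating gyroscope rates (deg/s); bias accumulates."""
    angle = angle0
    for rate in rates_dps:
        angle += rate * dt
    return angle


def complementary_filter(rates_dps, accel_angles_deg, dt, alpha=0.98, angle0=0.0):
    """Blend integrated gyro rate (weight alpha) with accelerometer tilt (weight 1 - alpha)."""
    angle = angle0
    for rate, accel_angle in zip(rates_dps, accel_angles_deg):
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
    return angle


# Simulated still pose: true tilt 0 deg, gyro bias 0.5 deg/s, 60 s at 100 Hz.
DT = 0.01
N = 6000
BIASED_GYRO = [0.5] * N
ACCEL_TILT = [0.0] * N
```

In this simulation the filtered estimate settles at alpha * bias * dt / (1 - alpha) = 0.245 degrees instead of growing without bound, which illustrates why fused orientation estimates hold up better over long sessions than raw gyroscope integration.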

7. Conclusion

The merging of motion capture technology and artificial intelligence represents a transformative approach to the research, practice, and future development of dance. Together, these tools help bridge the long-standing gap between the creative and the scientific, offering new perspectives on the understanding, interpretation, and preservation of human movement. Optical, inertial, and markerless motion capture systems provide highly accurate and objective measurement of technique, biomechanics, and choreography. This accuracy allows dancers, instructors, scientists, and even clinicians to investigate attributes of movement that escape the human eye, fostering a deeper appreciation of technical skill and expressive subtlety. Artificial intelligence makes this process even more effective, as it can process complex motion data, extract relevant patterns, and generate personalized insights. Machine learning and deep learning models can perform automated classification, stylistic analysis, and expressive recognition, while AI-based feedback systems offer real-time corrective feedback that accelerates learning and promotes safer, more efficient movement habits. These innovations not only improve performance but also open greater creative opportunities for choreographers and digital artists. The combination of MoCap and AI continues to advance through the development of data fusion, automated scoring, and adaptive coaching systems. As high-quality technology becomes more widely available, these tools are making quality dance analysis easier, more convenient, and more affordable for a broad community.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Chen, Y., Wang, H., Yu, K., and Zhou, R. (2024). Artificial Intelligence Methods in Natural Language Processing: A Comprehensive Review. Highlights in Science, Engineering and Technology, 85, 545–550. https://doi.org/10.54097/vfwgas09

Goel, P., Kaushik, N., Sivathanu, B., Pillai, R., and Vikas, J. (2022). Consumers’ Adoption of Artificial Intelligence and Robotics in Hospitality and Tourism: A Literature Review and Future Research Agenda. Tourism Review, 77(4), 1081–1096. https://doi.org/10.1108/TR-03-2021-0138

Kakani, V., Nguyen, V. H., Kumar, B. P., Kim, H., and Pasupuleti, V. R. (2020). A Critical Review on Computer Vision and Artificial Intelligence in the Food Industry. Journal of Agriculture and Food Research, 2, Article 100033. https://doi.org/10.1016/j.jafr.2020.100033

Kim, S. S., Kim, J., Badu-Baiden, F., Giroux, M., and Choi, Y. (2021). Preference for Robot Service or Human Service in Hotels? Impacts of the COVID-19 Pandemic. International Journal of Hospitality Management, 93, Article 102795. https://doi.org/10.1016/j.ijhm.2020.102795

Lauriola, I., Lavelli, A., and Aiolli, F. (2022). An Introduction to Deep Learning in Natural Language Processing: Models, Techniques, and Tools. Neurocomputing, 470, 443–456. https://doi.org/10.1016/j.neucom.2021.05.103

Lund, B. D., Wang, T., Mannuru, N. R., Nie, B., Shimray, S., and Wang, Z. (2023). ChatGPT and a New Academic Reality: Artificial Intelligence–Written Research Papers and the Ethics of Large Language Models in Scholarly Publishing. Journal of the Association for Information Science and Technology, 74(5), 570–581. https://doi.org/10.1002/asi.24750

Patrício, D. I., and Rieder, R. (2018). Computer Vision and Artificial Intelligence in Precision Agriculture for Grain Crops: A Systematic Review. Computers and Electronics in Agriculture, 153, 69–81. https://doi.org/10.1016/j.compag.2018.08.001

Pattnaik, P., Sharma, A., Choudhary, M., Singh, V., Agarwal, P., and Kukshal, V. (2021). Role of Machine Learning in the Field of Fiber Reinforced Polymer Composites: A Preliminary Discussion. Materials Today: Proceedings, 44, 4703–4708. https://doi.org/10.1016/j.matpr.2020.11.026

Sumi, M. (2025). Simulation of Artificial Intelligence Robots in Dance Action Recognition and Interaction Processes Based on Machine Vision. Entertainment Computing, 52, Article 100773. https://doi.org/10.1016/j.entcom.2024.100773

Vrontis, D., Christofi, M., Pereira, V., Tarba, S., Makrides, A., and Trichina, E. (2022). Artificial Intelligence, Robotics, Advanced Technologies and Human Resource Management: A Systematic Review. The International Journal of Human Resource Management, 33(6), 1237–1266. https://doi.org/10.1080/09585192.2020.1871398

Wallace, B., Nymoen, K., Torresen, J., and Martin, C. P. (2024). Breaking from Realism: Exploring the Potential of Glitch in AI-Generated Dance. Digital Creativity, 35(2), 125–142. https://doi.org/10.1080/14626268.2024.2327006

Wang, Z. (2024). Artificial Intelligence in Dance Education: Using Immersive Technologies for Teaching Dance Skills. Technology in Society, 77, Article 102579. https://doi.org/10.1016/j.techsoc.2024.102579

Wang, Z., Deng, Y., Zhou, S., and Wu, Z. (2023). Achieving Sustainable Development Goal 9: Enterprise Resource Optimization Based on Artificial Intelligence Algorithms. Resources Policy, 80, Article 103212. https://doi.org/10.1016/j.resourpol.2022.103212

Yang, L. (2022). Influence of Human–Computer Interaction–Based Intelligent Dancing Robots and Psychological Constructs on Choreography. Frontiers in Neurorobotics, 16, Article 819550. https://doi.org/10.3389/fnbot.2022.819550

Zeng, D. (2025). AI-Powered Choreography Using a Multilayer Perceptron Model for Music-Driven Dance Generation. Informatica, 49(2), 137–148. https://doi.org/10.31449/inf.v49i20.8103

Zhang, L., and Zhang, L. (2022). Artificial Intelligence for Remote Sensing Data Analysis: A Review of Challenges and Opportunities. IEEE Geoscience and Remote Sensing Magazine, 10(4), 270–294. https://doi.org/10.1109/MGRS.2022.3145854

Zhou, G., Zhang, C., Li, Z., Ding, K., and Wang, C. (2020). Knowledge-Driven Digital Twin Manufacturing Cell Towards Intelligent Manufacturing. International Journal of Production Research, 58(4), 1034–1051. https://doi.org/10.1080/00207543.2019.1607978

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.