ShodhKosh: Journal of Visual and Performing Arts

ISSN (Online): 2582-7472


AI-Generated Compositions in Music Education

Tanveer Ahmad Wani 1, Shilpi Sarna 2, Shikhar Gupta 3, Dr. Supriya Rai 4, Jaskirat Singh 5, Neha Arora 6, Pranali Chavan 7

1 Professor, School of Sciences, Noida International University, 203201, India

2 Greater Noida, Uttar Pradesh 201306, India

3 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

4 Associate Professor, Department of Management Studies, JAIN (Deemed-to-be University), Bengaluru, Karnataka, India

5 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

6 Assistant Professor, Department of Journalism and Mass Communication, Vivekananda Global University, Jaipur, India

7 Department of Computer Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

ABSTRACT

The introduction of Artificial Intelligence (AI) into the creative process has changed how music is composed, analyzed, and learned. AI-generated compositions give educators and learners new tools for fostering creativity, practicing composition, and interpreting musical form. This paper explains how AI has become an increasingly potent tool in music education as it has evolved into neural-network-based systems such as AIVA, Amper Music, and MuseNet. Using a mixed-methods design that combines surveys, interviews, and classroom activities, the paper examines how AI-assisted tools affect students' engagement, creativity, and compositional abilities. The findings indicate that AI-generated music can enhance appreciation of compositional processes as well as experimentation and collaboration. Nevertheless, the study also identifies obstacles, including ethical issues relating to authorship, possible over-dependence on technology, and the need to balance intuition and algorithms. Case studies demonstrate effective educational applications and show that teacher facilitation and curriculum integration are the most effective ways to realize AI's pedagogical potential.


Received 24 February 2025

Accepted 21 June 2025

Published 20 December 2025

Corresponding Author: Tanveer Ahmad Wani, tanveer.ahmad@niu.edu.in

DOI 10.29121/shodhkosh.v6.i3s.2025.6794

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Artificial Intelligence, Music Education, AI-Generated Composition, Creativity, Educational Technology

1. INTRODUCTION

1.1. Background of Artificial Intelligence in creative arts

Artificial Intelligence (AI) has increasingly become a disruptive influence in the creative arts, transforming traditional understandings of authorship, creativity, and artistic output. Although originally created as a computational problem-solver, AI has since developed into a creative partner that can generate visual art, literature, film scripts, and musical compositions. Its ability to handle large volumes of data, identify patterns, and imitate aspects of creative thinking allows artists to explore new areas of expression beyond the limits of individual human ability. AI algorithms can learn from large datasets of music and recreate styles, harmonies, and emotional expressiveness that used to take years of human experience to build. The intersection of AI and art has spawned an interdisciplinary field of inquiry that combines technology, psychology, and aesthetics and that questions the boundary between human and machine creativity Ma et al. (2024). Artists are adopting AI as a co-creator, using machine learning models to generate art, filter compositions, or create interactive art experiences. Rather than replacing the human imagination, AI expands it and provides new possibilities for experimentation and interpretation. As this synergy grows, learning structures will need to be reconsidered so that the next generation of artists is equipped to collaborate with, rather than oppose, intelligent creative technologies Briot and Pachet (2020).

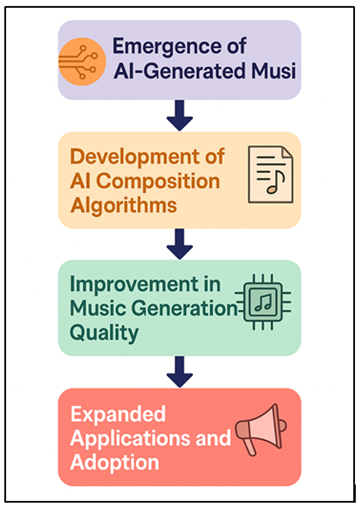

1.2. Emergence of AI-Generated Music and Its Growing Role

The advent of AI-generated music is a major advance in both technology and musical creativity. Early systems of the 1950s, including the Illiac Suite by Lejaren Hiller, demonstrated that algorithms could create structured, aesthetically pleasing music. Over time, improvements in machine learning and neural networks have enabled AI to create elaborate melodies, harmonies, and even entire orchestral arrangements that can be hard to distinguish from human compositions Ji et al. (2023). Figure 1 shows how the role of AI-generated music in contemporary education has been changing. Current AI systems such as MuseNet, AIVA, and Amper Music analyze large databases of musical compositions to produce original music across genres, moods, and styles.

Figure 1 Evolution and Growing Role of AI-Generated Music in Education

These tools have found their way into both professional and academic environments, where musicians and students alike can experiment with composition beyond the bounds of traditional pedagogy. The use of AI is not limited to composition: it also supports the creation, organization, and even analysis of music, offering insight into how a piece is structured and how creative it is Hernandez-Olivan and Beltran (2021). In an educational setting, practical experimentation with AI can help learners bridge the gap between analysis and composition.

1.3. Importance of Integrating AI in Music Education

The application of AI in music education is essential for equipping students to succeed in a modern creative environment that is highly technological. Conventional music education focuses on theory, performance, and composition and does not always offer the tools needed to support personalized and adaptive learning. AI technologies address this gap by providing interactive platforms that evaluate, mentor, and motivate students in real time. For example, AI-based systems may study a student's writing, propose harmonic progressions, or create an accompaniment matched to the student's abilities Civit et al. (2022). This integration not only encourages greater creative exploration but also fosters analytical thinking and problem solving. Additionally, AI promotes inclusivity, since learners from various backgrounds can engage in music creation without extensive technical or instrumental training. Educators also find AI useful because it provides data on student performance, allowing them to make better-informed pedagogical decisions. More profoundly, AI changes pedagogy by leading students to perceive music as an active interplay between human emotion and computational intelligence Herremans et al. (2017). By integrating AI into the educational system, schools will be able to prepare a generation of performers who are not only talented creators but also critical thinkers able to address ethical, technical, and artistic problems.

2. Literature Review

2.1. Historical development of AI in music composition

The history of AI in music composition dates to the 1950s, when the first experiments revealed that computers were able to create structured music. A landmark was the composition of the Illiac Suite (1957) by Lejaren Hiller and Leonard Isaacson with the ILLIAC I computer. This piece is commonly regarded as the first major work composed by a computer; it used algorithmic rules, stochastic mechanisms, and early conceptions of generative grammar to create a four-movement string quartet Zhu et al. (2023). In the later 20th century, projects such as Experiments in Musical Intelligence (EMI) applied style analysis of classical composers to create new music resembling their styles, showing that computers could learn a composer's style and produce music reminiscent of human composers Wen et al. (2023). Over the following decades, these initial algorithmic experiments developed into more advanced systems as computing power became available and formal knowledge of music theory matured. Researchers developed formal procedures, probabilistic networks, constraint-based procedures, and finally machine-learning and neural-network-based music generation systems.

2.2. Overview of Existing AI Music Generation Tools and Technologies

AI music generation currently draws on a diverse array of technologies, from rule systems to deep learning, to generate music of varied genres, styles, and sophistication. One of the most notable programs of recent years is AIVA (Artificial Intelligence Virtual Artist), which enables users, both amateurs and experts, to create original compositions in more than 250 different styles. Users are able to add MIDI or audio influences, edit the generated tracks, and export in various formats Briot et al. (2019). Amper Music was another widely used platform. Amper offered a user-friendly interface that let users choose a mood, genre, instrumentation, and tempo and have the AI create a custom instrumental track. It combined algorithmic organization with a library of human-recorded samples, which made it accessible to users with no serious musical background. The original company was bought and the original service was modified, but its history highlights how AI democratized music creation for non-experts Wu et al. (2020). In addition to these, there are numerous other AI applications and platforms (e.g., those listed in modern guides) that run on neural networks, deep learning, or probabilistic algorithms to produce melodies, harmonies, or entire compositions, including full scores fit to be notated or performed. From simple sample-based set-ups to sophisticated neural generation, the AI music tools domain spans a variety of technological solutions Guo et al. (2021). Table 1 summarizes previous research on AI-generated compositions used in music education. The tools differ in complexity, ease of use, customization (style, instrumentation, tempo), and target audience (from amateurs exploring their creativity to experts seeking a boost in their workflow).

Table 1 Summary of Related Work on AI-Generated Compositions in Music Education

| Technology Type | Key Benefits | Challenges | Future Trends |
|---|---|---|---|
| Generative AI and adaptive learning tools (composition assistants, intelligent tutoring) Yu et al. (2023) | Personalized learning, real-time feedback, adaptive ear-training and theory tools | Risk of over-reliance; digital divide; necessary educator training; ethical and pedagogical implications | Growth of AI-based tutoring and assessment; expansion into VR/AR for immersive learning; increased need for AI-literacy among educators |
| Symbolic generation, audio generation, hybrid models (GANs, Transformers, diffusion, etc.) | Extensive compositional and sound-design capabilities; high-fidelity audio; diverse styles/genres | Limitations in originality, long-term structure, evaluation of “quality,” copyright/data-set issues | Hybrid symbolic-audio generation likely to become standard; more robust evaluation metrics; better tools for education and creative workflows |
| AI-powered language models (GPT-style) used for composition guidance, creativity prompts Zhang et al. (2023) | Enhanced creativity, novel ideas, support for divergent/convergent thinking; expands composer’s toolbox | Over-dependence; risk that AI output dominates human input; requirement for pedagogical framing and oversight | Growing use of GPT-like tools for composition teaching; more mixed-modality prompts (text, audio, theory) to stimulate creativity |
| Generative AI as a “scaffold” and creative partner Afchar et al. (2022) | Lowers technical barriers; supports expressive growth; builds confidence and self-regulation | Ethical/pedagogical challenge: ensuring AI supports, not replaces, human creativity; transparency in process | AI frameworks designed for youth: focus on agency, autonomy, and creative growth; emphasis on scaffolded learning rather than output generation |
| Multi-agent AI systems for symbolic music analysis and feedback Messingschlager and Appel (2023) | Enhances pattern recognition, provides explainable feedback, assists composition and analysis pedagogy | Issues of transparency, cultural bias, limitations of evaluation metrics for creative quality | More modular, explainable AI agents likely to be adopted in classrooms; greater emphasis on hybrid evaluation standards combining human and AI-generated criteria |
| Generative AI integration guidelines for classrooms | Promotes responsible, ethical use; raises AI literacy among teachers and students; encourages human-centered creativity | Need for teacher training; need for assessment frameworks; equity and access concerns; data-privacy issues | Increased institutional adoption; development of curriculum standards for AI + music; advocacy for policy and regulation around AI in arts education |
| AI-assisted creative and teaching tools (interactive, technological integration) Williams et al. (2020) | Enhances accessibility, enables creative and interactive music lessons, supports technological fluency | Institutional challenges: resource allocation, integration into curriculum, balancing tradition with innovation | Universities likely to increase AI-integrated music courses; development of hybrid classical-AI curricula; focus on preparing students for contemporary music industry |

3. Methodology

3.1. Research design

This study uses a mixed-methods research design, combining qualitative and quantitative methods to obtain a thorough picture of AI-generated compositions in music education. The qualitative component captures the experiences of educators and students in using AI tools, including their impressions of creativity, learning, and pedagogical change. This information is collected through in-depth interviews, open-ended questionnaires, and classroom observations. The quantitative component, in turn, assesses the efficacy of AI-aided learning using performance indicators, creativity ratings, and engagement rates measured before and after the introduction of AI tools in music teaching. Combining these methods allows the data to be triangulated, which supports reliability and validity and helps connect subjective perceptions with objective outcomes. This hybrid model provides a broad view of the effects of AI integration on musical creativity, cognition, and teaching practice. It thus describes not only the measurable impact of AI in learning environments but also the nuances of human experience that accompany the introduction of technology into creative learning.

3.2. Sample Selection (Students, Educators, Institutions)

The study sample is heterogeneous, comprising individuals from a range of educational backgrounds and musical settings. Secondary school learners, university students, and music conservatoire trainees are selected in order to examine the impact of AI-generated compositions on learning outcomes at different levels of expertise. Educators (music teachers and professors with varying familiarity with AI technologies) also participate, providing further insight into teaching strategies and how they are perceived and adapted. The participating institutions include both traditional music courses and technology-oriented units, so that the perspective is not biased toward either. The sampling technique is purposive and stratified: participants are chosen based on their involvement in music-making or education and their willingness to use AI tools such as AIVA, Amper Music, and MuseNet. The variety of participants allows a multidimensional view of how AI is reorganizing the dynamics of teaching and learning in various environments. Ethical requirements such as informed consent and data confidentiality are strictly observed. The sample size is chosen to reach data saturation, ensuring that both the quantitative data and the qualitative information adequately represent the population while upholding research integrity and academic rigor.
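The purposive, stratified selection described here can be illustrated with a minimal Python sketch. It is not the authors' actual procedure: the participant records, the `uses_ai_tool` flag, the `level` field, and the quota of two per stratum are all hypothetical values introduced only for illustration.

```python
import random
from collections import defaultdict


def stratified_sample(participants, stratum_key, per_stratum, seed=0):
    """Draw up to `per_stratum` participants at random from each stratum."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = defaultdict(list)
    for person in participants:
        strata[person[stratum_key]].append(person)
    sample = []
    for name in sorted(strata):  # stable stratum order
        group = strata[name]
        k = min(per_stratum, len(group))  # small strata contribute everyone
        sample.extend(rng.sample(group, k))
    return sample


# Hypothetical participant pool (assumption, not study data).
pool = [
    {"id": 1, "level": "secondary", "uses_ai_tool": True},
    {"id": 2, "level": "secondary", "uses_ai_tool": True},
    {"id": 3, "level": "secondary", "uses_ai_tool": False},
    {"id": 4, "level": "university", "uses_ai_tool": True},
    {"id": 5, "level": "university", "uses_ai_tool": True},
    {"id": 6, "level": "university", "uses_ai_tool": True},
    {"id": 7, "level": "conservatoire", "uses_ai_tool": True},
    {"id": 8, "level": "conservatoire", "uses_ai_tool": False},
]

# Purposive step: keep only people who are willing to use an AI tool.
eligible = [p for p in pool if p["uses_ai_tool"]]

# Stratified step: sample within each educational level.
chosen = stratified_sample(eligible, "level", per_stratum=2)
print(sorted(p["id"] for p in chosen))
```

Filtering before stratifying keeps the two criteria separate, so the eligibility rule or the per-stratum quota can be changed independently.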

3.3. Data Collection Tools

Several data collection instruments are used to gather both quantitative and subjective data. The quantitative research is based on surveys distributed to students and educators, which evaluate their familiarity with, interest in, and perceived benefits of AI-generated compositions. The surveys combine Likert scales with open-ended feedback to balance numerical and qualitative data. Interviews supply richer qualitative data, focusing on participants' experiences, emotional reactions, and creative output when working with AI tools. Semi-structured interviews allow the conversation to remain flexible while keeping the main research themes consistent. The experiments are carried out in classrooms, where participants engage directly with AI composition platforms by creating, analyzing, and performing AI-assisted works. Together, these instruments show how a technology-supported learning environment can be assessed empirically without sacrificing the creative and interpretive spirit of musical pedagogy.

3.4. Data Analysis Techniques

The data collected are analyzed both quantitatively and qualitatively to obtain a full interpretation. Descriptive and inferential statistical analysis is applied to the survey data and experimental results. Trends in student engagement, level of creativity, and learning results are established using mean scores, standard deviations, and correlation tests. The numerical data may be processed with software such as SPSS or Excel. For the qualitative data, interview transcripts and observation notes are coded and categorized to reveal general patterns, sentiments, and conceptual relationships. Thematic analysis and content analysis are among the techniques applied to interpret the contextual meaning of participants' responses. Qualitative and quantitative results are cross-validated to support the validity of the findings, and triangulation improves the consistency of the interpretations. The advantage of this two-layered analytical framework is that it is both statistically grounded and insightful: the research can demonstrate the impact of AI-generated music on education and also explain why it influences creativity and engagement in this way.
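As a rough illustration of the descriptive statistics and correlation tests mentioned here, the following Python sketch computes a mean, a sample standard deviation, and a Pearson correlation coefficient. The Likert responses and the variable names `engagement` and `creativity` are hypothetical; the study itself reports using SPSS or Excel, so this is an equivalent sketch rather than the authors' workflow.

```python
from math import sqrt
from statistics import mean, stdev


def describe(scores):
    """Return sample size, mean, and sample standard deviation."""
    return {"n": len(scores), "mean": mean(scores), "sd": stdev(scores)}


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (sx * sy)


# Hypothetical 5-point Likert responses from six students (not study data).
engagement = [3, 4, 4, 5, 2, 5]
creativity = [2, 4, 3, 5, 2, 4]

print(describe(engagement))
print(describe(creativity))
print(round(pearson_r(engagement, creativity), 3))
```

A coefficient near 1 would suggest that students who report higher engagement also report higher creativity. With ordinal Likert data, a rank-based measure such as Spearman's correlation is a common alternative.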

4. Role of AI-Generated Compositions in Music Education

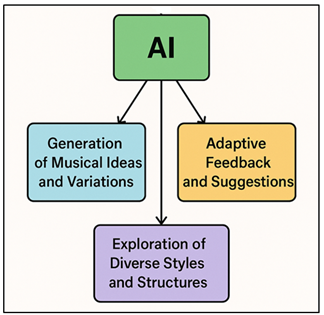

4.1. Enhancing creativity and improvisation among students

AI-generated compositions are central to encouraging innovation and improvisation because they offer students a wide range of musical opportunities together with corrective feedback. In contrast to classical approaches built on theoretical instruction or supervised practice under a tutor, AI tools provide an open, experimental environment in which a learner can test a practically unlimited number of musical combinations. Figure 2 illustrates the mechanisms by which AI increases student creativity and musical improvisation. Systems such as MuseNet and AIVA can compose music in various genres and moods, so students can examine a variety of harmonic patterns, rhythms, and melodies.

Figure 2 Mechanism of AI Support in Developing Student Creativity and Musical Improvisation

Interaction with AI outputs stimulates students to experiment: they manipulate generated music, mix styles, or add their own interpretation, thereby establishing an independent creative voice. Furthermore, AI can propose alternative chords, rhythmic changes, or melodic shapes that challenge students to be creative and to innovate. Improvisation, which is perceived as a complex skill, becomes easier to approach with AI-generated accompaniments that adjust to the tempo and style of the learner. This promotes immediate creative interaction between machine and human in the form of an energetic musical collaboration.

4.2. Supporting Personalized Learning Experiences

The incorporation of AI in music education has a great impact on the personalization of learning to suit learners' varied abilities, preferences, and learning speeds. Conventional education is commonly based on standardized approaches that might not suit individual innovation or the development of talent. AI systems, in turn, assess each student's musical style, performance level, and theoretical knowledge to provide personalized feedback and individualized learning tracks. For example, AI composition programs are able to create compositions that suit the level of the student, simplifying the rhythms for a beginner or making them more complex for experienced learners. This adaptability keeps learning both challenging and accessible. Moreover, AI platforms can monitor progress, identifying strengths and possible areas for improvement and helping educators design more efficient interventions. Individualized suggestions, such as the choice of genres, tempo, or even harmonic variations, allow students to work with music that suits them and that broadens their creative boundaries over time. AI can also support self-paced learning, which gives students the opportunity to experiment and write without the input of an instructor. Such independence builds confidence and sustains interest. Finally, personalization with the help of AI reinvents music education as a personal process in which technical skill and creative identity are cultivated together. By incorporating adaptive algorithms into curricula, teachers will be able to turn classrooms into inclusive spaces where the artistic development of each learner is individually fostered and honored.
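The idea of matching rhythmic complexity to a learner's level can be sketched in a few lines of Python. This is a toy illustration only and does not describe how AIVA, Amper Music, or MuseNet work internally: the representation of a rhythm as a list of note durations in beats, the level names, and the thresholds in `LEVEL_MIN_DURATION` are all assumptions made for the example.

```python
# Hypothetical mapping from learner level to the shortest note value kept
# (in beats). These thresholds are illustrative assumptions.
LEVEL_MIN_DURATION = {"beginner": 1.0, "intermediate": 0.5, "advanced": 0.0}


def simplify_rhythm(durations, min_duration):
    """Merge notes shorter than `min_duration` into a neighbouring note.

    The total length of the phrase is preserved, so the simplified rhythm
    still fits the same number of beats.
    """
    simplified = []
    carry = 0.0  # short notes at the very start are pushed onto the next note
    for d in durations:
        d += carry
        carry = 0.0
        if d < min_duration:
            if simplified:
                simplified[-1] += d  # lengthen the previous note
            else:
                carry = d
        else:
            simplified.append(d)
    if carry:  # every note was shorter than the threshold
        simplified.append(carry)
    return simplified


def rhythm_for_level(durations, level):
    """Return a version of the rhythm suited to the given learner level."""
    return simplify_rhythm(durations, LEVEL_MIN_DURATION[level])


# One 4-beat bar: quarter, two sixteenths, eighth, two quarters.
phrase = [1.0, 0.25, 0.25, 0.5, 1.0, 1.0]
for level in ("beginner", "intermediate", "advanced"):
    print(level, rhythm_for_level(phrase, level))
```

Merging short notes into their neighbours, rather than deleting them, keeps the bar length intact, so a simplified part could still be played against the original accompaniment.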

4.3. Facilitating Composition and Analysis Skills

AI-generated compositions are effective pedagogical instruments for developing composition and music analysis skills. By introducing students to algorithmically generated pieces, teachers are able to demonstrate the ideas of harmony, melody, rhythm, and structure in real time, so that abstract theory becomes interactive. Tools such as Amper Music and MuseNet enable learners to break down and manipulate AI-generated compositions and to see the effect of algorithmic changes on the musical result. AI also helps to speed up iterative learning, as the student is able to write, analyze, and revise the work in one virtual location and obtain immediate feedback on structural or harmonic consistency. By integrating analytical accuracy with inventive exploration, AI turns music composition into a dynamic process of creation, contemplation, and refinement. This kind of integration produces not only highly skilled composers but also critical thinkers and musicians who are able to interpret, evaluate, and innovate within both human musical structures and algorithmic models of music creation.

5. Challenges and Concerns

5.1. Ethical and authorship issues

Among the greatest issues raised by AI-generated compositions in music education are those of ethics and authorship. Establishing ownership of AI-assisted works is a difficult legal and philosophical question: who is the composer of the piece, the student, the teacher, or the algorithm? Because AI systems such as AIVA and MuseNet create content by relying on enormous databases of existing music, questions of originality, copyright violation, and the authenticity of creative works arise. These systems tend to be trained through the analysis of copyrighted material, which makes the line between inspiration and imitation thin. Educationally, students need to be mentored to understand the ethical considerations of using AI-generated music and how to distinguish between creation and curation. Academic integrity is also endangered when a student presents AI-produced work as his or her own without acknowledging the role of the tool. Institutions would therefore need to formulate explicit attribution, ethics, and transparency policies for AI-aided creation. A further issue is cultural bias: AI tools trained on databases of Western music might marginalize non-Western traditions, which in turn would reinforce cultural homogenization.

5.2. Potential Dependency on Technology

Although AI improves learning, it carries the risk of technological dependency among students and teachers. Because AI can simplify such intricate musical processes as harmonization, orchestration, or even rhythm writing, students may gradually rely on algorithms rather than learn basic compositional or analytical skills. Over-reliance can suppress creativity, reducing learners to passive consumers of machine-generated content rather than creators. This quandary mirrors a larger problem in online education, in which convenience is likely to dominate over critical discourse. Also, the seemingly flawless output of AI could set unrealistic artistic expectations that discourage experimentation and leave no room for human error, which is essential in cultivating creative skills. Educators may also diminish their own role and autonomy if they come to depend on AI to plan or assess lessons. The threat is not limited to the loss of skills: technology failures, data-security problems, or software bias could also interrupt the learning process. There is therefore a strong need for balanced programs that integrate AI as a secondary aid to human teaching and imagination rather than as an alternative.

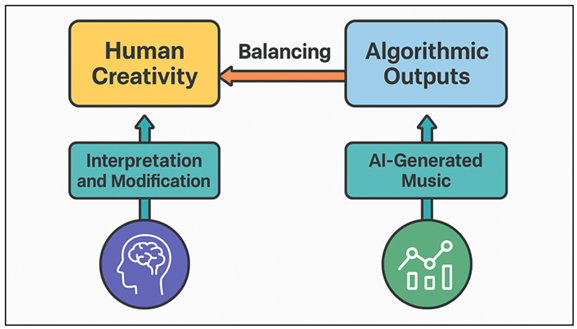

5.3. Striking a Balance between the Human Creativity and the Algorithms

The balance between human creativity and algorithmic generation is one of the greatest challenges in modern music education. AI systems can generate music of technical depth, yet they lack the emotional instinct, situational sensitivity, and expressiveness that are central to human artistic expression. Unless the role of AI is framed thoughtfully, it may overshadow personal expression and lead students to adopt algorithmic aesthetics uncritically. Teachers should therefore create a setting in which AI is seen as a partner and not a replacement. Educational institutions ought to encourage students to treat and transform AI-generated work as a creative stimulus rather than as a finished product. Such an approach turns AI into a mirror: it encourages learners to ask what makes human music unique in an era of machine creativity and how to contextualize it. This balance also helps preserve emotional authenticity and cultural diversity, which algorithms are not able to recreate.

Figure 3 Model of Balancing Human Artistic Input with Algorithmic Music Generation

Teachers are extremely important in positioning AI as an extension of, rather than a substitute for, human potential. Figure 3 presents a model of the balance between human input and algorithmic music generation. Institutions may foster critical awareness as well as technical competence by including philosophical discussions of creativity, emotion, and authorship in music training programs.

6. Case Studies and Practical Applications

6.1. Examples of AI tools used in classrooms

Over the past few years, a number of AI-based platforms have been introduced into music classrooms and have reshaped the way students compose, analyze, and perceive music. Amper Music, in particular, offers a simple interface with which a learner can create a custom soundtrack by choosing genre, mood, and tempo. It enables students to hear immediately how changes to musical parameters affect the overall feel of a composition, supporting theoretical understanding through practical discovery. Another popular tool used to teach composition and orchestration is AIVA (Artificial Intelligence Virtual Artist). Its capacity to produce symphonic or film-like works introduces students to complex harmonic techniques and prompts them to analyze the logic of AI compositions. MuseNet, created by OpenAI, extends this practice by producing multi-instrumental music in many genres, such as classical, jazz, and pop, which allows cross-genre analysis and creativity. These tools can be used in classrooms to encourage both individual and group work, with students developing AI-aided pieces and contrasting human and machine creations.

6.2. Analysis of Student Engagement and Learning Outcomes

Case studies indicate that introducing AI-generated composition tools substantially increases student engagement and learning in music education. When interacting with AI systems, learners are more motivated, more curious, and more willing to experiment creatively. Compared with conventional theory-based lessons, AI-enabled systems provide immediate feedback and interaction that make abstract musical ideas concrete and relatable. For example, users of Amper Music or MuseNet report greater confidence when composing because they are able to visualize harmonic progressions, manipulate melodic structures, and review the results immediately. This practical interaction fosters independence and ownership of the creative process. Quantitative results from the classroom trials show positive results in musical analysis, creativity scores, and the learning of harmonic structure. In addition, collaborative AI projects improve peer learning, since students discuss and evaluate the outputs of algorithms and thereby develop critical thinking and aesthetic awareness.

6.3. Educators’ Perspectives and Feedback

Educators' attitudes towards the introduction of AI-generated compositions in music education can be characterized as mostly positive but cautious. Most teachers acknowledge that AI applications such as AIVA and MuseNet can make instruction more effective, since they provide dynamic teaching aids that make otherwise dry theory easier to grasp. With AI-generated examples, teachers can present chord progressions, modulations, and stylistic variations in real time, which was formerly possible only through live demonstration or pre-recorded material. Teachers also report greater classroom participation, including from students who were not initially drawn to music theory or who were intimidated by it. Nonetheless, some teachers are concerned about academic integrity and about ensuring that students remain critical thinkers rather than passive consumers of algorithmic output. Pedagogically, teachers insist on the need to present AI as a helper rather than an authority and to have students review and improve machine-made compositions. In their feedback, teachers emphasize the importance of professional development that equips educators with the technical competencies needed to use AI effectively in their teaching.

7. Conclusion

The introduction of AI-generated music into music education marks a step toward a new phase of creative pedagogy, one that narrows the gap between human creativity and computational intelligence. With tools such as AIVA, Amper Music, and MuseNet, students and teachers gain access to an active learning process that facilitates exploration, innovation, and critical thinking about musical structures. This research also highlights that AI not only enhances creativity and improvisation but also tailors learning experiences and develops compositional and analytical skills. By making music more democratic, AI allows students of different backgrounds to take part in the artistic process regardless of their technical ability or mastery of an instrument. However, the study also raises critical issues such as authorship, originality, and dependence on technology. AI can produce highly developed compositions, yet these lack the emotional depth and contextual sensitivity of human work. It is therefore important that teachers are able to train learners to use AI as a collaborative tool that augments the human creative process rather than replaces it. Such a balance will ensure that technological progress does not constrain the advancement of art.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Afchar, D., Melchiorre, A., Schedl, M., Hennequin, R., Epure, E., and Moussallam, M. (2022). Explainability in Music Recommender Systems. AI Magazine, 43(2), 190–208. https://doi.org/10.1002/aaai.12056

Briot, J.-P., and Pachet, F. (2020). Music Generation by Deep Learning: Challenges and Directions. Neural Computing and Applications, 32(4), 981–993. https://doi.org/10.1007/s00521-018-3813-6

Briot, J.-P., Hadjeres, G., and Pachet, F.-D. (2019). Deep Learning Techniques for Music Generation: A Survey (arXiv:1709.01620). arXiv.

Civit, M., Civit-Masot, J., Cuadrado, F., and Escalona, M. J. (2022). A Systematic Review of Artificial Intelligence-Based Music Generation: Scope, Applications, and Future Trends. Expert Systems with Applications, 209, Article 118190. https://doi.org/10.1016/j.eswa.2022.118190

Guo, Z., Dimos, M., and Dorien, H. (2021). Hierarchical Recurrent Neural Networks for Conditional Melody Generation with Long-Term Structure (arXiv:2102.09794). arXiv.

Hernandez-Olivan, C., and Beltran, J. R. (2021). Music Composition with Deep Learning: A Review. Springer.

Herremans, D., Chuan, C.-H., and Chew, E. (2017). A Functional Taxonomy of Music Generation Systems. ACM Computing Surveys, 50(5), Article 69. https://doi.org/10.1145/3108242

Ji, S., Yang, X., and Luo, J. (2023). A Survey on Deep Learning for Symbolic Music Generation: Representations, Algorithms, Evaluations, and Challenges. ACM Computing Surveys, 56(1), Article 39. https://doi.org/10.1145/3597493

Ma, Y., Øland, A., Ragni, A., Sette, B. M. D., Saitis, C., Donahue, C., Lin, C., Plachouras, C., Benetos, E., Shatri, E., et al. (2024). Foundation Models for Music: A Survey (arXiv:2408.14340). arXiv.

Messingschlager, T. V., and Appel, M. (2023). Mind Ascribed to AI and the Appreciation of Ai-Generated Art. New Media and Society, 27(6), 1673–1692. https://doi.org/10.1177/14614448231200248

Wen, Y.-W., and Ting, C.-K. (2023). Recent Advances of Computational Intelligence Techniques for Composing Music. IEEE Transactions on Emerging Topics in Computational Intelligence, 7(3), 578–597. https://doi.org/10.1109/TETCI.2022.3221126

Williams, D., Hodge, V. J., and Wu, C. Y. (2020). On the Use of AI for Generation of Functional Music to Improve Mental Health. Frontiers in Artificial Intelligence, 3, Article 497864. https://doi.org/10.3389/frai.2020.497864

Wu, J., Hu, C., Wang, Y., Hu, X., and Zhu, J. (2020). A Hierarchical Recurrent Neural Network for Symbolic Melody Generation. IEEE Transactions on Cybernetics, 50(6), 2749–2757. https://doi.org/10.1109/TCYB.2019.2953194

Yu, Y., Zhang, Z., Duan, W., Srivastava, A., Shah, R., and Ren, Y. (2023). Conditional Hybrid GAN for Melody Generation from Lyrics. Neural Computing and Applications, 35(5), 3191–3202. https://doi.org/10.1007/s00521-022-07863-5

Zhang, Z., Yu, Y., and Takasu, A. (2023). Controllable Lyrics-To-Melody Generation. Neural Computing and Applications, 35(27), 19805–19819. https://doi.org/10.1007/s00521-023-08728-1

Zhu, Y., Baca, J., Rekabdar, B., and Rawassizadeh, R. (2023). A Survey of AI Music Generation Tools and Models (arXiv:2308.12982). arXiv.

This work is licensed under a Creative Commons Attribution 4.0 International License.

© ShodhKosh 2024. All Rights Reserved.