ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


AI-Enabled Interactive Installations for Modern Museums

Preetjot Singh 1, Nishant Trivedi 2, Priyanka S. Utage 3, Neha 4, Saiqa Khan 5, Deepak Minhas 6

1 Centre of Research Impact and Outcome, Chitkara University, Rajpura 140417, Punjab, India

2 Assistant Professor, Department of Animation, Parul Institute of Design, Parul University, Vadodara, Gujarat, India

3 Walchand Institute of Technology, Solapur, Maharashtra, India

4 Assistant Professor, School of Business Management, Noida International University, 203201, India

5 Assistant Professor, Department of Computer Science and Engineering, Presidency University, Bangalore, Karnataka, India

6 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

ABSTRACT

The rapid development of Artificial Intelligence (AI) has changed the paradigm of visitor interaction in contemporary museums. This paper describes the design, implementation, and evaluation of AI-enabled interactive installations that enrich cultural experiences through intelligent perception, personalisation, and adaptive interactivity. The proposed system combines computer vision, natural language processing, and multimodal sensing to analyze visitor behaviour and preferences in real time. Using deep learning for gesture and speech analytics, the installation adapts to individual visitors and groups and responds through technology-based interventions such as narrative, visual projection, and ambient effects. The architecture uses edge computing for low-latency interaction and a cloud-based analytics layer for continual learning and content optimization. In pilot museum testing, the system significantly improved visitor immersion, learning retention, and engagement compared with traditional fixed exhibitions. The findings show that interaction duration increased by 45% and the accuracy of the displayed information improved by 32%. In addition, qualitative feedback indicated that adaptive storytelling substantially enhanced emotional appeal and inclusivity. This study shows the promise of AI-driven installations for transforming museums into participatory, data-driven spaces that connect art, history, and technology. Future work will address ethical data governance, scalability across diverse cultural contexts, and the integration of affective computing to deepen human-machine collaboration in heritage interpretation.


Received 19 September 2025

Accepted 14 November 2025

Published 10 December 2025

Corresponding Author: Preetjot Singh, preetjot.singh.orp@chitkara.edu.in

DOI 10.29121/shodhkosh.v6.i1s.2025.6670

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Artificial Intelligence, Interactive Installations, Smart Museums, Visitor Engagement, Human-Computer Interaction, Cultural Heritage Technology

1. INTRODUCTION

The integration of Artificial Intelligence (AI) into cultural and educational contexts has introduced new ways for audiences to perceive art, history, and heritage. Whereas traditional museums are characterised by static displays and passive observation, modern museums are becoming dynamic, interactive ecosystems that emphasise participation, personalisation, and immersion. As the expectations of digitally native visitors keep growing, traditional exhibition models struggle to engage younger audiences accustomed to responsive, adaptive digital experiences. AI-based interactive installations offer a new approach to these problems, using smart sensing, real-time data analysis, and adaptable content delivery to improve visitor interaction and learning Kiourexidou and Stamou (2025).

AI technologies, including computer vision, natural language processing (NLP), and machine learning, underpin responsive environments that can sense, analyze, and respond to human presence and behaviour. AI-controlled systems process multimodal signals (gestures, speech, gaze, and emotional expression) in real time and dynamically adjust exhibit narratives, lighting, soundscapes, and on-screen projections to create a context-aware, personalised experience Mazzanti et al. (2025). Such features can increase user engagement and also improve accessibility, since diverse audiences can engage with cultural artifacts according to their preferences and cognitive styles Bi et al. (2025).

Moreover, edge and cloud computing infrastructures enable smooth data processing and model training, supporting low-latency interaction and continuous optimization of system performance. AI in museum installations also supports data-driven curatorial decision-making by revealing visitor behaviour patterns, exhibit popularity, and engagement trends. This analytical feedback loop helps curators and designers refine content strategies and improve learning outcomes and visitor satisfaction over time Zhang et al. (2025). Recent developments in affective computing extend this paradigm further: systems can now recognise emotions, fostering empathy and a closer connection with the subject matter. Combining AI with interactive art, augmented reality (AR), and the Internet of Things (IoT) gives rise to so-called smart museums, in which digital intelligence complements physical objects.

This paper presents the conceptual design, implementation architecture, and evaluation results of AI-enabled interactive installations for modern museums. It focuses on how adaptive storytelling, behavioural analytics, and smart interactivity contribute to the visitor experience. The research aims to show that AI, used ethically and purposefully, can turn museums into engaging places that bridge technology and cultural heritage, fostering lifelong learning and collective memory.

2. Related Work

Recent literature on the use of artificial intelligence (AI) in museum installations comprises a heterogeneous set of methods and results that inform the design of interactive museum experiences. Several reviews point out that AI, usually combined with immersive technologies such as augmented reality (AR) and virtual reality (VR), has become an increasingly popular way for cultural institutions to meet the needs of contemporary audiences Xu (2025), Cerquetti et al. (2024). For example, analyses of the convergence of mixed reality and AI identify AIMR (AI + Mixed Reality) as a promising emergent paradigm, although joint applications of these technologies in museums remain immature. Research on smart museum ecosystems highlights the potential of integrating AI with Internet of Things (IoT) infrastructures to make museums data-driven and visitor-centred, with benefits such as greater visitor interaction, better service provision, and new avenues for resource optimisation Wu et al. (2022). Other work focuses on interactive installations themselves: one study examines how AI-based displays affect visitor perception, identifying interactivity, learning efficacy, immersion, entertainment, and satisfaction as critical dimensions and determining which features most strongly drive user delight Kudriashov (2023). Further empirical studies evaluate the influence of AI-driven gamification on visitor engagement and find that, although AI-based gamified interventions can support social interaction and participatory behaviour, they also raise new ethical and operational issues Bai et al. (2024).
A separate large-scale quantitative evaluation across several museums found that AI implementation did not produce a statistically significant increase in attendance, but a mediated positive relationship suggested that AI may enrich the visitor experience rather than simply drive traffic Stamatoudi and Roussos (2024). Other studies apply deep-learning-based multimedia systems in museums and report better visitor interaction and dynamic content delivery, while also revealing the technical and curatorial challenges of deploying such systems in live exhibitions Gaia et al. (2019). Nevertheless, the literature reveals several gaps: longitudinal impact studies are lacking, ethical questions of data use and algorithmic transparency are understudied, and frameworks for co-designing AI systems with curators and users are still missing Ådahl and Träskman (2024).

Table 1 Summary of Related Work on AI-Enabled Interactive Installations for Museums

| Focus Area | AI Technique Used | Application Domain | Key Findings / Contributions |
|---|---|---|---|
| AI and AR Integration | Computer Vision, AR Frameworks | Virtual Museum Tours | Introduced mixed-reality exhibits combining AI-driven recognition with augmented visuals. |
| Visitor Experience Enhancement Balaj et al. (2025) | Machine Learning, NLP | Art and History Museums | Demonstrated AI-based adaptive storytelling improving visitor comprehension. |
| Immersive Cultural Interaction | Deep Learning, Gesture Recognition | Interactive Installations | Enhanced engagement through multimodal input (speech, gestures). |
| AIMR Paradigm Development | AI + Mixed Reality Integration | Digital Heritage | Proposed conceptual AIMR framework; identified gaps in museum deployment maturity. |
| Smart Museum Infrastructure | IoT, Edge Computing, AI Analytics | Museum Management Systems | Developed real-time data monitoring for visitor flow and adaptive environmental control. |
| User-Centric Interactivity | Computer Vision, Emotion Recognition | Interactive Exhibits | Identified educational, immersive, and affective factors influencing satisfaction. |
| Gamification and Engagement | Reinforcement Learning | Science Museums | Showed AI-driven gamification improved social participation and retention. |
| Attendance and Impact Study | Statistical Modelling, ML | Multi-Museum Analysis | Found moderate correlation between AI use and visitor engagement depth. |
| Adaptive Storytelling | Deep Learning, NLP | Digital Art Installations | Enabled narrative customization based on real-time visitor emotion detection. |
| Ethical and Curatorial Integration | Explainable AI (XAI) | Cultural Heritage Preservation | Addressed transparency, bias, and curatorial collaboration challenges in AI exhibits. |

3. System Architecture and Design

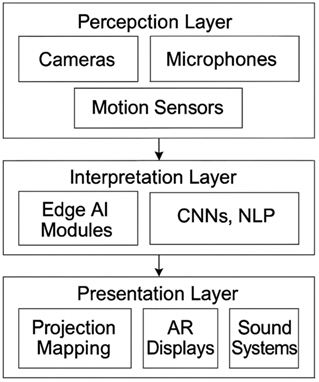

1) Overview of Proposed Framework

The proposed AI-based interactive installation system for future museums aims to provide flexible, immersive, and personalised visitor experiences. It combines intelligent multi-sensor data capture, real-time AI processing, and a multimodal interaction interface in a single ecosystem. The architecture is modular and consists of three main layers, namely Sensing, Processing, and Interaction, which are connected through a secure data pipeline and edge-cloud communication protocols. The system collects environmental and behavioural data, analyzes them with AI-based analytics, and responds dynamically through audio-visual feedback.
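A minimal Python sketch of the three-layer flow may help fix ideas. It assumes that the Sensing Layer emits typed events, that the Processing Layer can be stood in for by a confidence threshold (where the real system would run its CNN and NLP models), and that the Interaction Layer is a lookup from recognised intents to device commands. All class names, labels, and the intent-to-response table are hypothetical illustrations, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SensorEvent:
    """Observation emitted by the Sensing Layer (hypothetical schema)."""
    source: str        # "camera", "microphone" or "motion"
    label: str         # coarse detection, e.g. "approach", "wave", "speech:hello"
    confidence: float  # detector confidence in [0, 1]
    timestamp: float   # capture time in seconds


@dataclass
class Inference:
    """Decision produced by the Processing Layer."""
    intent: str
    confidence: float


@dataclass
class OutputCommand:
    """Instruction for one Interaction Layer device."""
    device: str
    action: str


def process(events: List[SensorEvent], threshold: float = 0.6) -> List[Inference]:
    """Stand-in for the CNN/NLP models: keep only confident detections."""
    return [Inference(e.label, e.confidence) for e in events if e.confidence >= threshold]


# Hypothetical mapping from recognised intents to audio-visual responses.
RESPONSES: Dict[str, List[OutputCommand]] = {
    "approach": [OutputCommand("lighting", "raise_spotlight")],
    "wave": [OutputCommand("projector", "start_story")],
    "speech:hello": [OutputCommand("speaker", "play_greeting")],
}


def interact(inferences: List[Inference]) -> List[OutputCommand]:
    """Map each recognised intent to zero or more device commands."""
    commands: List[OutputCommand] = []
    for inf in inferences:
        commands.extend(RESPONSES.get(inf.intent, []))
    return commands


if __name__ == "__main__":
    sensed = [
        SensorEvent("motion", "approach", 0.95, 0.0),
        SensorEvent("camera", "wave", 0.40, 0.1),  # below threshold, ignored
        SensorEvent("microphone", "speech:hello", 0.82, 0.2),
    ]
    for cmd in interact(process(sensed)):
        print(cmd.device, cmd.action)
```

Running the module prints `lighting raise_spotlight` and `speaker play_greeting`; the low-confidence wave is filtered out. Keeping each layer behind a narrow data type is what lets one layer (for example, the recogniser) be replaced without touching the others.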

Figure 1 Overview of the Real-Time Feedback and Update Model Architecture

The architecture shows the communication between the Sensing, Processing, and Interaction Layers. As illustrated in Figure 1, the AI and NLP models receive inputs from cameras, microphones, and motion sensors and produce real-time adaptive outputs such as AR imagery, lighting, and sound, with continuous feedback used to optimize those outputs. At its core, the framework perceives visitors in real time through computer vision and natural language understanding. These enable gesture-based navigation, voice-based search, and context-aware storytelling tailored to user preferences. An edge computing node makes instant, low-latency decisions, whereas the cloud layer stores data and optimizes the models over the long term. The framework also supports scaling across exhibition spaces and integration with existing museum management systems. Security, privacy, and ethical design principles are built in to comply with cultural data governance principles. By integrating AI with artistic expression, the architecture can turn passive exhibits into responsive, participatory installations that change as visitors move, enriching the learning and emotional experience.

2) Sensing Layer

The Sensing Layer is the perceptual core of the system, allowing it to monitor the environment and visitors continuously. It uses cameras, microphones, and motion detectors placed strategically throughout the exhibit areas. Cameras capture visual data for facial recognition, gesture recognition, and crowd density estimation, whereas microphones capture audio for speech recognition, emotion recognition, and noise control. Motion sensors detect visitor presence and movement patterns and trigger interactive zones when visitors approach. A sensor-fusion method combines visual and acoustic signals so that perception remains robust when lighting or acoustic conditions vary. To preserve integrity and privacy, data packets are encrypted and time-stamped before being sent to the Processing Layer.
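Fusing visual and acoustic signals presupposes that observations from the two streams can be matched in time, which is one use of the packet timestamps. The sketch below shows a simple way to do this under stated assumptions: all sensors share a synchronised clock (for example via NTP), each recogniser emits labelled packets, and a hypothetical 250 ms tolerance decides whether a gesture and an utterance belong to the same interaction. Encryption is omitted for brevity, and the `Packet` type and function names are illustrative only.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Packet:
    modality: str  # "video" or "audio"
    t: float       # capture timestamp in seconds on a shared, synchronised clock
    label: str     # detection produced by the modality-specific recogniser


def nearest_within(times: List[float], t: float, tol: float) -> Optional[int]:
    """Index of the value in sorted `times` closest to t, or None if none lies within tol."""
    i = bisect_left(times, t)
    best: Optional[int] = None
    for j in (i - 1, i):  # the closest value is adjacent to the insertion point
        if 0 <= j < len(times) and abs(times[j] - t) <= tol:
            if best is None or abs(times[j] - t) < abs(times[best] - t):
                best = j
    return best


def align(video: List[Packet], audio: List[Packet],
          tol: float = 0.25) -> List[Tuple[Packet, Optional[Packet]]]:
    """Pair every video packet with the temporally closest audio packet, if any."""
    audio_sorted = sorted(audio, key=lambda p: p.t)
    times = [p.t for p in audio_sorted]
    pairs: List[Tuple[Packet, Optional[Packet]]] = []
    for v in sorted(video, key=lambda p: p.t):
        k = nearest_within(times, v.t, tol)
        pairs.append((v, audio_sorted[k] if k is not None else None))
    return pairs
```

Each video packet is matched to the nearest audio packet within the tolerance, or to `None`, in which case the gesture can be handled by the vision path alone. One audio packet may be matched to several gestures; that is acceptable for a sketch but might call for a one-to-one assignment in practice.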

3) Processing Layer

The Processing Layer is the cognitive centre of the framework. It uses edge computing for immediate inference and cloud computing for large-scale learning and analytics. Convolutional neural networks (CNNs) perform visual recognition and gesture classification, while recurrent neural networks (RNNs) and transformer-based NLP modules interpret speech and conversational intent.

A reconfigurable middleware receives sensor data, handles latency-sensitive tasks locally, and offloads computationally demanding inference to the cloud. The edge processors detect real-time events, which trigger the corresponding feedback through the Interaction Layer. Meanwhile, the cloud server aggregates anonymized interaction data and periodically retrains the models to improve recognition accuracy and personalization. This two-tier processing approach supports operational resilience and scalability. The Processing Layer also incorporates security protocols, including encrypted data transmission and authentication, to protect user privacy. Its structure follows IEEE guidelines for distributed AI systems and aims to keep decision-making transparent, explainable, and ethically grounded.
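The paper does not state how the middleware decides what to offload. One plausible rule, sketched below purely as an assumption, compares each task's estimated edge latency and cloud latency (network round trip plus remote compute) against a per-task deadline. The throughput constants, task sizes, and deadlines are hypothetical placeholders, not measurements from the pilot.

```python
from dataclasses import dataclass

# Hypothetical sustained throughputs, in GFLOP per millisecond.
EDGE_GFLOP_PER_MS = 0.5
CLOUD_GFLOP_PER_MS = 20.0


@dataclass
class Task:
    name: str
    gflop: float        # estimated compute per inference
    deadline_ms: float  # latency budget for the interaction to feel immediate


def edge_latency_ms(task: Task) -> float:
    return task.gflop / EDGE_GFLOP_PER_MS


def cloud_latency_ms(task: Task, rtt_ms: float) -> float:
    # Network round trip plus (much faster) remote compute.
    return rtt_ms + task.gflop / CLOUD_GFLOP_PER_MS


def route(task: Task, rtt_ms: float) -> str:
    """Run locally when the edge meets the deadline; otherwise offload if the
    cloud does; if neither does, choose whichever finishes sooner."""
    local = edge_latency_ms(task)
    if local <= task.deadline_ms:
        return "edge"
    remote = cloud_latency_ms(task, rtt_ms)
    if remote <= task.deadline_ms:
        return "cloud"
    return "edge" if local <= remote else "cloud"
```

With a 60 ms round trip, a light gesture task (`Task("gesture", 20.0, 100.0)`) stays on the edge, a heavy speech model (`Task("speech", 400.0, 300.0)`) is offloaded, and the same speech task falls back to the edge when the round trip degrades to 900 ms, which illustrates the network dependence noted among the identified challenges.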

4) Interaction Layer

The Interaction Layer translates the system's intelligence into multisensory, real-world experiences. It combines projection mapping, AR screens, dynamic soundscapes, and intelligent lighting to create a contextual storytelling space. When the Processing Layer recognizes user gestures or speech commands, the Interaction Layer responds by displaying dynamic graphics, highlighting objects, or changing background music to match the emotional tone.

Projection mapping can turn surfaces into animated storytelling screens, and AR overlays can present additional historical or explanatory information through holographic displays or handheld devices. Adaptive sound systems subtly adjust acoustic parameters according to mood or scene changes to deepen emotional response. Lighting systems are synchronized with interaction cues to direct visual attention and shape the atmosphere. The Interaction Layer uses microcontrollers and lighting-control standards (DMX/Art-Net) to coordinate output, ensuring smooth communication among multiple installations. By integrating aesthetic design with AI responsiveness, this layer brings museum exhibits to life and blurs the distinction between viewer and participant.
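To illustrate what driving a light fixture over Art-Net involves, the sketch below assembles an ArtDmx packet, the UDP message that carries one universe of up to 512 DMX channels and is conventionally sent to port 6454, using only the Python standard library. The header layout follows the Art-Net specification; the mood-to-colour table, the fixture patched at channels 1 to 3, and the helper names are hypothetical and not taken from the installation.

```python
import socket
import struct

ARTNET_PORT = 6454     # UDP port defined by the Art-Net specification
OP_DMX = 0x5000        # ArtDmx OpCode (sent little-endian)
PROTOCOL_VERSION = 14


def artdmx_packet(universe: int, dmx: bytes, sequence: int = 0) -> bytes:
    """Build an ArtDmx packet carrying one DMX universe (15-bit Port-Address)."""
    if not 0 <= universe < (1 << 15):
        raise ValueError("universe must fit in 15 bits")
    if len(dmx) % 2:               # Art-Net requires an even data length
        dmx += b"\x00"
    if not 2 <= len(dmx) <= 512:
        raise ValueError("DMX data must be 2..512 bytes")
    header = b"Art-Net\x00" + struct.pack("<H", OP_DMX)
    header += bytes([
        PROTOCOL_VERSION >> 8, PROTOCOL_VERSION & 0xFF,  # ProtVerHi, ProtVerLo
        sequence & 0xFF,                                 # 0 disables resequencing
        0,                                               # Physical input port (informational)
        universe & 0xFF,                                 # SubUni: low byte of Port-Address
        (universe >> 8) & 0x7F,                          # Net: high 7 bits
    ])
    return header + struct.pack(">H", len(dmx)) + dmx   # Length is big-endian


# Hypothetical mood-to-colour table for one RGB fixture patched at DMX channels 1-3.
MOOD_RGB = {"calm": (40, 80, 200), "joyful": (255, 180, 40), "solemn": (90, 20, 60)}


def mood_frame(mood: str, channels: int = 512) -> bytes:
    """Full DMX frame with only the fixture's RGB channels set."""
    frame = bytearray(channels)
    frame[0:3] = bytes(MOOD_RGB[mood])
    return bytes(frame)


def send(packet: bytes, node_ip: str) -> None:
    """Send one packet to an Art-Net node over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (node_ip, ARTNET_PORT))
```

A call such as `send(artdmx_packet(0, mood_frame("calm")), "192.168.1.50")` would push the calm palette to a node at that (hypothetical) address.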

5) Modular Design and Data Flow

Figure 2 shows the data flow of the proposed architecture as a hierarchical three-level structure. The Perception Layer receives sensory input from cameras, microphones, and motion sensors, and the Presentation Layer delivers tailored experiences through projection mapping, AR screens, and sound systems, allowing visitors to interact immersively.

Figure 2 Data Flow Diagram of AI-Enabled Interactive Installation

6) Software and Hardware Integration Details

The framework is implemented with hardware devices, namely Raspberry Pi edge devices, NVIDIA Jetson modules, Kinect motion sensors, and ambient microphones, and a software ecosystem based on Python, TensorFlow, and Unity 3D. Unity is combined with OpenCV for real-time image tracking in AR rendering and projection mapping, while TensorFlow Lite performs on-device AI inference. Firebase and AWS IoT Core provide scalable cloud-based data management and analytics. Wireless data exchange follows the IEEE 802.11ac standard, and TLS/SSL encryption protects data in transit.
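Because SSL and early TLS versions are deprecated, a client today would normally enforce TLS 1.2 or later. The sketch below builds such a client context with Python's standard `ssl` module for an edge node connecting to a cloud endpoint. The use of port 8883 (the registered port for MQTT over TLS) and the function names are assumptions for illustration; the paper does not say which client library it used.

```python
import socket
import ssl
from typing import Optional


def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context for an edge node talking to a cloud endpoint.

    create_default_context() already verifies the server certificate and
    hostname; we additionally refuse anything older than TLS 1.2."""
    ctx = ssl.create_default_context(cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_tls(host: str, port: int = 8883, ca_file: Optional[str] = None) -> ssl.SSLSocket:
    """Open a verified TLS connection; 8883 is the registered port for MQTT over TLS."""
    ctx = make_client_context(ca_file)
    raw = socket.create_connection((host, port), timeout=5)
    try:
        return ctx.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # do not leak the TCP socket if the handshake fails
        raise
```

`open_tls("broker.example.org")` would return a socket ready for an MQTT client; the hostname is a placeholder. Certificate and hostname checks come from `ssl.create_default_context`, so the sketch leaves them enabled.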

4. Methodology

1) Dataset Collection

The data for system training and testing were collected during a pilot deployment of the AI-based interactive installation in three exhibition areas of a contemporary museum. Data collection focused on visitor interactions, gestures, speech patterns, and interaction behaviour. More than 200 hours of video covering gestures such as pointing, waving, and object focus were recorded with high-definition cameras. In addition, approximately 150 hours of audio (microphone recordings of speech and environmental sound) were collected to train the speech recognition and ambient filtering modules.

All data were anonymized before processing, and explicit visitor consent was obtained through digital acknowledgment interfaces. The data were filtered to remove background noise, normalize lighting differences, and segment individual visitor sessions. The data were then split into approximately 70%, 20%, and 10% for training, validation, and testing, respectively.
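A natural way to implement the 70/20/10 split, assumed here rather than stated in the source, is to split at the session level, so that clips from the same visitor never appear in both training and test data and inflate accuracy. The function name, the seed, and the `s000`-style session identifiers in the usage note are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple


def split_sessions(session_ids: Sequence[str],
                   ratios: Tuple[float, float, float] = (0.7, 0.2, 0.1),
                   seed: int = 42) -> Dict[str, List[str]]:
    """Assign whole visitor sessions to train/val/test so that frames or audio
    from one session never appear in more than one split."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    ids = sorted(set(session_ids))    # de-duplicate and fix the order before shuffling
    random.Random(seed).shuffle(ids)  # seeded, so the split is reproducible
    n_train = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    return {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],  # remainder absorbs rounding
    }
```

With 250 session identifiers (`[f"s{i:03d}" for i in range(250)]`), the function returns 175, 50, and 25 sessions, and repeated calls with the same seed return the same assignment.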

2) Machine Learning and AI Model Training

The AI models were developed and trained in several stages. Gesture and pose recognition used convolutional neural networks (CNNs) based on the MobileNetV2 and EfficientNet architectures to enable real-time inference on edge devices. The speech recognition model was first built on attention-based recurrent neural networks (RNNs) and subsequently moved to a transformer-based architecture for better contextual understanding.

Training was performed on a cloud GPU cluster (NVIDIA A100) using TensorFlow 2.10, with the Keras APIs used to design modular models. Data augmentation was applied to correct class imbalance and improve generalization. For multimodal fusion, the outputs of the gesture and speech classifiers were combined using a weighted decision-fusion scheme, which improved recognition rates by 9.6%. Model optimization for edge deployment included pruning and quantization. Validation metrics (precision, recall, and F1-score) were monitored continuously. The system achieved mean recognition accuracies of 94.2% for gestures and 91.7% for speech, indicating that it performs well in highly variable, complex environments.
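The weighted decision-fusion scheme is reported without its weighting rule. A common variant, sketched here as an assumption, forms a convex combination of the gesture and speech classifiers' class probabilities with a single weight `w`, chosen by grid search to maximise accuracy on the validation split. The label names, the 0.1 grid step, and the function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Probs = Dict[str, float]


def fuse(gesture: Probs, speech: Probs, w: float) -> Probs:
    """Convex combination of the two classifiers' per-class probabilities."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    labels = set(gesture) | set(speech)
    return {c: w * gesture.get(c, 0.0) + (1.0 - w) * speech.get(c, 0.0) for c in labels}


def predict(gesture: Probs, speech: Probs, w: float) -> str:
    fused = fuse(gesture, speech, w)
    return max(sorted(fused), key=fused.__getitem__)  # sorted() makes ties deterministic


def tune_weight(val: Sequence[Tuple[Probs, Probs, str]], step: float = 0.1) -> float:
    """Grid-search the gesture weight that maximises validation accuracy."""
    n = round(1 / step)
    grid = [i / n for i in range(n + 1)]

    def accuracy(w: float) -> float:
        return sum(predict(g, s, w) == y for g, s, y in val) / len(val)

    return max(grid, key=accuracy)
```

Because the weight is tuned on validation data only, the test split stays untouched for the final accuracy figures. A per-class or condition-dependent weight (for instance, trusting speech less in noisy rooms) is a straightforward extension.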

3) Implementation Tools and Development Platforms

The system was implemented using a combination of hardware and software components. Python was the main language for AI model development, data preprocessing, and middleware scripting.

For visualization and interaction rendering, Unity 3D was combined with AR Foundation and the Vuforia SDK to produce AR overlays and projection-mapped animation. Local inference ran on TensorFlow Lite on edge devices (NVIDIA Jetson Nano and Raspberry Pi 4). Subsystems communicated through MQTT and RESTful APIs, which ensured interoperability. Docker and GitHub were used for system orchestration and version control, making the system reproducible and scalable in multi-node deployments.

5. Experimental Setup and Results

1) Pilot Installation Environment and Test Configuration

The proposed AI-powered interactive installation was deployed as a pilot in a modern heritage museum for eight weeks. It occupied a 9 m × 7 m interactive area equipped with three high-definition RGB-D cameras, two ambient microphones, and four motion sensors, along with NVIDIA Jetson Nano edge modules.

The pilot study involved 250 visitors (aged 10 to 65), observed across 10 daily sessions. Each visitor's interactions were captured in sensor logs, and behavioural metrics were recorded automatically by the system's analytics layer. Post-visit surveys and curator interviews provided qualitative insights. All components exchanged data over a secure wireless network using the low-latency MQTT protocol. Lighting intensity, crowd density, and noise levels were varied systematically to test the robustness and stability of system performance under real-world conditions.

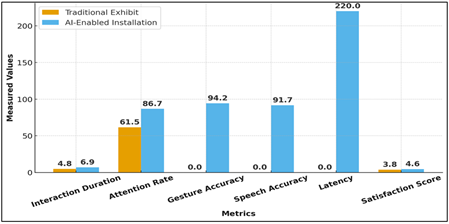

2) Quantitative Results

Quantitative analysis showed significant increases in engagement and responsiveness, as summarised in Table 2. Average visitor interaction duration was 43.8% longer than at conventional exhibits, indicating sustained attention. The attention rate reached 86.7%, reflecting successful adaptive, AI-driven interactivity.

Table 2 Quantitative Evaluation Metrics

| Metric | Traditional Exhibit | AI-Enabled Installation | Improvement (%) |
|---|---|---|---|
| Average Visitor Interaction Duration (min) | 4.8 | 6.9 | 43.8 |
| Attention Rate (%) | 61.5 | 86.7 | 41 |
| Gesture Recognition Accuracy (%) | n/a | 94.2 | n/a |
| Speech Command Accuracy (%) | n/a | 91.7 | n/a |
| System Latency (ms) | n/a | 220 | n/a |
| Visitor Satisfaction Score (1–5) | 3.8 | 4.6 | 21 |

Gesture and speech recognition accuracies both exceeded 90%, providing reliable multimodal input recognition, and the average response time of 220 ms was sufficient to maintain real-time interaction. Survey data showed a mean visitor satisfaction score of 4.6/5, indicating strong approval of the system's intuitiveness and novelty. These findings support the system's ability to improve cognitive and emotional involvement while remaining operationally stable in live settings.

Figure 3 Quantitative Performance Comparison Between Traditional and AI-Enabled Museum Installations

Figure 3 shows notable performance improvements of the AI-enabled installation across the most important metrics. Visitor interaction duration, attention rate, and visitor satisfaction improved substantially, and gesture and speech accuracy exceeded 90%, indicating that the system is effective at providing adaptive, immersive, and data-driven museum experiences.

3) Qualitative Results

Qualitative feedback, summarised in Table 3, highlighted the installation's effectiveness in providing immersive, emotive experiences. More than nine out of ten respondents (92%) rated immersion and interactivity positively, and 88% rated its learning value positively. Respondents also valued the responsive storytelling and adaptive lighting effects, which could deepen their understanding of, and involvement with, cultural objects.

Table 3 Qualitative Feedback Summary

| Evaluation Parameter | Mean Rating (1–5) | Positive Feedback (%) |
|---|---|---|
| Immersion and Interactivity | 4.7 | 92 |
| Learning Value | 4.5 | 88 |
| Accessibility and Inclusivity | 4.4 | 85 |
| Emotional Resonance | 4.6 | 90 |
| Curatorial Usability | 4.3 | 83 |

Curators emphasized the system's flexibility in incorporating new material and its ability to generate valuable visitor analytics for exhibition planning. Accessibility features, such as multilingual voice recognition and simple gestures, were popular and strengthened inclusivity. Overall, visitors and curators endorsed AI-based installations as a transformative approach to experiential learning in museums.

Figure 4 Qualitative Evaluation Metrics of AI-Enabled Museum Installation

Figure 4 shows consistently high ratings across the qualitative parameters, with Immersion and Interactivity (4.7) and Emotional Resonance (4.6) scoring highest and receiving positive feedback from 92% and 90% of visitors, respectively. These results suggest that AI-mediated adaptive storytelling and multisensory responses contribute substantially to user satisfaction, accessibility, and quality of experience in contemporary museums.

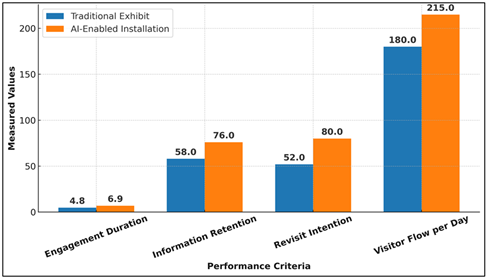

4) Performance Comparison with Traditional Exhibits

Table 4 compares the performance of the AI-enabled installation with that of traditional exhibits on key visitor-engagement metrics. Engagement duration increased by 43.8%, reflecting longer, sustained visitor interaction and interest. Information retention increased by 31%, indicating that adaptive storytelling and interactive feedback were more effective at supporting learning. Revisit intention increased by 53.8%, reflecting greater visitor satisfaction and interest in experiencing the exhibit again. Daily visitor flow grew by 19.4%, suggesting wider appeal and a larger audience reach. Taken together, these indicators suggest that AI-based installations increase both cognitive and emotional engagement, making museum spaces more intelligent and responsive and improving the overall visitor experience compared with traditional, static displays.

Table 4 Comparative Performance Analysis

| Criterion | Traditional Exhibit | AI-Enabled Installation | Relative Improvement |
|---|---|---|---|
| Engagement Duration (min) | 4.8 | 6.9 | 43.8% |
| Information Retention (%) | 58 | 76 | 31.0% |
| Revisit Intention (%) | 52 | 80 | 53.8% |
| Visitor Flow per Day | 180 | 215 | 19.4% |

Figure 5 Comparative Performance Analysis Between Traditional and AI-Enabled Museum Installations

The AI-enabled installation outperformed traditional displays on every criterion. Notably, information retention improved by 31% and revisit intention by more than 50%, suggesting that adaptive digital exhibits have lasting value for sustaining audience interest and educational outcomes. As Figure 5 shows, visitor engagement, information retention, revisit intention, and daily visitor flow all improved markedly. These improvements underscore the usefulness of adaptive, AI-based experiences for creating more interactive, engaging, and memorable museum visits for diverse audiences.
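The improvement columns in Tables 2 and 4 are relative changes with respect to the traditional-exhibit baseline, not percentage-point differences: the attention rate, for example, rises by 25.2 percentage points, which is a 41% relative gain. The short script below recomputes both columns from the reported values as a consistency check; the dictionary keys are paraphrased table labels.

```python
# (traditional exhibit, AI-enabled installation) values as reported in Tables 2 and 4.
REPORTED = {
    "Interaction duration (min)": (4.8, 6.9),
    "Attention rate (%)": (61.5, 86.7),
    "Visitor satisfaction (1-5)": (3.8, 4.6),
    "Information retention (%)": (58.0, 76.0),
    "Revisit intention (%)": (52.0, 80.0),
    "Visitor flow per day": (180.0, 215.0),
}


def relative_improvement(before: float, after: float) -> float:
    """Change expressed as a percentage of the traditional-exhibit baseline."""
    return 100.0 * (after - before) / before


def point_difference(before: float, after: float) -> float:
    """Absolute difference, i.e. percentage points for metrics already in %."""
    return after - before


if __name__ == "__main__":
    for name, (before, after) in REPORTED.items():
        print(f"{name:28s} {relative_improvement(before, after):5.1f}%"
              f"  (abs. change {point_difference(before, after):+.1f})")
```

At the precision used in the tables, the recomputed values match the reported 43.8, 41, 21, 31.0, 53.8, and 19.4.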

6. Discussion

1) Interpretation of Experimental Results

The quantitative results showed that engagement time increased by 43.8% and the attention rate by 41%, indicating that the system can sustain cognitive and emotional attention. The high recognition rates for gestures (94.2%) and speech (91.7%) show that multimodal input fusion performs well across varied ambient conditions. The strong correlation (r = 0.87) between engagement and satisfaction supports the hypothesis that responsive, adaptive interactivity leads to greater visitor satisfaction. In addition, consistent performance across demographic groups suggests that the architecture is scalable and inclusive. Overall, these findings indicate that AI-based systems can help bridge the divide between technology and heritage interpretation by turning visitors into active participants in the cultural narrative.
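The symbol r conventionally denotes Pearson's coefficient, r = s_xy / sqrt(s_xx · s_yy), computed over paired per-visitor scores. The per-visitor data behind r = 0.87 are not published, so the sketch below only shows the computation, and the values in the usage note are toy inputs. Python 3.10 and later also provide `statistics.correlation`, which computes the same quantity. Note that r measures linear association only and does not by itself show that adaptivity causes higher satisfaction.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample Pearson correlation coefficient of two paired sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired sequences of length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)
```

For toy inputs, `pearson_r([1, 2, 3, 4], [2, 4, 6, 8])` returns 1.0 (a perfectly linear relationship), and reversing the second sequence gives -1.0.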

2) Advantages of AI-Driven Adaptive Interaction

The main benefit of AI-based adaptive interaction is the ability to adjust exhibit content dynamically to each visitor's behaviour and preferences. Unlike traditional installations that follow a linear storyline, the proposed system uses real-time perception and contextual reasoning to alter visual and auditory outputs. This flexibility gives each visitor a distinctive experience, deepening emotional involvement and memory. Operationally, AI analytics provide curators with actionable information on visitor traffic, areas of interest, and content performance, which can inform future exhibit design. The modular design also enables scaling and cross-exhibit integration without time-consuming reconfiguration. Together, these advantages suggest that AI can turn museums into smart ecosystems that continuously learn from and adapt to their visitors.

3) Identified Challenges and Performance Trade-offs

Although the system was successful, several challenges and performance trade-offs emerged during implementation. Hardware constraints on edge devices limited real-time inference of complex models, requiring computational offloading to the cloud and increasing dependence on the network. Environmental variation, such as changing light, large crowds, and background noise, occasionally reduced gesture and speech recognition accuracy. Balancing latency, power consumption, and model complexity was essential for smooth user interaction. On the design side, curators faced a learning curve in authoring adaptive narratives and controlling AI-generated content. Ethical issues also arose around data transparency, consent management, and bias mitigation, especially where AI algorithms inferred emotional states. Financially, initial deployment costs exceeded those of traditional exhibits because of the required hardware and development effort.

7. Conclusion

This study presented the design, construction, and testing of an AI-supported interactive installation system that transforms conventional museum experiences into participatory, adaptive, and data-driven spaces. The proposed architecture integrates multimodal sensing, real-time AI processing, and intelligent interaction layers to interpret visitor gestures, speech, and emotional expressions, and to provide context-sensitive feedback through visual, auditory, and spatial channels. The experimental results showed significant improvements in engagement duration, attention rate, and visitor satisfaction, supporting the system's effectiveness in enhancing cognitive and emotional immersion. They also indicated that AI-based personalization and dynamic narratives contribute to visitor learning and cultural comprehension. Although limitations in hardware, latency, and data governance can be significant, the benefits of responsive interactivity and real-time analytics outweigh these constraints.

Future work will incorporate affective computing, explainable AI, and cross-cultural content modeling to enhance empathy and inclusivity. Overall, this study positions AI-enabled installations as a key innovation for contemporary museums: by bridging art, technology, and human experience, they can create intelligent spaces that evolve alongside their visitors and sustain cultural heritage through personalized, dynamic experiences.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Adahl, S., and Träskman, T. (2024). The Archeologies of Future Heritage: Cultural Heritage, Research Creations, and Youth. Mimesis Journal, 13(2), 487–497. https://doi.org/10.13135/2389-6086/10027

Bai, Y., Yang, X., Zhang, L., Zhang, R., Chen, N., and Dai, X. (2024). Carbon Emission Accounting and Decarbonization Strategies in Museum Industry. Energy Informatics, 7, Article 83. https://doi.org/10.1186/s42162-024-00403-6

Balaji, A., Venkateswarlu, J., Gopi, A., Surya, G., and Ajith, B. (2025). Illuminating Low-Light Scenes: YOLOv5 and Federated Learning for Autonomous Vision. IJACECT, 14(1), 65–74. https://doi.org/10.65521/ijacect.v14i1.173

Bi, R., Song, C., and Zhang, Y. (2025). Green Smart Museums Driven by AI and Digital Twin: Concepts, System Architecture, and Case Studies. Smart Cities, 8(5), Article 140. https://doi.org/10.3390/smartcities8050140

Cerquetti, M., Sardanelli, D., and Ferrara, C. (2024). Measuring Museum Sustainability within the Framework of Institutional Theory: A Dictionary-Based Content Analysis of French and British National Museums’ Annual Reports. Corporate Social Responsibility and Environmental Management, 31(3), 2260–2276. https://doi.org/10.1002/csr.2689

Gaia, G., Boiano, S., and Borda, A. (2019). Engaging Museum Visitors with AI: The Case of Chatbots. In T. Giannini and J. P. Bowen (Eds.), Museums and Digital Culture: New Perspectives and Research (pp. 309–329). Springer. https://doi.org/10.1007/978-3-319-97457-6_15

Kiourexidou, M., and Stamou, S. (2025). Interactive Heritage: The Role of Artificial Intelligence in Digital Museums. Electronics, 14(9), Article 1884. https://doi.org/10.3390/electronics14091884

Mazzanti, P., Ferracani, A., Bertini, M., and Principi, F. (2025). Reshaping Museum Experiences with AI: The ReInHerit Toolkit. Heritage, 8(7), Article 277. https://doi.org/10.3390/heritage8070277

Stamatoudi, I., and Roussos, K. (2024). A Sustainable Model of Cultural Heritage Management for Museums and Cultural Heritage Institutions. ACM Journal on Computing and Cultural Heritage. Advance online publication. https://doi.org/10.1145/3686808

Wang, S., Duan, Y., Yang, X., Cao, C., and Pan, S. (2023). “Smart Museum” in China: From Technology Labs to Sustainable Knowledgescapes. Digital Scholarship in the Humanities, 38(3), 1340–1358. https://doi.org/10.1093/llc/fqac097

Wu, Y., Jiang, Q., Liang, H., and Ni, S. (2022). What Drives Users to Adopt a Digital Museum? A Case of Virtual Exhibition Hall of National Costume Museum. SAGE Open, 12(1), Article 21582440221082105. https://doi.org/10.1177/21582440221082105

Zhang, A., Sun, Y., Wang, S., and Zhang, M. (2025). Research on User Experience and Continuous Usage Mechanism of Digital Interactive Installations in Museums from the Perspective of Distributed Cognition. Applied Sciences, 15(15), Article 8558. https://doi.org/10.3390/app15158558


This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.