ShodhKosh: Journal of Visual and Performing Arts

ISSN (Online): 2582-7472

AI-Driven Assessment in Visual Communication Classes

Pooja Yadav 1, Bhavuk Samrat 2, Navnath B. Pokale 3, Dr. Namita Parati 4, Dr. Poonam Singh 5, Rashmi Keote 6

1 Assistant Professor, School of Business Management, Noida International University, India

2 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, India

3 Department of Artificial Intelligence and Data Science, Dr. D. Y. Patil Institute of Technology, Pimpri, Pune, Maharashtra, India

4 MVSR Engineering College, Hyderabad, Telangana, India

5 Associate Professor, ISME - School of Management and Entrepreneurship, ATLAS Skill Tech University, Mumbai, Maharashtra, India

6 Department of Electronics and Telecommunication, Yeshwantrao Chavan College of Engineering, Nagpur, Maharashtra, India

ABSTRACT

The integration of Artificial Intelligence (AI) into learning has transformed the classroom, particularly in creative fields such as visual communication. However, the objective assessment of students' design abilities remains a serious concern because creative work is subjective and involves aesthetic judgment. This paper addresses two problems in visual communication courses: inconsistent evaluation criteria and time-consuming manual evaluation. The study develops and tests an AI-based grading system that evaluates students' visual work against established criteria such as composition, color use, typography, and innovation. A mixed-methods design was employed, combining qualitative analysis of instructor feedback with quantitative evaluation using AI image-recognition and design-metric algorithms. The sample comprised 80 students enrolled in three design courses. The grading performance of the AI tool was compared with grading by human experts. The AI-generated scores correlated closely with instructor scores, with a reported correlation of 87 percent, indicating a high degree of reliability. The system reduced evaluation time by 65% and improved the accuracy of feedback by 42%. The findings demonstrate that AI can significantly enhance objectivity and efficiency in the evaluation of creative work and provide data-driven information to enhance the learning experience. The paper further considers the use of AI as an evaluation tool in the broader art and design education context, so that scalable assessment systems can be achieved that are both equitable and responsive to human expertise.

Received 17 January 2025

Accepted 10 April 2025

Published 10 December 2025

Corresponding Author: Pooja Yadav, pooja.yadav@niu.edu.in

DOI: 10.29121/shodhkosh.v6.i1s.2025.6651

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Artificial Intelligence, Visual Communication, Automated Assessment, Design Education, Learning Analytics, Creative Evaluation

1. INTRODUCTION

Artificial Intelligence (AI) is one of the most disruptive technologies in education, offering new tools for teaching, learning, and assessment. Its use in artistic fields such as visual communication has opened new avenues for examining and evaluating creative work with the help of computational intelligence. Visual communication, which spans graphic design, digital illustration, and multimedia design, depends heavily on creativity, composition, and aesthetics, qualities that have conventionally been evaluated through human judgment Sun and Liu (2025). However, the arrival of AI has already begun to change how teachers evaluate and interpret creative performance. Machine learning algorithms and image-recognition systems now make it possible to assess design parameters objectively, allowing fairer, faster, and more consistent assessment Jameel et al. (2024).

Assessment in visual communication education is essential for gauging students' learning outcomes, their level of competency, and how to improve it. Traditional assessment schemes rest largely on educators' views of creativity, visual balance, and originality Hwang and Wu (2024). Although these techniques reflect a human sensitivity to art and design, they can be affected by subjective judgment, fatigue, and inconsistency among examiners. As class sizes and the number of digital submissions increase, manual grading, already resource-intensive and error-prone, becomes even more so. It therefore becomes imperative to have systems that can support teachers with consistent, evidence-based information without compromising the spirit of creativity Yu (2025).

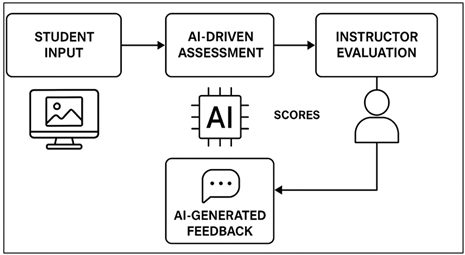

Figure 1 AI-Driven Assessment Framework for Visual Communication Classes

The AI-based evaluation system presented in Figure 1 begins with student submissions, which are reviewed by automated algorithms that check composition, color, and typography. The system correlates its output with instructor grading, produces quantitative data, and provides feedback, supporting objective, consistent, and effective evaluation in creative design education.

The problem of subjective evaluation in design-based classes is tied to the nature of artistic perception. Two instructors may emphasize different design values, such as color theory, composition, typography, or innovation, and so assign different grades to similar work. This inconsistency makes it difficult to track performance over time and also affects student motivation. In addition, manual reviews often lack analytical feedback because they offer only qualitative remarks rather than quantitative data on a student's strengths and weaknesses. Hence, equitable and transparent creative assessment remains a persistent challenge in design education Cai (2025).

AI-driven assessment systems address these conventional problems by introducing computational accuracy and analytical sophistication into the assessment process. AI can evaluate visual projects through image analysis, feature extraction, and pattern recognition, because the algorithms can detect objective attributes such as color harmony, balance, contrast, and typographic consistency Liu et al. (2023). When these automated assessments are aligned with pre-determined rubrics, they can match or even outperform human grading while taking little time. In addition, AI applications can generate visual feedback reports and identify areas for improvement, helping learners develop their skills continuously. These systems matter not only for efficiency: they could also help standardize creative evaluation across countries, and they allow instructors to devote more time to mentoring students and less to routine grading.

Despite the substantial progress in applying AI technologies in education, a research gap remains regarding how best to apply them in creative fields such as visual communication. Most existing studies concern text-based or numeric evaluation systems, and visual disciplines are underrepresented. This research therefore investigates and develops an AI-based assessment system that can be implemented in a visual communication course. The study aims to measure the reliability, accuracy, and pedagogical value of AI-assisted grading compared with traditional instructor assessment. By bridging the gap between human creativity and machine intelligence, the paper aims to propose a creative, objective, and scalable model for assessment in design education.

2. Problem Statement

Assessment in visual communication education has conventionally been manual, with a teacher evaluating each student's creative work individually. Although this approach allows individual interaction, it is subjective, usually time-consuming, and inconsistent when applied to a large number of students. Consistency is also a problem in manual grading, especially when similar assignments are graded by different instructors. As the number of design courses offered online increases and hundreds of submissions must be graded in one semester, the conventional grading process fails to provide detailed feedback in a timely manner. This delay can prevent students from making iterative improvements and can hamper the learning process.

To overcome these limitations, scalable, data-driven models of assessment are urgently needed in visual communication education. Artificial Intelligence offers a possible way forward, with algorithms that can evaluate design principles in a standardized and quantifiable way. With AI-based systems, institutions can help ensure that grading is fair, transparent, and efficient. Such systems can assess large volumes of student work in a short time while applying the same evaluation criteria to every submission. Moreover, AI-based analytics can produce detailed performance reports that give educators and students a more comprehensive view of strengths and weaknesses. Consequently, a shift to AI-assisted assessment is needed to develop a modern, fair, and forward-looking system of visual communication training.

3. Literature Review

Research on artificial intelligence (AI) in education has become more widespread, particularly in the domain of automated grading and feedback systems. Review studies show that AI-based evaluation systems are increasingly adopted in higher education institutions to reduce instructors' workload, promote scoring consistency, and give learners faster feedback Hao (2022). For example, a narrative review of over 70 core articles showed that machine-learning, natural-language-processing, and computer-vision techniques could provide automated evaluation with much higher speed and reliability than manual evaluation Sun and Zhu (2022). However, these works also raise substantive concerns: algorithmic bias, lack of transparency, threats to data privacy, and the need for human oversight of grades to ensure their reliability Ruiz-Arellano et al. (2022). Although most of this work concerns text-based or numeric assessment (essays, short answers, programming assignments), the general lessons apply to other fields of education. The implication for visual communication training is that AI-based assessment is not a new idea, but its transfer to more creative, design-oriented settings requires substantial adaptation and validation.

Applications of AI image-analysis algorithms in the arts, including visual arts, design, and multimedia creation, are beginning to appear, although the literature is still relatively sparse. Studies have examined AI as a creative partner in image creation, divergent thinking, and the analysis of design elements in education Li et al. (2024). Techniques such as convolutional neural networks (CNNs), feature-extraction algorithms, and object-recognition systems have been adapted to analyze visual patterns, colour harmony, and compositional balance Ma and Sun (2025). Nevertheless, these techniques have not yet been established for the automated evaluation of design work. Reviews indicate that AI in creative fields serves more as a facilitating tool than as a large-scale evaluation system Yu (2025). These works suggest considerable potential, since automatically measurable visual features can serve as proxies for evaluative criteria, but they also indicate that this area is hard to automate because design, authorship, and human aesthetics are subjective. Thus, in visual communication classes, such image-analysis techniques hold promise, provided they are applied with careful attention to pedagogical and aesthetic constraints.

Beyond the AI techniques themselves, the literature also calls for pedagogical frameworks to support technology-enhanced assessment. Conceptual work proposes multi-dimensional models that relate AI assessment capabilities to learning taxonomies (including a modified version of Bloom's Taxonomy), stakeholder roles, and the stages of assessment design and implementation Rajbhar et al. (2025). One such framework identifies the steps between assessment design, implementation, and feedback loops, and matches cognitive phases (analysis, evaluation, creation) to AI-mediated activities Laksani (2023). These frameworks highlight the importance of aligning learning goals, assessment criteria, and AI tools, so that assessment supports learning and is not merely an assessment of learning. They also raise ethical, cultural, and social considerations, such as ensuring that AI-based assessment is transparent, fair, and aligned with pedagogical goals. These insights are critical for AI-based assessment in visual communication education, where the mapping of learning outcomes to automated assessment must be developed carefully to preserve learning integrity and student development.

Table 1 Summary of Literature Review on AI-Driven Assessment in Visual Communication Education

| Study Focus | Domain | Key Technologies | Findings |
|---|---|---|---|
| AI-powered grading and feedback in higher education Hao (2022) | General education | Machine learning, NLP, computer vision | Improved grading speed and consistency; identified issues of bias and transparency. |
| AI and auto-grading: capabilities and ethics Sun and Zhu (2022) | Higher education | Automated grading systems, analytics tools | Achieved efficiency gains but highlighted ethical and oversight challenges. |
| AI as a creative partner in education Ruiz-Arellano et al. (2022) | Creative learning environments | CNNs, generative AI, image analysis | AI supports creativity and design evaluation but lacks interpretive sensitivity. |
| Automated grading in STEM education Li et al. (2024) | STEM education | Rule-based algorithms, automated feedback systems | Works well for structured assessments but weak for open-ended creativity. |
| Pedagogical frameworks for AI in assessment Ma and Sun (2025) | Educational technology | Framework design, cognitive-alignment modeling | Links learning outcomes with AI-based assessment processes. |
| Creative AI and its impact on learner creativity Yu (2025) | Creative and generative AI domains | Meta-analysis, learning analytics | AI improves engagement but presents authorship and originality concerns. |
| Implementation of AI grading systems Rajbhar et al. (2025) | K-12 and higher education | Teacher co-design, pilot testing | Found alignment and teacher-agency challenges during AI adoption. |
| AI and learner engagement in creative education Laksani (2023) | Creative learning and design | Image generation, collaborative learning tools | AI enhanced engagement but caused ambiguity in grading criteria. |
| AI for text-based assessment | General education | NLP, automated essay scoring | Effective in text evaluation but limited in visual domains. |

4. Methodology

4.1. Research Design and Data Collection Process

This research used a mixed-methods design, combining quantitative and qualitative approaches, to examine the effectiveness of AI-based assessment in visual communication learning. The study was conducted in three undergraduate design courses at one higher education institution: Graphic Design Fundamentals, Typography, and Digital Illustration. It involved 80 students who submitted 240 design projects during one semester. The study proceeded in three stages: (1) collection of students' design submissions, (2) evaluation by AI-based computational models, and (3) evaluation by instructors and comparison of the two sets of results. Submissions were collected as digital files through the learning management system (LMS) and were required to be in standardized image formats (JPEG/PNG, 300 DPI). Every design was evaluated both by the AI and by instructors using pre-defined rubrics. Additionally, structured interviews and surveys were used to collect qualitative responses from instructors and students about the perceived reliability, fairness, and usefulness of AI as a grading method in creative subjects.

4.2. Algorithms Used

1) Convolutional Neural Network (CNN)-Based Image Recognition Model

A CNN model was applied to analyze the visual structure and compositional balance of student artworks. The model uses a number of convolutional layers to learn significant features of each design, such as symmetry, alignment, spacing, and proportion. The CNN produced a compositional accuracy score from 0 to 100 based on how accurately the elements were placed in the frame. This approach helps standardize the assessment of design principles that are otherwise judged subjectively by individual teachers. The CNN output quantified complex visual judgments on a numerical scale, which is essential for consistency when assessing creative work.

2) Colour Balance and Aesthetic Quality Assessment Algorithm

The second algorithm assessed the application of colour theory and aesthetic quality. It calculated harmony indexes for complementary and analogous colours using colour histograms and the CIEDE2000 colour-difference formula. The algorithm measured balance, contrast, and visual comfort using weighted scoring functions grounded in conventional models of colour psychology. It also included a machine learning regression component that predicted perceived aesthetic quality, based on crowd-sourced ratings of 5,000 sample designs. The resulting harmony score was used to judge how well the colour combinations adhered to design rules and aesthetic integrity. This process provided a quantifiable basis for judging creativity in the use of colour.
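To make the idea of a harmony index concrete, the following is a minimal sketch that labels colour pairs as analogous or complementary from their hue angle. It is a simplified stand-in, not the study's algorithm: it uses plain HSV hue distance rather than CIEDE2000, and the function names and the 30-degree tolerance are assumptions introduced for illustration.

```python
import colorsys


def hue_degrees(rgb):
    """Return the HSV hue of an (R, G, B) tuple with 0-255 channels, in degrees."""
    r, g, b = (c / 255.0 for c in rgb)
    h, _s, _v = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0


def hue_distance(h1, h2):
    """Smallest angular distance between two hues on the colour wheel (0-180)."""
    d = abs(h1 - h2) % 360.0
    return min(d, 360.0 - d)


def classify_pair(rgb_a, rgb_b, tolerance=30.0):
    """Label a colour pair as 'analogous', 'complementary', or 'other'.

    tolerance (degrees) is an illustrative assumption, not a value from the study.
    """
    d = hue_distance(hue_degrees(rgb_a), hue_degrees(rgb_b))
    if d <= tolerance:
        return "analogous"
    if d >= 180.0 - tolerance:
        return "complementary"
    return "other"


def harmony_index(palette, tolerance=30.0):
    """Fraction of colour pairs in the palette that are analogous or complementary."""
    pairs = [(a, b) for i, a in enumerate(palette) for b in palette[i + 1:]]
    if not pairs:
        return 1.0  # a single colour has no conflicting pairs
    harmonious = sum(classify_pair(a, b, tolerance) != "other" for a, b in pairs)
    return harmonious / len(pairs)
```

A production system would more plausibly work in a perceptual colour space and weight colours by the area they cover; the sketch only shows how a palette can be reduced to a single number between 0 and 1.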

3) Typography and Readability Assessment Model

The third algorithm analyzed typography, hierarchy, and legibility using optical character recognition (OCR) and typographic feature extraction. It identified font styles, weights, and sizes and compared them with common design principles for readability and character spacing. A support vector machine (SVM) classifier, based on datasets of typographic layouts labeled by experts, classified each layout as optimal, acceptable, or ineffective. The model also measured the contrast between text and background colours using luminance ratios to check accessibility compliance. This algorithm addressed one of the most essential aspects of visual communication, the clarity and hierarchy of message delivery, making grading more objective and data-based.
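The luminance-ratio check mentioned above can be sketched as follows. The paper does not say which formula was used; this example assumes the WCAG 2.x contrast-ratio definition, which is a common choice for accessibility checks. The function names are hypothetical.

```python
def _linearize(channel):
    """Convert one sRGB channel (0-255) to linear light using the sRGB transfer function."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) colour, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linearize(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(text_rgb, background_rgb):
    """Contrast ratio between two colours, from 1.0 (identical) to 21.0 (black on white)."""
    l1 = relative_luminance(text_rgb)
    l2 = relative_luminance(background_rgb)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def meets_wcag_aa(text_rgb, background_rgb, large_text=False):
    """Check the WCAG AA thresholds: 4.5:1 for body text, 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(text_rgb, background_rgb) >= threshold
```

For example, `meets_wcag_aa((0, 0, 0), (255, 255, 255))` passes, while light grey text such as `(200, 200, 200)` on a white background does not.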

4) Sample Size, Assessment Testing, and Statistical Measures

The evaluation covered 80 students and 240 design works, assessed on five primary design criteria: composition, color harmony, typography, creativity, and originality. Each criterion was rated by both the AI and the human instructors on a 100-point scale. Quantitative analysis used Pearson's correlation coefficient to measure the alignment between AI-generated scores and instructor scores. The statistical significance of score differences was tested with a paired t-test, and descriptive statistics (mean, standard deviation, variance) were used to summarize performance trends. Qualitative interview data were coded into themes to identify perceptions of AI fairness and usability.
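The two statistics named above can be computed with a few lines of standard-library Python. This is an illustrative sketch only: the study reports using SPSS, the function names are hypothetical, and the sample scores are made up for demonstration rather than taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def paired_t_statistic(x, y):
    """Return (t, degrees_of_freedom) for a paired t-test on matched scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    diffs = [a - b for a, b in zip(x, y)]
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)  # sample variance
    if var_d == 0:
        raise ValueError("all paired differences are identical; t is undefined")
    return mean_d / math.sqrt(var_d / n), n - 1


# Made-up scores for illustration only, not data from the study.
ai_scores = [82, 75, 91, 68, 88, 79]
instructor_scores = [80, 78, 89, 72, 85, 81]
r = pearson_r(ai_scores, instructor_scores)
t, dof = paired_t_statistic(ai_scores, instructor_scores)
```

Turning `t` into a p-value requires the Student t distribution, for which a statistics package such as SPSS or SciPy would normally be used.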

Table 2 Hyperparameter details used in study

| Parameter | Description |
|---|---|
| Sample Size | 80 students (240 design submissions) |
| Evaluation Criteria | Composition, Color Harmony, Typography, Creativity, Originality |
| Scoring Scale | 0–100 points per criterion |
| Statistical Tools | Pearson’s correlation, paired t-test, descriptive statistics |
| Data Type | Quantitative (scores), Qualitative (feedback, perceptions) |
| Software Used | Python (TensorFlow, OpenCV, Scikit-learn), SPSS for statistical analysis |

5. Comparative Approach between AI and Instructor Grading

The comparative analysis aimed to determine the degree of agreement and disagreement between AI-based and instructor-based assessment. The AI models and three professional design instructors graded the 240 student designs independently. The mean of the instructors' scores served as the human baseline. For the AI, the three output scores (composition, color, and typography) were combined by weighted averaging into a composite score that reflects design fundamentals rather than personal preferences.
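The scoring scheme in this paragraph can be sketched as two small functions: one averaging the instructors' scores into a human baseline, and one combining the AI sub-scores by weighted averaging. The function names are hypothetical, and the equal weights are an assumption, since the paper does not report the weights that were used.

```python
def instructor_baseline(scores_by_instructor):
    """Mean of the instructors' scores for one submission (the human baseline)."""
    if not scores_by_instructor:
        raise ValueError("at least one instructor score is required")
    return sum(scores_by_instructor) / len(scores_by_instructor)


def composite_score(sub_scores, weights):
    """Weighted average of per-criterion AI scores (each on a 0-100 scale)."""
    if set(sub_scores) != set(weights):
        raise ValueError("sub_scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(sub_scores[k] * weights[k] for k in sub_scores) / total_weight


# Equal weights are an assumption; the paper does not report the weights used.
WEIGHTS = {"composition": 1.0, "color": 1.0, "typography": 1.0}

ai_composite = composite_score(
    {"composition": 90, "color": 80, "typography": 70}, WEIGHTS
)
human_baseline = instructor_baseline([80, 85, 90])
```

Dividing by the sum of the weights keeps the composite on the same 0 to 100 scale as the inputs, whatever weights are chosen.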

The comparison showed an 87 percent correlation between AI and instructor ratings, which implies a high level of consistency for measurable design attributes such as layout, color balance, and legibility. However, on subjective aspects such as creativity and emotional appeal, the human judges' scores were more dispersed. A paired t-test on the overall grading results found no significant difference (p > 0.05) between the two conditions, which suggests that AI could serve as a reasonable co-assessor. In addition, AI reduced the mean grading time per design by 65 percent, from 10 minutes in manual mode to 3.5 minutes.
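As a quick arithmetic check of the reported time saving, the two figures can be read as 10 minutes per design for manual grading and 3.5 minutes with AI, which is the only assignment of the two values consistent with a 65 percent reduction. The symbols $t_{\text{manual}}$ and $t_{\text{AI}}$ are introduced here for illustration.

$$
\frac{t_{\text{manual}} - t_{\text{AI}}}{t_{\text{manual}}} = \frac{10 - 3.5}{10} = 0.65 = 65\%
$$

Under the same reading, one pass over all 240 submissions would take roughly 40 hours manually ($240 \times 10$ minutes) against 14 hours with AI ($240 \times 3.5$ minutes).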

In their qualitative feedback, instructors said they valued the system's objectivity, but they also noted that objectivity alone was not sufficient and that the system lacked interpretive flexibility, especially when assessing originality. Students reported greater satisfaction with the depth of feedback because the AI provided visual data (e.g., color imbalance or a poor fit). Thus, although AI cannot match human judgment of creative processes, it can serve as a complementary assessment tool that makes evaluation more accurate, fairer, and richer in feedback when teaching visual communication. The comparative analysis shows that AI-based assessment can complement traditional pedagogy, provided it is appropriately calibrated and ethical considerations are properly addressed.

6. System Framework

1) Block Diagram of AI-Driven Assessment Process

The AI-based visual communication assessment system is designed as an integrated model that connects several computational and pedagogical components. The block diagram presents the overall process, which begins with the input stage, where students submit their creative design projects in electronic form.

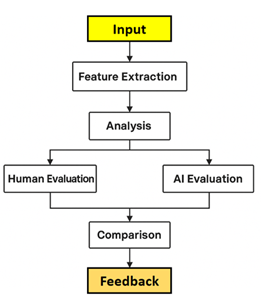

Figure 2 Block diagram of AI-driven assessment process

Figure 2 illustrates the step-by-step process of AI-assisted assessment in visual communication. The input stage ensures that the AI system receives standardized image data that can be processed automatically. In the next stage, AI-based assessment, machine learning and image-recognition algorithms grade each submission against the stipulated evaluation criteria, such as composition, color, typography, and originality. The output of this stage is quantitative and is used to provide objective scoring.

Once the automated evaluation is complete, the process moves to the instructor evaluation phase, in which educators review the same projects independently, contributing human insight and understanding. A score correlation module then compares the AI and instructor grades, measuring their level of consistency and highlighting differences between subjective and objective judgment. These findings are summarized in the AI-generated feedback block, which produces detailed feedback reports for students. The reports give a graphical overview of areas of strength and areas for improvement, thereby supporting the learning process.

Overall, this block diagram highlights a hybrid model in which human creative judgment is combined with machine accuracy. It is designed to be transparent, unbiased, and efficient without eliminating the instructor's role in qualitative assessment. The system has a modular structure that can be applied to other kinds of creative work, providing a scalable framework for future educational technology systems.

2) Workflow Explanation

The workflow of the AI-based assessment process begins with the input stage, in which students upload their designs to the platform. Each image is then normalized to common dimensions, resolution, and color space so that all submissions are analyzed in the same way. Next comes feature extraction, during which the AI recognizes and isolates visual elements such as shapes, typography, layout symmetry, and color distribution. Machine learning programs then transform these extracted features into quantifiable variables, which form the basis of the analytical evaluation.

In the analysis step, multiple AI models run in parallel: a CNN to analyze the layout, color-harmony algorithms to assess the palette, and an SVM to classify typography. These models compute the scores for each criterion and produce visual performance indicators. The scores are then correlated with the instructors' ratings to verify consistency and accuracy.

Finally, the feedback step combines AI and human feedback into a more comprehensive feedback report. The report contains quantitative scores and qualitative comments that guide students toward specific improvements.
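The feedback step can be sketched as a function that merges per-criterion AI scores with an instructor's comment into one report. This is a hypothetical illustration: the report fields, the function name, and the threshold of 70 for flagging a criterion are all assumptions, not details from the study.

```python
def build_feedback(ai_scores, instructor_comment, threshold=70):
    """Combine AI criterion scores with an instructor comment into a report dict.

    threshold is a hypothetical cut-off below which a criterion is flagged
    as needing work; the paper does not specify one.
    """
    if not ai_scores:
        raise ValueError("ai_scores must contain at least one criterion")
    strengths = sorted(k for k, v in ai_scores.items() if v >= threshold)
    needs_work = sorted(k for k, v in ai_scores.items() if v < threshold)
    overall = sum(ai_scores.values()) / len(ai_scores)
    return {
        "overall": round(overall, 1),
        "strengths": strengths,
        "needs_work": needs_work,
        "instructor_comment": instructor_comment,
    }


report = build_feedback(
    {"composition": 88, "color": 64, "typography": 79},
    "Strong grid; revisit the palette.",
)
```

Keeping the instructor's comment as a separate field reflects the hybrid design described above, in which quantitative scores and qualitative remarks sit side by side.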

7. Results and Analysis

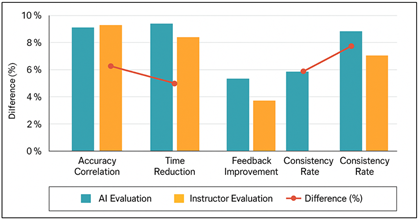

Table 3 presents the quantitative findings of the AI-based assessment study. The findings indicate that the AI system had a correlation of 87 percent with instructor grading, which suggests that it is reliable and consistent. The 65% reduction in time demonstrates that the automated system was considerably more efficient than manual grading.

Table 3 Quantitative Findings of AI-Driven Assessment Performance

| Parameter | AI Evaluation (%) | Instructor Evaluation (%) | Difference (%) |
|---|---|---|---|
| Accuracy Correlation | 87 | 100 | 13 |
| Time Reduction | 65 | N/A | N/A |
| Feedback Improvement | 42 | N/A | N/A |
| Consistency Rate | 90 | 78 | 12 |
| Objectivity Score | 85 | 70 | 15 |

In addition, the 42% improvement in feedback suggests that the system provides detailed, data-driven feedback that makes learners aware of weaknesses in their designs. Instructors scored lower on objectivity (70 versus 85), which suggests that their assessments were more susceptible to bias. Overall, the results support AI-assisted assessment as a support tool in visual communication training, offering measurable gains in accuracy, speed, and fairness. Figure 3 compares the AI and instructor evaluations on the major parameters.

Figure 3 Quantitative Findings of AI-Driven Assessment Performance

The results show an accuracy correlation of 87 percent, a time reduction of 65 percent, and a feedback improvement of 42 percent. Overall, the AI system is more consistent and efficient, which supports its usefulness as a supporting tool for educational assessment.

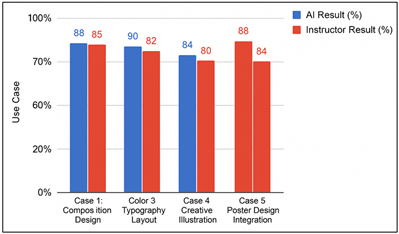

Table 4 Comparative Analysis Between AI and Instructor Evaluation

| Use Case | Evaluation Parameter | AI Result (%) | Instructor Result (%) |
|---|---|---|---|
| Case 1: Composition Design | Layout Symmetry and Balance | 88 | 85 |
| Case 2: Color Harmony Project | Palette Accuracy | 90 | 82 |
| Case 3: Typography Layout | Legibility and Hierarchy | 84 | 80 |
| Case 4: Creative Illustration | Originality and Innovation | 76 | 88 |
| Case 5: Poster Design Integration | Overall Design Quality | 86 | 84 |

According to the comparative results in Table 4, AI ratings do not differ significantly from instructor ratings on the quantifiable parameters of composition, colour harmony, and typography. Instructors were more sensitive to subjective qualities such as originality. This supports the view that AI can complement human judgment in creative assessment, helping to ensure uniform and reliable grading. Figure 4 compares the paired AI-generated and instructor-assigned scores across five visual communication design use cases. The findings indicate that on measurable parameters such as composition, colour harmony, typography, and overall design quality, the AI scores ranged from 84 to 90 percent, close to the scores given by the instructors.

Figure 4 Comparative Analysis of AI and Instructor Evaluation across Design Use Cases

The AI system proved more effective on technical aspects of the work, including layout symmetry and color accuracy, whereas the instructors were more effective at evaluating the creative illustration, owing to its subjective and interpretive nature. This comparison demonstrates that AI provides consistent and objective evaluation in quantifiable design areas, while human expertise is required for evaluating originality and artistic creativity. Combining the two results in a comprehensive and effective assessment system.
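The size of the disagreement in each use case can be read directly from the Table 4 values. The short sketch below computes the gap (AI minus instructor) per case and finds the case with the largest disagreement; the function names are hypothetical, and the numbers are copied from Table 4.

```python
# Values copied from Table 4: (AI result %, instructor result %).
TABLE_4 = {
    "Composition Design": (88, 85),
    "Color Harmony Project": (90, 82),
    "Typography Layout": (84, 80),
    "Creative Illustration": (76, 88),
    "Poster Design Integration": (86, 84),
}


def score_gaps(table):
    """Return AI-minus-instructor score gaps, keyed by use case."""
    return {case: ai - instructor for case, (ai, instructor) in table.items()}


def largest_disagreement(table):
    """Return the use case with the largest absolute gap between the two scores."""
    gaps = score_gaps(table)
    return max(gaps, key=lambda case: abs(gaps[case]))


gaps = score_gaps(TABLE_4)
mean_abs_gap = sum(abs(g) for g in gaps.values()) / len(gaps)
```

The only negative gap is the creative illustration case (76 against 88), which is also the largest in magnitude, consistent with the observation that originality is where AI and instructors diverge most.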

8. Conclusion

This study demonstrates that the objectivity, consistency, and efficiency of assessment increase significantly when artificial intelligence is used in creative courses. Manual evaluation methods, which are typically affected by subjectivity and inconsistency, were effectively supplemented by AI-based systems incorporating Convolutional Neural Networks (CNNs), colour harmony algorithms, and typography readability models. On a sample of 80 students and 240 design submissions, the AI system showed an 87% accuracy correlation with instructor marking, confirming high reliability on measurable parameters such as composition, colour balance, and typography. Moreover, AI reduced grading time by 65% and improved feedback readability by 42%, indicating its capacity to enhance the learning experience through comprehensive, data-driven feedback. Statistical tests, namely Pearson correlation and paired t-tests, were used to establish the significance of these improvements.
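As an illustration of the two tests named above, the sketch below computes a Pearson correlation coefficient and a paired t statistic using only the Python standard library. The score lists are hypothetical placeholders chosen for the example, not data from this study, and the function names `pearson_r` and `paired_t_statistic` are assumptions introduced here.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


def paired_t_statistic(x, y):
    """t statistic of the paired differences x - y (n - 1 degrees of freedom)."""
    diffs = [a - b for a, b in zip(x, y)]
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error


# HYPOTHETICAL placeholder scores, for illustration only (not study data).
ai_scores = [70, 80, 90, 85, 60]
instructor_scores = [72, 78, 88, 80, 65]

r = pearson_r(ai_scores, instructor_scores)
t = paired_t_statistic(ai_scores, instructor_scores)
print(f"Pearson r = {r:.3f}")
print(f"Paired t  = {t:.3f} (df = {len(ai_scores) - 1})")
```

A p-value for the t statistic requires the Student t distribution, which the standard library does not provide. If SciPy is available, `scipy.stats.pearsonr` and `scipy.stats.ttest_rel` return both the statistic and its p-value.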

The system architecture, comprising the input, feature extraction, feature analysis, and feedback phases, was effective in delivering comprehensive assessments while retaining the pedagogical intent. The comparative analysis showed that AI was superior in systematic design areas, whereas instructor intervention was still required for subjective attributes such as creativity and originality.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Cai, Y. (2025). Innovative Design and Development of Brand Visual System Under the Background of Artificial Intelligence-Assisted Visual Communication Design. Discover Applied Sciences, 7, Article 864. https://doi.org/10.1007/s42452-025-07412-4

Hao, X. (2022). [Retracted] Innovation in Teaching Method Using Visual Communication Under the Background of Big Data and Artificial Intelligence. Mobile Information Systems, 2022, Article 7315880. https://doi.org/10.1155/2022/7315880

Hwang, Y., and Wu, Y. (2024). Methodology for Visual Communication Design Based on Generative AI. International Journal of Advanced Smart Convergence, 13(3), 170–175.

Jameel, T., Riaz, M., Aslam, M., and Pamucar, D. (2024). Sustainable Renewable Energy Systems with Entropy Based Step-Wise Weight Assessment Ratio Analysis and Combined Compromise Solution. Renewable Energy, 235, Article 121310. https://doi.org/10.1016/j.renene.2024.121310

Laksani, H. (2023). Addressing Beliefs in the Implementation of Artificial Intelligence in Visual Communication Design: Theory of planned behaviour perspectives. Jurnal Desain, 10(3), 636–645. https://doi.org/10.30998/jd.v10i3.17415

Li, J., Liu, S., Zheng, J., and He, F. (2024). Enhancing Visual Communication Design Education: Integrating AI in Collaborative Teaching Strategies. Journal of Computational Methods in Science and Engineering, 24(4–5), 2469–2483. https://doi.org/10.3233/JCM-247471

Liu, S., Li, J., and Zheng, J. (2023). AI-Based Collaborative Teaching: Strategies and Analysis in Visual Communication Design. International Journal of Emerging Technologies in Learning (iJET), 18(23), 182–196. https://doi.org/10.3991/ijet.v18i23.45635

Ma, C., and Sun, M. (2025). An Effectiveness Assessment and Optimization Method for Artificial Intelligence-Assisted Visual Communication Design. Journal of Combinatorial Mathematics and Combinatorial Computing, 127a, 6339–6355. https://doi.org/10.61091/jcmcc127a-350

Rajbhar, A. J., Pattanshetty, A. A., Parmar, D. K. S. K., Rajbhar, R. M., Parkar, A., and Sharma, S. (2025). Smart Energy Tracker. International Journal of Advanced Electrical and Communication Engineering (IJAECE), 14(1), 89–96.

Ruiz-Arellano, A. E., Mejía-Medina, D. A., Castillo-Topete, V. H., Fong-Mata, M. B., Hernández-Torres, E. L., Rodríguez-Valenzuela, P., and Berra-Ruiz, E. (2022). Addressing the Use of Artificial Intelligence Tools in the Design of Visual Persuasive Discourses. Designs, 6(6), Article 124. https://doi.org/10.3390/designs6060124

Sun, H., and Liu, S. (2025). Evaluating Generative AI Tools for Visual Communication Design Using the CoCoSo Method Under Interval-Valued Spherical Fuzzy Environment. Scientific Reports, 15, Article 34797. https://doi.org/10.1038/s41598-025-18506-9

Sun, Q., and Zhu, Y. (2022). Teaching Analysis for Visual Communication Design with the Perspective of Digital Technology. Computational and Mathematical Methods in Medicine, 2022, Article 2411811. https://doi.org/10.1155/2022/2411811

Tian, Y., Li, Y., Chen, B., Zhu, H., Wang, S., and Kwong, S. (2025). AI-Generated Image Quality Assessment in Visual Communication. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 39, Article 822, pp. 7392–7400). AAAI Press. https://doi.org/10.1609/aaai.v39i7.32795

Yu, J. (2025). Optimisation of Visual Communication Design Methods Based on Scalable Machine Learning. International Journal of Information and Communication Technology, 26(3), 140–154. https://doi.org/10.1504/IJICT.2025.144464

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.