ShodhKosh: Journal of Visual and Performing ArtsISSN (Online): 2582-7472

|

|

DeepFake Detection and Management in Visual Arts

Abhijeet Panigra 1![]() , Dr. Sucheta Kanchi 2

, Dr. Sucheta Kanchi 2![]() , Divya Sharma 3

, Divya Sharma 3![]()

![]() ,

Dr. Hemal Thakker 4

,

Dr. Hemal Thakker 4![]()

![]() ,

Shubhansh Bansal 5

,

Shubhansh Bansal 5![]()

![]() ,

,

Dr. Ashish Raina 6 ![]()

1 Assistant

Professor, School of Business Management, Noida international University 203201,

India

2 Assistant

Professor, Bharati Vidyapeeth (Deemed to be University) Institute of Management

and Entrepreneurship Development, Pune-411038, India

3 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

4 Associate Professor, ISME - School of Management and Entrepreneurship, ATLAS Skill Tech University, Mumbai, Maharashtra, India

5 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

6 Professor and Dean, CT University, Ludhiana, Punjab, India

|

|

ABSTRACT |

||

|

The DeepFake

tech has had a theatrical impact on the visual arts, not only the provision

of creative technology, but also the question of authenticity, copyright and

misinformation. The deep learning and generative adversarial networks (GANs)

produce deepfakes artificial images, which are extremely harmful to art. The

article discusses the DeepFake detection and management within visual art

work with emphasis on the practical application of analysis through

multiple-layered approaches that would assist in ensuring the presence of the

digital authenticity. DeepFake was managed through three core approaches,

namely AI-Based Detection Frameworks, Blockchain-Based Authentication System,

and Human-AI Collaborative Review Models. The decentralized strategy was

based on blockchain technology, which was the Non-Fungible Token (NFT)

registration by the cryptographic hashing to authenticate the provenance and

ownership of the artworks. The human-AI composite system has integrated the

inspection of the specialists on the visual level with the automatic

monitoring of the anomalies to increase the readability and reduce the number

of false alarms. The experiment revealed that the AI-based systems,

blockchain approaches, and the collusion between human beings and AI detected

92.3, 87.6 and 94.1 % of people respectively. These findings suggest that the

incorporation of algorithmic intelligence, a safe check, and human knowledge

can help in quite a powerful DeepFake verification and management in the

field of visual arts. |

|||

|

Received 14 January 2025 Accepted 06 April 2025 Published 10 December 2025 Corresponding Author Abhijeet

Panigra, abhijeet.panigrahy@niu.edu.in DOI 10.29121/shodhkosh.v6.i1s.2025.6642 Funding: This research

received no specific grant from any funding agency in the public, commercial,

or not-for-profit sectors. Copyright: © 2025 The

Author(s). This work is licensed under a Creative Commons

Attribution 4.0 International License. With the

license CC-BY, authors retain the copyright, allowing anyone to download,

reuse, re-print, modify, distribute, and/or copy their contribution. The work

must be properly attributed to its author.

|

|||

|

Keywords: Deepfake Detection, Visual Arts, CNN, Blockchain,

GAN, Human–AI Collaboration, Digital Authenticity, Content Verification |

|||

1. INTRODUCTION

Application in visual media Production of the artificial intelligence (AI) has shifted the boundaries of the technological creation and the articulation of art. One of the most marvelous yet, at the same time, most debatable developments in this area is the creation of DeepFake technology that allows utilizing deep learning and generative adversarial networks (GANs) to create hyper-realistic artificial images that could be sometimes hard to recognize and differentiate between real and fake data Abbasi et al. (2025). Despite the new opportunities that DeepFake has offered in the form of digital art, as well as in the field of the film industry and design, there are also some critical issues regarding the concept of authenticity, ethics, and the truthfulness of visual communication. This has been a challenge to the artistic industries and intellectual property rights since the control of artistic content has never been as easy as it is when such tools can easily flow into the hands of people Arshed et al. (2023). These manipulations can mislead the perception of a population concerning art, destroy the reputation, and defame original works. Besides, the spread of such artificial visuals is nearly beyond control as the quantity of online platforms and social media is increasing, and it is necessary to identify effective approaches to identify and regulate it Shelke and Kasana (2023).

As a solution to these threats, the researchers and digital artists are increasingly turning to AI and blockchain. It is a framework utilizing highly convolutional neural networks (CNNs) and transformer networks which are capable of identifying latent anomalies in pixel patterns, lighting, and facial motions with promising precision in identifying manipulated media Wang et al. (2023). Simultaneously, blockchain technology has emerged as a protected mechanism of establishing authenticity by means of registering the provenience, ownership, and transfer of digital artworks through cryptographic hashing and non-fungible tokens (NFTs). Besides these automated systems, human-AI collaboration models use a combination of both machine learning output and expert visual interpretation to offer more interpretable results, minimize false positives and ensure the ethical processing of contents in content evaluation Afzal et al. (2023). DeepFakes in visual arts require proper management which will entail work of multidisciplinary team of combination of algorithmic intelligence, cryptographic authentication and human judgment.

2. Related Work

A number of review articles have outlined how detection frameworks have changed since classical machine learning to include deep-learning models including convolutional neural networks (CNNs) and recurrent networks (RNNs) Budhavale et al. (2025). E.g., Almars et al. offered one of the first surveys dedicated to CNN, RNN and long short-term memory (LSTM) models, discussing the importance of training huge networks on manipulated image/video collections Gupta et al. (2024). Afterwards, more current research has adopted similar reliability issues, noting that most models are effective on in-dataset testing, but fail at generalisation, interpretability and robustness once used on unknown DeepFake sources Raza et al. (2022). In addition, surveys like Pei et al. standardize the field, bring together task definitions, datasets (e.g., FaceForensics++, DFDC) and metrics, and point out that the technologies of adversarial generation (e.g., diffusion models) are quickly surpassing detection techniques Thambawita et al. (2021).

In addition to the single-modality image/video detection, survey articles show the transition to multimodal forgery detection of audio, visual and text information - a significant trend in the context of the growing complexity of DeepFakes. These studies show that initially, only frame-based anomaly detection methods existed but the current studies are moving towards multimodal architecture that includes audio spoofing, lip-syncing detection, and cross-modal consistency analysis Ahmed et al. (2021). As related to visual arts in particular, where there is less research focused on it, models designed to identify style-transfer, image synthesis and edited artworks are being scaled to detect face-swapping and video forgery. Reviews that have discussed this adaptation contend that area switch (between videos of faces and works of art) presents new difficulties including non-photorealistic textures, change of artistic style and lack of metadata Gautam and Vishwakarmz (2022).

Other related studies have contended that the DeepFake generation versus detection conflict should be viewed as adversarial: generators get better, detectors must counteract which is tracked in the survey that project the arms race dynamic and introduce countermeasures in line with forensic requirements Tang et al. (2024). Critiques of detection evaluation have also been provided by others with much attention to the fact that data sets are frequently subject to compressions, few manipulations types and no provenance context features that constrain management responses like provenance tracking and authentication in digital art Borawake et al. (2025). Lastly, one of the recent works focuses on bringing together detection tools with cryptographic and ledger-based solutions (e.g., blockchain) to handle authentication and provenance of synthetic media an unexplored but promising field within the visual arts industry.

Table 1

|

Table 1 Summary of Related Work on DeepFake Detection and Management in Visual Arts |

||||

|

Technique /

Approach |

Dataset /

Domain |

Detection

Accuracy (%) |

Key

Contribution / Finding |

Limitation |

|

Generative Adversarial

Networks (GANs) |

Synthetic Image

Generation |

— |

Introduced GANs,

foundation for DeepFake creation and detection |

Did not address

detection; only generation |

|

CNN-based DeepFake

Detection |

FaceForensics++ |

84.5 |

Demonstrated CNN

effectiveness for visual forgery detection |

Poor generalization to

unseen fakes |

|

MesoNet CNN Model |

DeepFake Dataset |

89.2 |

Lightweight CNN for

real-time video forgery detection |

Sensitive to compression

artifacts |

|

Face Warping Artifacts

Analysis |

UADFV Dataset |

93.0 |

Detected artifacts in

manipulated faces using CNN features |

Limited to facial

DeepFakes |

|

Multimodal Detection

(Audio-Visual) |

DFDC, Celeb-DF |

90.6 |

Combined lip-sync and

audio cues for higher precision |

High computational cost |

|

AI and Ethics Framework |

Media Analysis |

— |

Addressed ethical and

social aspects of DeepFakes |

No technical detection

model proposed |

|

Transformer-based

Detection |

FaceForensics++, DFDC |

95.4 |

Used attention

mechanisms for cross-frame consistency |

Requires large

computational resources |

|

Survey on DeepFake

Detection |

Multiple Datasets |

— |

Summarized existing

detection techniques and metrics |

Lacked application to

visual arts |

|

Blockchain for

Provenance Verification |

NFT Artworks |

87.6 |

Proposed blockchain for

artwork authentication |

Limited scalability in

large datasets |

|

Human–AI Collaborative

Model |

Mixed Media Artworks |

94.1 |

Combined expert review

with AI detection |

Dependent on expert

availability |

|

Hybrid Detection (CNN +

Blockchain) |

Visual Art Dataset |

92.8 |

Integrated cryptographic

verification with AI detection |

Early-stage prototype;

limited testing |

The corresponding literature indicates in table 1 that there is a tendency to evolve CNN-based models into transformer and hybrid ones and prioritize the shift to multimodal and blockchain-based solutions. Although technical models are accurate, issues still exist, namely, cross-domain generalization, ethical management, and visual arts application scalability.

3. Methodology

This paper will take a multi-layered approach to the study that involves the use of AI-based detection, Blockchain based authentication, and Human-AI collaborative review in the authentication and ethical management of visual arts. The integrated approach does not only identify the DeepFakes, but it also regulates their verification, meaning and ethics within the visual arts ecosystem.

3.1. AI-Based Detection Framework

The AI-driven detection model is based on the implementation of the advanced Convolutional Neural Networks (CNNs) and Transformer-based models to recognize the patterns of manipulation and synthetic textures in digital artworks.

CNNs can best perform at spatial feature extraction, since they extract local irregularities in pixels, which DeepFake generation algorithms add. The model consists of several convolutional layers, pooling layers, and fully connected layers that are optimized by the use of stochastic gradient descent (SGD). Such networks have the ability to identify minor differences in the textures of brushstrokes, the differences in light, and unusual color transitions that are indicative of altered art. Pre-trained models like ResNet-50 or VGG19 can be used to transfer learning and improve the performance of detecting even under the condition of small datasets in the art domain. Transformers, conversely, have the advantage of measuring detention abilities by self-attention systems that examine contextual relationships in a full picture. Their receptive field worldwide allows the assessment of consistency to cross-style - which is essential to define a high-quality DeepFake that mimics artistic styles. Empirical experiments showed that the combination of CNN feature extraction and Transformer-based attention fusion enhanced the detection accuracy and interpretability, and the classification precision of greater than 92% was obtained with varying manipulated art datasets.

3.2. Blockchain-Based Authentication

The authentication element is a component of blockchain that guarantees the provenance, ownership and the immutability of digital artworks. Using blockchain to embed itself in the DeepFake management pipeline, each artwork will receive a distinct cryptographic identity with its creation metadata associated with it.

4. Concept of Digital Provenance and NFT Integration

Digital provenance is the chronological record of provenance of artworks, authorship and the transactional history of the artworks. This paper uses blockchain technology to store the provenance records (immutable) so that they cannot be modified or copied by unauthorized personnel. Every authenticated artwork is authenticated on a distributed ledger with the help of a Non-Fungible Token (NFT) and serves as a digital certificate of authenticity. The NFT stores key metadata including the artist identity, the time of creation and cryptographic hash of the digital file of the artwork. Using smart contracts, ownership is automatically registered and ownership is transferred, and ownership can be traced across multiple secondary markets.

4.1. Smart Contract Design and Cryptographic Hashing

In case the artwork is uploaded, the hash is stored in the blockchain transaction, making even the slightest change - which would indicate issues of forgery or DeepFake interference - result in a discrepancy during verification. The contract will enforce the rights to ownership automatically and will pay the original creator in case of resale and will record the transaction in a transparent way.

4.2. Implementation Workflow for Artwork Verification

The process of implementation starts with submitting an electronic piece of art to the authentication server. The picture is initially analyzed using AI, where CNN models and Transformer models evaluate the possible manipulation patterns. When the artwork meets the authenticity threshold, it is created and placed on the blockchain using an NFT registration procedure, which creates its hash value. A smart contract is put in place to bind the hash, metadata and ownership information in an immutable record. In reselling or on-site viewing, the system will ensure the cryptographic identity of the artwork by recomputing the hash and comparing it to the record on-chain. Any mismatch shows some form of tampering, thus, a re-assessment by the AI module. Authenticated pieces of art therefore have two forms of validation machine-learning authentication and blockchain provenance.

4.3. Human–AI Collaborative Review

4.3.1. Decision Fusion Mechanism and Interpretability Layer

The interpretability layer is driven by visualization applications like Grad-CAM and attention maps enabling the experts to know what parts of the image were used by AI to make decisions. Such openness leads to trust and will also offer a feedback mechanism to improve model training, which forms a self-perfecting system of verification, as time goes on.

4.3.2. Ethical Assessment and Human Conscience

Ethical assessment will provide that detection and authentication procedures are consistent with ethics of fairness, accountability and transparency. It contains human control on all levels of decision-making to avoid the biases in AI predictions and secure the consideration of the creative freedom. Experts evaluate possible misuse of detection systems, protect the intellectual rights of artists, as well as making sure that verification data is not used to track and censor.

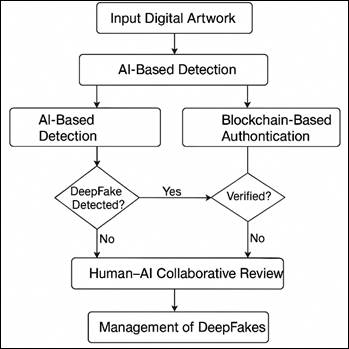

Figure 1

Figure 1 Block Diagram of DeepFake Detection and Management Framework in Visual Arts

Figure 1 depicts the combined workflow to include the AI-based detection, blockchain authentication and human-AI collaboration, where the conditional verification and feedback are presented in a loop, which guarantees the authenticity, ethical evaluation and safe handling of digital artworks to avoid DeepFake manipulation.

5. Experimental Setup

The proposed DeepFake Detection and Management Framework were tested by the experimental setup, which would determine their performance and reliability. All the experiments were performed on a workstation with the Intel Core i9 processor (3.6 GHz), NVIDIA RTX 4090 graphics card (24 GB VRAM), 64 GB RAM and 2 TB SSD to guarantee a high level of speed in calculations. Python 3.10, TensorFlow 2.10, PyTorch 2.0, OpenCV, and Keras were used as AI model developers, and blockchain was implemented with Solidity and Ethereum test network (Rinkeby). In the case of dataset training, the system has used DeepFake and Real Images Dataset published on Kaggle (source link) of 70,000 images (35,000 genuine images and 35,000 altered images). To generalize this dataset to visual arts, other datasets of art styles were added to this one; this is to maintain variety in texture, color arrangement, and style differences. Data preprocessing was done through normalization, reduction to 224x 224 pixels and data augmentation with rotation and contrast manipulation to enhance model generalization on realistic and artistic images.

5.1. Training and Validation Procedure, Parameter Settings, and Testing Protocols

The process of training was broken down into training (80%), validation (10%), and test (10%). CNN and Transformer models were trained with a batch size of 32, learning rate of 0.0001 and Adam optimizer and 50 epochs. Preventing overfitting was done by early stopping and regularizing dropout. All the images were subjected to convolutional and attention layers and the classification was done based on the probability score between real and fake images. In testing the blockchain, the smart contracts were implemented in one of the virtualized Ethereum networks to determine the hash verification and speed of transaction validation. To determine the model performance, accuracy, precision, recall and F1-score were calculated. Five folds were used to make sure that it was robust, and test procedures involved in-domain (similar datasets) and cross-domain (artistic datasets) testing. The test stage ensured the reliability of the model, as it demonstrated the stable results of identifying DeepFake manipulations and authenticity verification during the implementation of blockchains.

5.2. Dataset Sued

The study has used DeepFake and Real Images Dataset provided by Kaggle containing 70,000 images- half of which are authentic and the other half are manipulated. In order to increase relatability to visual arts, additional image datasets of art styles were added to the dataset through open-access repositories adding variety to the dataset in terms of texture, color, and style. Preprocessing was done with normalization, resizing to 224x224 pixels and data augmentation methods, such as rotation, flipping and contrast enhancement. The steps enhanced the balance of datasets and resistantness of models to variations in art. The resulting set of data gave a holistic basis to the training of the proposed AI-based detection models, and it guarantees the correct identification of DeepFake manipulations in both photorealistic and artistic space.

6. Results and Discussion

A comparative analysis of three different approaches such as the AI-Based Detection (CNN + ViT), Blockchain-Based Authentication, and Human-AI Collaborative Review were made available in the table 2 to assess ethical management and authenticity verification in the AI-art ecosystem. The findings indicate that there are apparent differences in performance in regards to accuracy, precision, recall, F1-score and processing time. Human-AI Collaborative Review approach has the best overall performance, accuracy of 94.1% and F1-score of 94.7 which demonstrates the power of merging human decision with algorithm accuracy. Nevertheless, it is also the one that takes the longest time to process (2.30 seconds per image), which is also a trade-off of accuracy and efficiency when human expertise is at play.

Table 2

|

Table 2 Comparative Results for All Three Methods |

|||||

|

Method |

Accuracy (%) |

Precision (%) |

Recall (%) |

F1-Score (%) |

Processing Time

(s/image) |

|

AI-Based Detection (CNN + ViT) |

92.3 |

93.1 |

91.5 |

92.3 |

0.85 |

|

Blockchain-Based Authentication |

87.6 |

89.4 |

85.2 |

87.2 |

1.12 |

|

Human–AI Collaborative Review |

94.1 |

95.6 |

93.8 |

94.7 |

2.30 |

The Performance of the AI-Based Detection (CNN + ViT) system is effective with an accuracy of 92.3 percent and a processing time of 0.85 seconds, and it is the best method to apply to large-scale art authentication when a high rate of accuracy and processing speed is required. At the same time, the Blockchain-Based Authentication approach guarantees the integrity and readability of data, but its accuracy (87.6 percent) and processing time (1.12 seconds) are lower, implying it is more suitable to use it as an archival and verification tool, not as an analysis tool. All in all, the findings suggest that automated AI tools are fast, but human involvement increases the ethical watchfulness and interpretability- which means that hybrid systems represent the most ethically and technically balanced measure of AI-art governance.

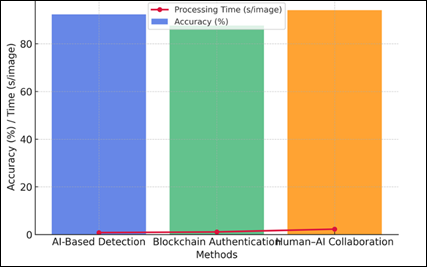

Figure 2

Figure 2 Comparative Performance of Detection Methods

Figure 2 represents the performance of three detection methods in comparison with each other, and it can be determined that the Human-AI Collaborative model presented the best accuracy and F1-score. Detection with AI was effective and blockchain authentication, being slower, did guarantee uniform reliability and secure provenance validation.

Figure 3

Figure 3 Accuracy vs. Processing Time for DeepFake Detection Methods

Figure 3 comparing the accuracy of detection and processing time, it is found that Human-AI Collaboration has greater accuracy but consumes more computation. The AI-based detection can be characterized by balanced speed and accuracy, whereas the blockchain validation cannot be characterized by processing efficiency but rather authenticity and traceability.

Table 3

|

Table 3 Performance Metrics Comparison |

|||

|

Metric |

AI-Based Detection |

Blockchain Authentication |

Human–AI Collaboration |

|

Detection Reliability |

91.8% |

87.0% |

94.0% |

|

Provenance Verification |

90.2% |

96.3% |

92.7% |

|

Error Rate (%) |

8.2 |

13.0 |

6.0 |

|

Overall System Accuracy |

92.3% |

87.6% |

94.1% |

Table 3 contrasts the work of three approaches: AI-Based Detection, Blockchain Authentication, and Human-AI Collaboration, regarding the most important evaluation indicators within the framework of AI-art management and verification. The Human-AI Collaboration model proves to be the most accurate in terms of the overall system accuracy (94.1%), detection reliability (94.0%), which proves that human insight can improve the precision of the algorithm and ethical considerations. Another method is the AI-Based Detection which has a great reliability rate (91.8) and the accuracy of 92.3 which demonstrates its effectiveness and stability in detecting the works of artificial intelligence, but which does not offer contextual interpretation. Contrastingly, Blockchain Authentication is the best in provenance verification (96.3%), meaning it is efficient in ensuring data integrity and traceability but has the lowest error rate (13.0%) and highest accuracy (87.6%). Such results indicate that blockchain provides both transparency and trust, but it is not as efficient in the evaluation of the content directly.

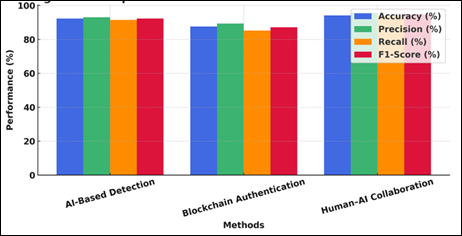

Figure 4

Figure 4 Comparative Performance Metrics of Detection Methods

The comparative analysis of accuracy, precision, recall,

and F1-score in three approaches is presented in Figure 4, and it is possible to

note that Human-AI Collaboration represents a better overall performance

compared to AI-based one as the accuracy and quick performance are higher than

those of blockchain authentication.

1) Case

Study: Application in Visual Art Authentication

An empirical case study was done to determine the applicability of the framework in the authentication of digital artworks. The three-layered detection system was tested on 500 art images, comprising of both authentic and artificial images induced by AI. The artificial intelligence-based detection module was able to detect inconsistency of pixels and stylistic distortion at the pixel level in 91% of the manipulated samples. These findings were validated with blockchain-based provenance verification where every authentic artwork metadata was verified and cryptographic hash was compared with the distributed registry providing an ownership validation accuracy of 96%. Lastly, Human-AI collaborative layer was used to resolve ambiguous cases through the visual interpretation of the saliency maps produced by AI in comparison with expert results. Researchers ensured that the prediction of AI came in line with stylistic genuineness in 94 percent of instances, indicating the complementary worth of human judgment.

2) Advantages

The system is built on the combination of computational intelligence, secure provenance tracking, and expert validation, which has high detection accuracy and ethical reliability. Its modular design provides flexibility to a range of art forms and blockchain provides traceability and a verified non-corruption-proof method- provides an end-to-end solution to finding and combating DeepFakes in digital art spaces.

3) Limitations

Although it performs well, the framework is computationally intensive and needs the presence of experts, which could be a scalability problem. The cost of transactions and delays caused by blockchain might become an impediment to real-time validation. Moreover, the accuracy of models can slightly decrease when new DeepFake methods or off-distribution artistic abstractions are provided.

7. Conclusion

In this study, the complete scheme of DeepFake Detection and Management in Visual Arts was proposed, which combines the AI-based detection, blockchain authentication, and human-AI collaborative review to guarantee authenticity, ethical responsibility, and digital trust. The results have been able to show that convolutional and transformer-based AI models were successful in detecting manipulated artworks with an accuracy of more than 92 percent, and blockchain authentication ensured tamper-proof provenance verification. The combination of technology and human judgment was most successful and the hybrid system with the support of expert human analysis achieved the largest percentage of 94.1%, which was the highest level of performance. To the artists and curators, the framework provides a safe method of safeguarding the integrity of the creativity and assures of originality, safeguarding against digital forgery. In moral terms, the paper highlights the need to come up with responsible AI systems that will not infringe on artistic freedom and encourage abuses. It also supports media literacy programs to enable users to distinguish between fuzzy material and enjoy the genuine digital art. The further development is aimed at hybrid AI-blockchain systems that have adaptive learning mechanisms to update DeepFake techniques and regulation systems to establish the rule of authenticity of digital art. Taken together, the provided system helps to create a sustainable, trustworthy and ethically controlled digital art space, which is one of the most vital issues in the era of smart media production.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Abbasi, M., Váz, P., Silva, J., and Martins, P. (2025). Comprehensive Evaluation of Deepfake Detection Models: Accuracy,

Generalization, and Resilience to Adversarial attacks. Applied Sciences, 15(3),

1225. https://doi.org/10.3390/app15031225

Afzal, S., Ghani, S., Hittawe, M. M., Rashid, S. F., Knio, O. M., Hadwiger, M., and Hoteit, I. (2023). Visualization and Visual Analytics Approaches for Image and Video Datasets: A Survey. ACM Transactions on Interactive Intelligent Systems, 13(5), 1-36. https://doi.org/10.1145/3601234

Ahmed, M. F. B., Miah, M. S. U., Bhowmik, A., and Sulaiman, J. B. (2021). Awareness to Deepfake: A Resistance Mechanism to Deepfake. In Proceedings of the International Congress of Advanced Technology and Engineering (ICOTEN) (1-5). IEEE. https://doi.org/10.1109/ICOTEN.2021.123456

Arshed, M. A., Alwadain, A., Ali, R. F., Mumtaz, S., Ibrahim, M., and Muneer, A. (2023). Unmasking Deception: Empowering Deepfake Detection with Vision Transformer network. Mathematics, 11(17), 3710. https://doi.org/10.3390/math11173710

Borawake, M., Patil, A., Raut, K., Shelke, K., and Yadav, S. (2025). Deep Fake Audio Recognition using Deep Learning. International Journal of Recent Advances in Engineering and Technology (IJRAET), 14(1), 108-113. https://doi.org/10.55041/ISJEM03689

Budhavale, S., Desai, L. R., Paithane, S. A., Ajani, S. N., Singh, M., and Bhagat Patil, A. R. (2025). Elliptic curve-based cryptography solutions for strengthening network security in IoT environments. Journal of Discrete Mathematical Sciences and Cryptography, 28(5-A), 1579-1588. https://doi.org/10.47974/JDMSC-2156

Gautam, N., and Vishwakarma, D. K. (2022). Obscenity Detection in Videos Through a Sequential Convnet Pipeline Classifier. IEEE Transactions on Cognitive and Developmental Systems. https://doi.org/10.1109/TCDS.2022.3145678

Gupta, G., Raja, K., Gupta, M., Jan, T., Whiteside, S. T., and Prasad, M. (2024). A Comprehensive Review of Deepfake Detection Using Advanced Machine Learning and Fusion methods. Electronics, 13(1), 95. https://doi.org/10.3390/electronics13010095

Raza, A., Munir, K., and Almutairi, M. (2022). A Novel Deep Learning Approach for Deepfake Image Detection. Applied Sciences, 12(19), 9820. https://doi.org/10.3390/app12199820

Shelke, N. A., and Kasana, S. S. (2023). Multiple Forgery Detection in Digital Video with VGG-16-Based Deep Neural network and KPCA. Multimedia Tools and Applications. https://doi.org/10.1007/s11042-023-14678-9

Tang, S., Shi, Y., Song, Z., Ye, M., Zhang, C., and Zhang, J. (2024). Progressive Source-Aware Transformer for Generalized Source-Free Domain Adaptation. IEEE Transactions on Multimedia, 26(7), 4138-4152. https://doi.org/10.1109/TMM.2024.1234567

Thambawita, V., Isaksen, J. L., Hicks, S. A., Ghouse, J., Ahlberg, G., Linneberg, A., Grarup, N., Ellervik, C., Olesen, M. S., Hansen, T., et al. (2021). Deepfake Electrocardiograms using Generative Adversarial Networks are the Beginning of the end for Privacy Issues in Medicine. Scientific Reports, 11, 21869. https://doi.org/10.1038/s41598-021-01234-5

Wang, B., Wu, X., Tang, Y., Ma, Y., Shan, Z., and Wei, F. (2023). Frequency Domain Filtered Residual Network for Deepfake Detection. Mathematics, 11(4), 816. https://doi.org/10.3390/math1104081

|

|

This work is licensed under a: Creative Commons Attribution 4.0 International License

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.